Exploring Windows Containers: Page Files


📣 Dive into the code samples from this blog on GitHub!

A few weeks ago I received an excellent question from a community member, which sent me down the fascinating rabbit hole of Windows containers once again! The question, which I’m paraphrasing here, went something along these lines:

“Could you share some information on how page files are allocated in a Windows Server container setting?”

I honestly did not know whether page files would behave differently in a containerized setting, though I suspected that they wouldn’t. So I decided to investigate, write down my findings, and share them.

The test setup

As I was figuring things out, I used a few Azure services to perform my tests. If you’d like to try some of these tests yourself, feel free to follow along. In the GitHub repository you can find a Bicep template that provisions a number of resources. This should make it fairly straightforward to stress test the container’s (and host’s) memory, along with the associated page file.

I performed my tests using a few platform-as-a-service offerings:

- Azure Container Registry + Tasks

  - Automatically pulls the code and builds a Windows container image.

  - The built image is then pushed into the registry.

- Azure Container Instance

  - Used to run the built Windows container image.

- Azure App Service Plan P1V3

  - Runs Hyper-V isolated Windows containers.

- Azure App Services

  - Used to run the built Windows container image.

  - The memory limit for a single container has been modified to use roughly 4 GB of the 8 GB that are available.

And some infrastructure-as-a-service offerings:

- Two Azure Virtual Machines

  - One Standard_D4s_v3 and one Standard_E4-2s_v4.

  - Image: Visual Studio 2022 (latest) on Windows Server 2022.

- Two premium Managed Disks

  - P10 (128 GiB) disks.

- Two custom script extensions

  - A PowerShell script that installs Docker and the Containers and Hyper-V Windows features.

    - This becomes deprecated by September 2022.

  - A PowerShell script that installs Docker and the Containers and Hyper-V Windows features.

- Virtual Network

  - Holds the two VMs.

- Two public IP addresses

  - So we can connect to the VMs without much hassle.

- Network Security Group

  - Only allows RDP from your IP to the VMs.

Windows Container crash course

First though, let’s briefly go over the concept of Windows Containers (and by extension many other forms of containers):

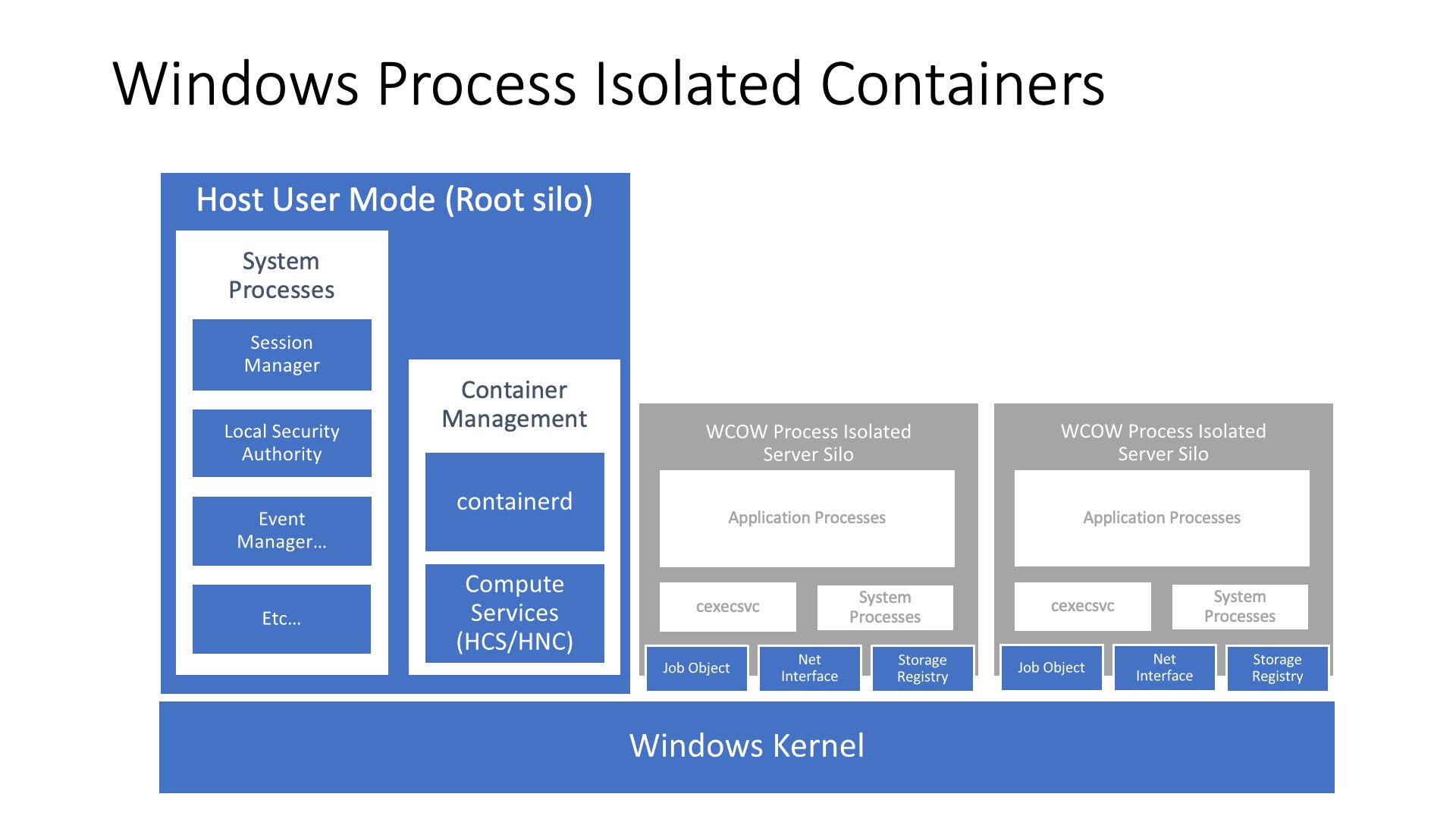

💡 A Windows (or Linux) container is just another process running on a host’s kernel, like the ones you can see when you fire up Task Manager right now. The value proposition of Windows containers, from the start, has been that you can run a container against a Windows kernel in different isolation modes: either process isolated or Hyper-V isolated.

Process isolation on Windows comes in two flavours: “Process” and “HostProcess” mode. Without going into too much detail, the technical difference between these two modes boils down to whether or not a process gets an isolated instance of certain resources (such as the network stack). With “Process” isolation, a process running inside a “Windows Server Silo” can have any of the following completely separate from the host: process table, list of users, IPC system, network stack, hostname and timezone. In “HostProcess” mode, implemented via a Windows Job Object and available on Windows Server 2022, the container has access to the host network namespace, storage, and devices when the process has been given the appropriate user privileges.

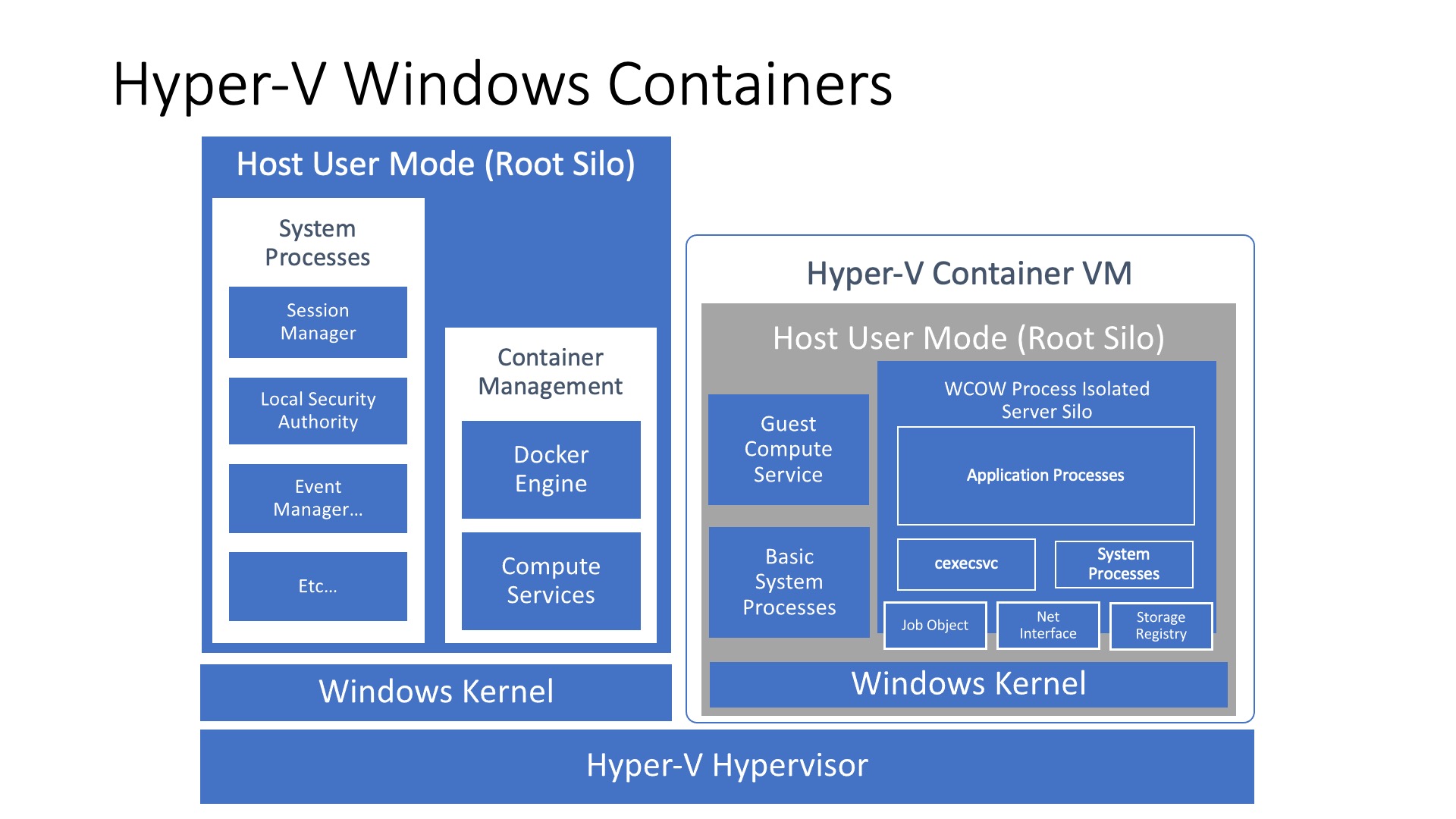

Hyper-V isolation fires up an immutable and stateless Hyper-V virtual machine, which boots an optimized version of the Windows kernel. Your process runs against this kernel, much as it would in “process isolation” mode. Because this virtual machine runs on a hypervisor, it adds another layer of isolation, which completely isolates your container from the host system.

📖 What are these “Server Silos”, “Isolated resource instances” and “Job Objects”? If you would like to familiarize yourself with some of the nitty gritty details of Windows containers, you’re in luck: I’ve written about the subject in another post, “Exploring Windows Containers”.

Page Files

A page file is an optional, hidden system file on a hard disk. It enables the operating system to remove infrequently accessed modified pages (fixed-length blocks of memory) from physical memory, so the system can use physical memory more efficiently for more frequently accessed pages. Page files can also be used to support system crash dumps and to extend how much system-committed memory (“virtual memory”) a system can support.

If we want to know how page files are allocated, we can take a quick look at the Microsoft docs, which state:

💡 “System-managed page files automatically grow up to three times the physical memory or 4 GB (whichever is larger, but no more than one-eighth of the volume size) when the system commit charge reaches 90% of the system commit limit. This assumes that enough free disk space is available to accommodate the growth.”
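To make the growth trigger in that quote a little more concrete, here is a minimal Python sketch. It assumes, as a simplification, that the system commit limit is roughly physical RAM plus the current page file size; the kernel’s real accounting has more moving parts. The function names and the example numbers (16 GiB of RAM, a 2944 MB page file) are illustrative only.

```python
def commit_limit_mib(physical_ram_mib: int, pagefile_mib: int) -> int:
    """Rough system commit limit: physical RAM plus current page file size.

    Simplification (assumption): the kernel's real accounting is more involved.
    """
    return physical_ram_mib + pagefile_mib


def should_grow_pagefile(commit_charge_mib: int, commit_limit: int) -> bool:
    """True once commit charge reaches 90% of the commit limit (per the docs quote).

    Integer arithmetic avoids floating-point surprises right at the threshold.
    """
    return commit_charge_mib * 10 >= commit_limit * 9


limit = commit_limit_mib(16_384, 2_944)     # 16 GiB of RAM plus a 2944 MB page file
print(limit)                                # 19328
print(should_grow_pagefile(17_000, limit))  # False: 17000 is below 90% (17395.2)
print(should_grow_pagefile(17_500, limit))  # True
```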

However, the book Windows Internals, 7th Edition (more specifically chapter five: memory management) goes into much more detail on exactly how all of this works on a given Windows machine. Since the 7th edition was published in 2017, and there have been several versions of Windows since then, I cannot say for certain whether page files are still allocated in the manner described in the book. After reading the Microsoft docs, though, I strongly suspect that this is still the case.

Since I decided to run a couple of tests on Azure virtual machines, there is a little caveat. If a VM SKU (the D4s_v3 in my case) has a temporary disk, the Marketplace images of Windows Server will place your page file on that temporary disk. This is mainly because the temporary disk is typically significantly faster than a 128 GiB premium managed disk, so accessing the page file should also be much faster. One of the downsides of the temporary disk is that it is a bit smaller and its size is tied to the VM SKU.

- Azure VM Standard D4s v3

  - Memory: 16 GiB

  - Temp storage (SSD): 32 GiB

On the other hand, the E4-2s_v4 does not come with a temporary disk.

- Azure VM Standard E4-2s v4

  - Memory: 32 GiB

  - Temp storage (SSD): 0 GiB

Why go off on this tangent? Well, I think it’s important to estimate how large the page file is going to grow. I did some quick napkin math (so take this with a grain of salt):

- The D4s_v3 machine:

  - Memory rule: 3 × 16 GiB = 48 GiB, which is larger than 4 GiB.

  - Volume rule: 32 GiB / 8 = 4 GiB.

  - Verdict: the page file may use “no more than one-eighth of the volume size”, and since that is the smaller of the two values, we can assume that it will grow up to 4 GiB.

- The E4-2s_v4 machine:

  - Memory rule: 3 × 32 GiB = 96 GiB, which is larger than 4 GiB.

  - Volume rule: 128 GiB / 8 = 16 GiB.

  - Verdict: again, one-eighth of the volume size is the smaller value, so we can assume that it will grow up to 16 GiB.
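The same napkin math can be written as a tiny Python function. This is only a sketch of the rule as quoted from the Microsoft docs (take the larger of 3 × RAM and 4 GB, then cap it at one-eighth of the volume); it treats GB and GiB as interchangeable, and the function name is my own.

```python
def max_system_managed_pagefile_gib(ram_gib: float, volume_gib: float) -> float:
    """Upper bound for a system-managed page file, per the quoted docs rule:
    max(3 x RAM, 4 GB), but no more than one-eighth of the volume size."""
    growth_target = max(3 * ram_gib, 4)
    volume_cap = volume_gib / 8
    return min(growth_target, volume_cap)


vms = {
    "Standard_D4s_v3 (32 GiB temp disk)": (16, 32),
    "Standard_E4-2s_v4 (128 GiB volume)": (32, 128),
}
for name, (ram, volume) in vms.items():
    print(f"{name}: up to {max_system_managed_pagefile_gib(ram, volume):g} GiB")
# Standard_D4s_v3 (32 GiB temp disk): up to 4 GiB
# Standard_E4-2s_v4 (128 GiB volume): up to 16 GiB
```

In both cases the volume cap, not the memory rule, ends up being the limiting factor.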

I left the page file at the “system-managed” setting, which seems to be the default for the Marketplace image.

Process Isolated

A process isolated container runs on the host kernel and thus many of the same rules that apply to “regular” processes apply to it. I did not think that the container would get its own unique instance of the page file and that proved to be correct. A process isolated container will even know a thing or two about the host’s page file.

We can test this by starting a process isolated container that launches a PowerShell console, with a memory limit matching the host VM’s memory (16 GiB on the D4s_v3, 32 GiB on the E4-2s_v4). I am setting this memory limit because I am going to take up as much of the host’s memory as possible, but I want to ensure that I can still use the host to a certain extent. Unlike Linux, Windows will not kill a process that uses up all of its memory; it pages memory out to disk instead, as we will see in a moment.

Let’s start by running these containers:

# On the D4s_v3 (tvl-vm-001)

docker run -it -m 16384m --rm --isolation=process mcr.microsoft.com/windows/servercore:ltsc2022 powershell

# Or on the E4-2s_v4

docker run -it -m 32768m --rm --isolation=process mcr.microsoft.com/windows/servercore:ltsc2022 powershell

Once the PowerShell console was up and running, I ran the following command to gain some more insights as to what the process knows about the host’s page file. You can learn more about WMI here.

# Inside the container

Get-WmiObject Win32_PageFileusage | Select-Object -Property *

# PSComputerName : 38922E24AA04 👈 Our container's hostname

# Status :

# Name : D:\pagefile.sys 👈 Though no D: drive was mounted

# CurrentUsage : 0

# __GENUS : 2

# __CLASS : Win32_PageFileUsage

# __SUPERCLASS : CIM_LogicalElement

# __DYNASTY : CIM_ManagedSystemElement

# __RELPATH : Win32_PageFileUsage.Name="D:\\pagefile.sys"

# __PROPERTY_COUNT : 9

# __DERIVATION : {CIM_LogicalElement, CIM_ManagedSystemElement}

# __SERVER : 38922E24AA04

# __NAMESPACE : root\cimv2

# __PATH : \\38922E24AA04\root\cimv2:Win32_PageFileUsage.Name="D:\\pagefile.sys"

# AllocatedBaseSize : 2944 👈 Currently allocated size

# Caption : D:\pagefile.sys

# Description : D:\pagefile.sys

# InstallDate :

# PeakUsage : 0

# TempPageFile : False

# Scope : System.Management.ManagementScope

# Path : \\38922E24AA04\root\cimv2:Win32_PageFileUsage.Name="D:\\pagefile.sys"

# Options : System.Management.ObjectGetOptions

# ClassPath : \\38922E24AA04\root\cimv2:Win32_PageFileUsage

# Properties : {AllocatedBaseSize, Caption, CurrentUsage, Description...}

# SystemProperties : {__GENUS, __CLASS, __SUPERCLASS, __DYNASTY...}

# Qualifiers : {dynamic, Locale, provider, UUID}

# Site :

# Container :

Set-Location -Path D:\

# Set-Location : Cannot find drive. A drive with the name 'D' does not exist.

The Name and Path properties are interesting here: we did not mount any additional drives inside our container, and a D: drive does not even exist inside it. This most likely means that the container is aware of our host’s page file.

💡 A container typically has its own isolated set of mount points. On Linux this happens via mount namespaces (see mount_namespaces(7)), and Windows has its own implementation of this concept. With these namespaces, a containerized process can see a completely different directory hierarchy than other processes on the same host, each of which may in turn see a hierarchy of its own.

The “AllocatedBaseSize” was “2944” (MB) on the D4s_v3, and on the E4-2s_v4 it was “5120”. “AllocatedBaseSize” is the actual amount of disk space allocated for use with the page file. Additionally, the path on both machines pointed to the page file located on the host’s D: drive. When I ran the same PowerShell command on the host, outside the container, I got the same result.

# On the host.

Get-WmiObject Win32_PageFileusage | Select-Object -Property *

# PSComputerName : tvl-vm-001 👈 Our VM's actual hostname

# Status :

# Name : D:\pagefile.sys

# CurrentUsage : 0

# __GENUS : 2

# __CLASS : Win32_PageFileUsage

# __SUPERCLASS : CIM_LogicalElement

# __DYNASTY : CIM_ManagedSystemElement

# __RELPATH : Win32_PageFileUsage.Name="D:\\pagefile.sys"

# __PROPERTY_COUNT : 9

# __DERIVATION : {CIM_LogicalElement, CIM_ManagedSystemElement}

# __SERVER : tvl-vm-001

# __NAMESPACE : root\cimv2

# __PATH : \\tvl-vm-001\root\cimv2:Win32_PageFileUsage.Name="D:\\pagefile.sys"

# AllocatedBaseSize : 2944

# Caption : D:\pagefile.sys

# Description : D:\pagefile.sys

# InstallDate : 20220201210905.340757+000

# PeakUsage : 0

# TempPageFile : False

# Scope : System.Management.ManagementScope

# Path : \\tvl-vm-001\root\cimv2:Win32_PageFileUsage.Name="D:\\pagefile.sys"

# Options : System.Management.ObjectGetOptions

# ClassPath : \\tvl-vm-001\root\cimv2:Win32_PageFileUsage

# Properties : {AllocatedBaseSize, Caption, CurrentUsage, Description...}

# SystemProperties : {__GENUS, __CLASS, __SUPERCLASS, __DYNASTY...}

# Qualifiers : {dynamic, Locale, provider, UUID}

# Site :

# Container :

Get-ChildItem -Path D:\ -Hidden

# Directory: D:\

#

#

# Mode LastWriteTime Length Name

# ---- ------------- ------ ----

# -a-hs- 2/1/2022 9:09 PM 3087007744 pagefile.sys
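As a quick sanity check, the “AllocatedBaseSize” reported by WMI (in megabytes) lines up exactly with the Length of pagefile.sys on disk, provided you read “MB” as 1024 × 1024 bytes. The two numbers below are copied from the host output above; the variable names are just for illustration.

```python
# Values copied from the host output above.
allocated_base_size_mib = 2944          # Win32_PageFileUsage.AllocatedBaseSize
pagefile_length_bytes = 3_087_007_744   # Length column of D:\pagefile.sys

length_mib = pagefile_length_bytes / (1024 * 1024)
print(f"pagefile.sys on disk: {length_mib:g} MiB")  # pagefile.sys on disk: 2944 MiB
print(length_mib == allocated_base_size_mib)        # True
```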

The way I understand it, since the page file is allocated at system startup, it does not matter to the process isolated container what memory or storage limit I impose: the container just happily keeps on using the host’s page file settings. We can use a tool like Sysinternals’ Testlimit to allocate a significant amount of memory, so much that it causes the host’s page file to expand.

Invoke-WebRequest "https://download.sysinternals.com/files/Testlimit.zip" -OutFile "TestLimit.zip"

Expand-Archive -Path ".\TestLimit.zip"

Set-Location -Path "TestLimit"

.\Testlimit64.exe -d 1 -c 17000 -accepteula

# -d Leak and touch memory in specified MBs (default is 1).

# -c Count of number of objects to allocate (default is as many as possible). This must be the last option specified.

# -accepteula Ensures the container does not freeze while waiting for us to accept the EULA through a UI prompt (which we can't see).

# Testlimit v5.24 - test Windows limits

# Copyright (C) 2012-2015 Mark Russinovich

# Sysinternals - www.sysinternals.com

#

# Process ID: 6836

#

# Leaking private bytes with touch 1 MB at a time...

# Leaked 16200 MB of private memory (16200 MB total leaked). Lasterror: 1455

# The paging file is too small for this operation to complete.

# Leaked 0 MB of private memory (16200 MB total leaked). Lasterror: 1455

# The paging file is too small for this operation to complete.

# Sleeping for 5 seconds to allow for paging file expansion...
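If you repeat this test a few times, it can help to pull the numbers out of Testlimit’s output rather than eyeballing them. The sketch below is a hypothetical helper: its regular expression is based only on the lines shown above, not on any documented Testlimit output format, so treat it as an assumption that may need tweaking.

```python
import re

# Testlimit output as shown above (copied verbatim, minus the comment markers).
TESTLIMIT_OUTPUT = """\
Leaking private bytes with touch 1 MB at a time...
Leaked 16200 MB of private memory (16200 MB total leaked). Lasterror: 1455
The paging file is too small for this operation to complete.
Leaked 0 MB of private memory (16200 MB total leaked). Lasterror: 1455
The paging file is too small for this operation to complete.
"""

# Assumed line shape; \w+ also matches "shared" for runs started with -s.
LEAK_LINE = re.compile(
    r"Leaked (\d+) MB of \w+ memory \((\d+) MB total leaked\)\. Lasterror: (\d+)"
)


def summarize(output: str) -> tuple[int, set[int]]:
    """Return (total MB leaked, set of Win32 error codes reported)."""
    total, errors = 0, set()
    for match in LEAK_LINE.finditer(output):
        total = max(total, int(match.group(2)))
        errors.add(int(match.group(3)))
    return total, errors


total_mb, errors = summarize(TESTLIMIT_OUTPUT)
requested_mb = 1 * 17_000  # -d 1 -c 17000: 17000 blocks of 1 MB
print(total_mb, sorted(errors))  # 16200 [1455]
print(requested_mb - total_mb)   # 800
```

In this run Testlimit stopped about 800 MB short of what was requested, which is where the “paging file is too small” errors kick in.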

As that is happening, we can take a look on the host side to see what is going on with our page file.

Get-WmiObject Win32_PageFileusage | Select-Object -Property *

# PSComputerName : tvl-vm-001

# Status :

# Name : D:\pagefile.sys

# CurrentUsage : 4095

# __GENUS : 2

# __CLASS : Win32_PageFileUsage

# __SUPERCLASS : CIM_LogicalElement

# __DYNASTY : CIM_ManagedSystemElement

# __RELPATH : Win32_PageFileUsage.Name="D:\\pagefile.sys"

# __PROPERTY_COUNT : 9

# __DERIVATION : {CIM_LogicalElement, CIM_ManagedSystemElement}

# __SERVER : tvl-vm-001

# __NAMESPACE : root\cimv2

# __PATH : \\tvl-vm-001\root\cimv2:Win32_PageFileUsage.Name="D:\\pagefile.sys"

# AllocatedBaseSize : 4095 👈 It grew up to 4 GiBs!

# Caption : D:\pagefile.sys

# Description : D:\pagefile.sys

# InstallDate : 20220203220107.662896+000

# PeakUsage : 4095

# TempPageFile : False

# Scope : System.Management.ManagementScope

# Path : \\tvl-vm-001\root\cimv2:Win32_PageFileUsage.Name="D:\\pagefile.sys"

# Options : System.Management.ObjectGetOptions

# ClassPath : \\tvl-vm-001\root\cimv2:Win32_PageFileUsage

# Properties : {AllocatedBaseSize, Caption, CurrentUsage, Description...}

# SystemProperties : {__GENUS, __CLASS, __SUPERCLASS, __DYNASTY...}

# Qualifiers : {dynamic, Locale, provider, UUID}

# Site :

# Container :

The page file on the host grew, due to the actions of our Windows container. This proves, again, that a containerized process is still just another process running on a Windows host. To me, this looks like exactly what the Windows kernel is supposed to do when a process starts using up all of the host’s memory.

In the Kubernetes docs, there is a great bit of information which can help us understand what is behind the scenes:

“Windows does not have an out-of-memory process killer as Linux does. Windows always treats all user-mode memory allocations as virtual, and pagefiles are mandatory. Windows nodes do not overcommit memory for processes running in containers. The net effect is that Windows won’t reach out of memory conditions the same way Linux does, and processes page to disk instead of being subject to out of memory (OOM) termination. If memory is over-provisioned and all physical memory is exhausted, then paging can slow down performance.”

Hyper-V isolated

When it comes to allocating a page file for a Hyper-V isolated container, it should conceptually be the same as with process isolated containers. The difference here is that the Windows kernel runs inside a Hyper-V virtual machine, but from the process’s point of view it is doing the same thing as in process isolation mode. It’s wrapped inside an additional VM that the containerized process does not necessarily know anything about.

To run a Hyper-V isolated container, you can run the following commands:

# If you've followed the previous set of instructions,

# you might want to consider rebooting the Azure VM first so we can start with a clean slate.

# On the D4s_v3 (tvl-vm-001)

docker run -it -m 12000m --rm --isolation=hyperv mcr.microsoft.com/windows/servercore:ltsc2022 powershell

# Or on the E4-2s_v4

docker run -it -m 25000m --rm --isolation=hyperv mcr.microsoft.com/windows/servercore:ltsc2022 powershell

Just as before, we can take a look at the amount of disk space allocated for use with the page file, by inspecting the “AllocatedBaseSize”.

Get-WmiObject Win32_PageFileusage | Select-Object -Property *

# PSComputerName : C662B8730CB2

# Status :

# Name : C:\pagefile.sys

# CurrentUsage : 0

# __GENUS : 2

# __CLASS : Win32_PageFileUsage

# __SUPERCLASS : CIM_LogicalElement

# __DYNASTY : CIM_ManagedSystemElement

# __RELPATH : Win32_PageFileUsage.Name="C:\\pagefile.sys"

# __PROPERTY_COUNT : 9

# __DERIVATION : {CIM_LogicalElement, CIM_ManagedSystemElement}

# __SERVER : C662B8730CB2

# __NAMESPACE : root\cimv2

# __PATH : \\C662B8730CB2\root\cimv2:Win32_PageFileUsage.Name="C:\\pagefile.sys"

# AllocatedBaseSize : 1728 👈

# Caption : C:\pagefile.sys

# Description : C:\pagefile.sys

# InstallDate :

# PeakUsage : 0

# TempPageFile : False

# Scope : System.Management.ManagementScope

# Path : \\C662B8730CB2\root\cimv2:Win32_PageFileUsage.Name="C:\\pagefile.sys"

# Options : System.Management.ObjectGetOptions

# ClassPath : \\C662B8730CB2\root\cimv2:Win32_PageFileUsage

# Properties : {AllocatedBaseSize, Caption, CurrentUsage, Description...}

# SystemProperties : {__GENUS, __CLASS, __SUPERCLASS, __DYNASTY...}

# Qualifiers : {dynamic, Locale, provider, UUID}

# Site :

# Container :

As you can see, the page file is reported at “C:\pagefile.sys”. Since the container runs against the kernel of its utility VM, this is presumably the utility VM’s C: drive rather than the host’s. This is very similar to how things work in “Process isolation” mode, and, as before, you won’t find the page file in the container’s own “C:\” directory.

Get-ChildItem -Path "C:\" -Hidden -Force # Only hidden files and folders

# Directory: C:\

#

#

# Mode LastWriteTime Length Name

# ---- ------------- ------ ----

# d--hs- 1/16/2022 5:17 AM Boot

# d--hsl 5/8/2021 5:28 PM Documents and Settings

# d--h-- 1/16/2022 1:18 PM ProgramData

# d--hs- 2/1/2022 9:50 PM WcSandboxState

# -arhs- 1/16/2022 5:11 AM 436854 bootmgr

# -a-hs- 5/8/2021 8:11 AM 1 BOOTNXT

# -a-hs- 1/16/2022 1:18 PM 12288 DumpStack.log.tmp

Now here’s the bit that might seem strange at first: regardless of whether you run this on the D4s_v3 or the E4-2s_v4, you always end up with an “AllocatedBaseSize” of 1728. I was puzzled by this at first, but after giving it some thought I think I may have an explanation. The virtual machine used with Hyper-V containers is always created from the same base: the utility VM. I think it’s perfectly valid to assume that it has been configured in such a way that the page file’s “AllocatedBaseSize” is set to 1728.

If we use Testlimit this time, we need to make sure that we do not fill up all of the available memory; otherwise we will not be able to execute any other commands.

Invoke-WebRequest "https://download.sysinternals.com/files/Testlimit.zip" -OutFile "TestLimit.zip"

Expand-Archive -Path ".\TestLimit.zip"

Set-Location -Path "TestLimit"

.\Testlimit64.exe -d 1 -c 11750 -accepteula # 👈 11750 because we set our Hyper-V container's memory limit to 12000.

# -d Leak and touch memory in specified MBs (default is 1).

# -c Count of number of objects to allocate (default is as many as possible). This must be the last option specified.

# -accepteula Ensures the container does not freeze while waiting for us to accept the EULA through a UI prompt (which we can't see).

What does the page file of this Hyper-V container look like right now? We can take a look!

docker container ls

# CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

# 7c3816ad5876 mcr.microsoft.com/windows/servercore:ltsc2022 "powershell" 5 minutes ago Up 5 minutes thirsty_bell

# 👆 Take the container ID

# Use it to run a command inside the container and get its output

# 👇

docker exec 7c3816ad5876 powershell -c "Get-WmiObject Win32_PageFileusage | Select-Object -Property *"

# PSComputerName : 7C3816AD5876

# Status :

# Name : C:\pagefile.sys

# CurrentUsage : 645 👈

# __GENUS : 2

# __CLASS : Win32_PageFileUsage

# __SUPERCLASS : CIM_LogicalElement

# __DYNASTY : CIM_ManagedSystemElement

# __RELPATH : Win32_PageFileUsage.Name="C:\\pagefile.sys"

# __PROPERTY_COUNT : 9

# __DERIVATION : {CIM_LogicalElement, CIM_ManagedSystemElement}

# __SERVER : 7C3816AD5876

# __NAMESPACE : root\cimv2

# __PATH : \\7C3816AD5876\root\cimv2:Win32_PageFileUsage.Name="C:\\pagefile.sys"

# AllocatedBaseSize : 1728 👈

# Caption : C:\pagefile.sys

# Description : C:\pagefile.sys

# InstallDate :

# PeakUsage : 1131 👈

# TempPageFile : False

# Scope : System.Management.ManagementScope

# Path : \\7C3816AD5876\root\cimv2:Win32_PageFileUsage.Name="C:\\pagefile.sys"

# Options : System.Management.ObjectGetOptions

# ClassPath : \\7C3816AD5876\root\cimv2:Win32_PageFileUsage

# Properties : {AllocatedBaseSize, Caption, CurrentUsage, Description...}

# SystemProperties : {__GENUS, __CLASS, __SUPERCLASS, __DYNASTY...}

# Qualifiers : {dynamic, Locale, provider, UUID}

# Site :

# Container :

It seems like the “AllocatedBaseSize” does not expand. Maybe we need to stress our container a little more so that we can trigger the resizing of the page file. Let’s try to allocate all the memory inside our Hyper-V container.

.\Testlimit64.exe -d 1 -c 12000 -accepteula # 👈 container's memory limit

# Testlimit v5.24 - test Windows limits

# Copyright (C) 2012-2015 Mark Russinovich

# Sysinternals - www.sysinternals.com

#

# Process ID: 1156

#

# Leaking private bytes with touch 1 MB at a time...

# Leaked 11826 MB of private memory (11826 MB total leaked). Lasterror: 1455

# The paging file is too small for this operation to complete.

# Leaked 0 MB of private memory (11826 MB total leaked). Lasterror: 1455

# The paging file is too small for this operation to complete.

We can wait all we like, but the page file does not seem to expand. However, there is another way to use Testlimit to force the page file to expand: by allocating shared memory instead. Let’s start a fresh container and try that:

docker run -it -m 12000m --rm --isolation=hyperv mcr.microsoft.com/windows/servercore:ltsc2022 powershell

Invoke-WebRequest "https://download.sysinternals.com/files/Testlimit.zip" -OutFile "TestLimit.zip"

Expand-Archive -Path ".\TestLimit.zip"

Set-Location -Path "TestLimit"

.\Testlimit64.exe -s 1 -c 11750 -accepteula # 👈 note the '-s' parameter

When we query the page file’s usage, we can see that the “AllocatedBaseSize” did increase this time! 🥳

docker container ls

# CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

# f157b08c6517 mcr.microsoft.com/windows/servercore:ltsc2022 "powershell" 5 minutes ago Up 6 minutes busy_agnesi

docker exec f157 powershell -c "Get-WmiObject Win32_PageFileusage | Select-Object -Property *"

# PSComputerName : F157B08C6517

# Status :

# Name : C:\pagefile.sys

# CurrentUsage : 0

# __GENUS : 2

# __CLASS : Win32_PageFileUsage

# __SUPERCLASS : CIM_LogicalElement

# __DYNASTY : CIM_ManagedSystemElement

# __RELPATH : Win32_PageFileUsage.Name="C:\\pagefile.sys"

# __PROPERTY_COUNT : 9

# __DERIVATION : {CIM_LogicalElement, CIM_ManagedSystemElement}

# __SERVER : F157B08C6517

# __NAMESPACE : root\cimv2

# __PATH : \\F157B08C6517\root\cimv2:Win32_PageFileUsage.Name="C:\\pagefile.sys"

# AllocatedBaseSize : 2704 👈 It changed!

# Caption : C:\pagefile.sys

# Description : C:\pagefile.sys

# InstallDate :

# PeakUsage : 0

# TempPageFile : False

# Scope : System.Management.ManagementScope

# Path : \\F157B08C6517\root\cimv2:Win32_PageFileUsage.Name="C:\\pagefile.sys"

# Options : System.Management.ObjectGetOptions

# ClassPath : \\F157B08C6517\root\cimv2:Win32_PageFileUsage

# Properties : {AllocatedBaseSize, Caption, CurrentUsage, Description...}

# SystemProperties : {__GENUS, __CLASS, __SUPERCLASS, __DYNASTY...}

# Qualifiers : {dynamic, Locale, provider, UUID}

# Site :

# Container :
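To put a number on that growth, a small parser for the `Name : Value` lines that `Select-Object -Property *` prints can come in handy. This is a sketch under the assumption that every property sits on one line with ` : ` as the separator; console wrap-arounds like the broken `__PATH` line in the raw output are simply skipped. The helper name is hypothetical, and the excerpts below are copied from the outputs above (the default 1728 and the new 2704).

```python
def parse_wmi_properties(text: str) -> dict[str, str]:
    """Parse 'Name : Value' lines as printed by Select-Object -Property *.

    Lines without ' : ' (e.g. console line wraps) are ignored.
    """
    props = {}
    for line in text.splitlines():
        key, sep, value = line.partition(" : ")
        if sep:
            props[key.strip()] = value.strip()
    return props


before = parse_wmi_properties("AllocatedBaseSize : 1728\nCurrentUsage : 0\nPeakUsage : 0")
after = parse_wmi_properties("AllocatedBaseSize : 2704\nCurrentUsage : 0\nPeakUsage : 0")
growth = int(after["AllocatedBaseSize"]) - int(before["AllocatedBaseSize"])
print(f"Page file grew by {growth} MB")  # Page file grew by 976 MB
```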

What about Azure Container offerings?

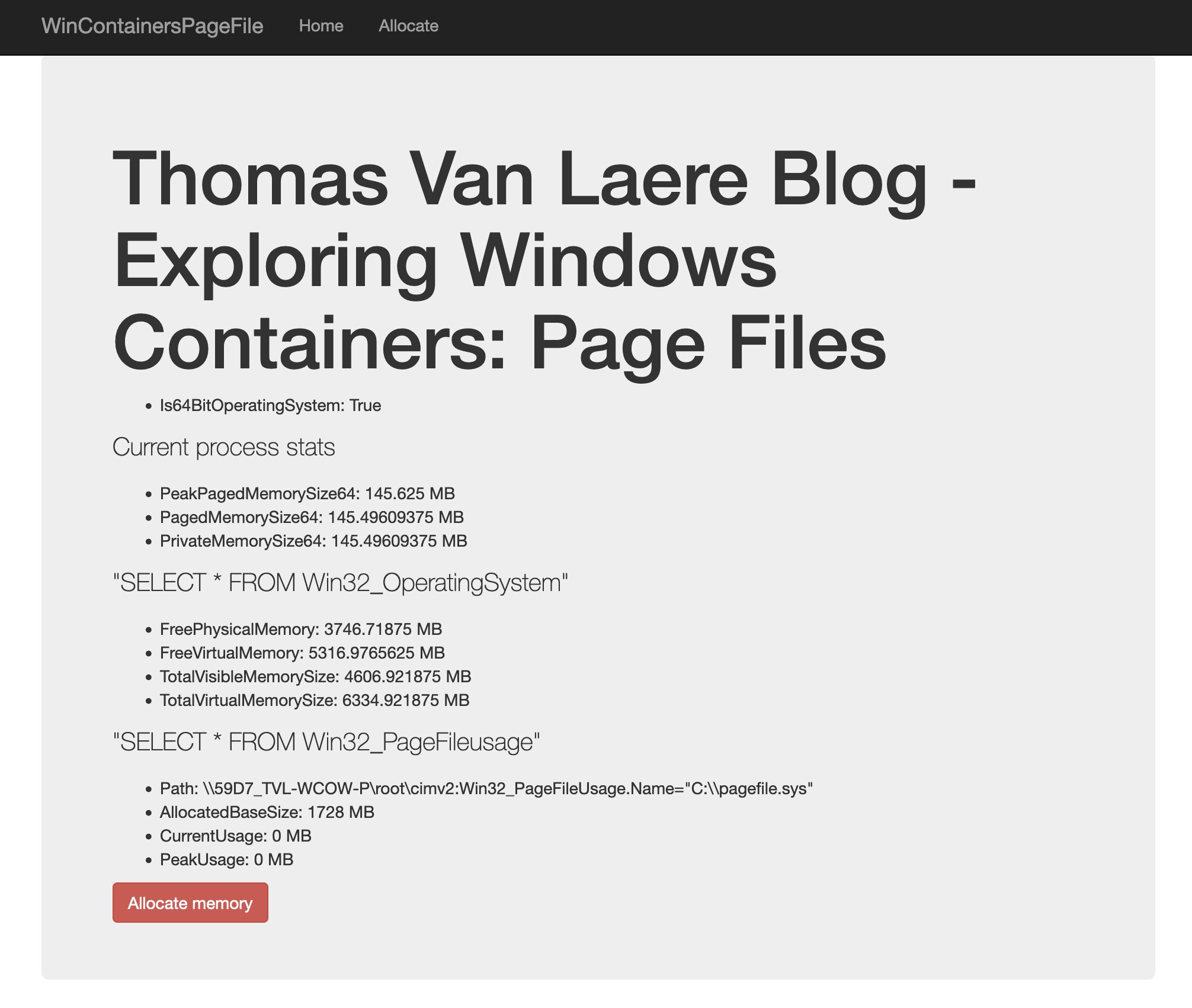

I decided to see if this behaviour would also occur if I ran the container on some of Azure’s various container offerings. I picked two platform-as-a-service offerings, App Services and Azure Container Instances, since they can run Windows Server 2019 containers. The former will run containers in Hyper-V isolated mode.

Before creating the App Services, I needed a way to output the value of “AllocatedBaseSize”. I figured I could use C# to perform a WMI query and output the result to a web page. I also added functionality to allocate a specific number of bytes, so we can force the page file to increase in size. The web app lets you perform the following allocations:

- Physical RAM

- Virtual RAM

- Create commit demand only: commits memory, but doesn’t touch any of it.

- Create commit and RAM demand: commits memory and fills the allocated blocks with zeroes, which causes the memory to actually be used.

- Create and fill up a file mapping in the system page file.

  - A file mapping is the association of a file’s contents with a portion of the virtual address space of a process. The file on disk can be any file that you want to map into memory, or it can be the system page file.
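The web app itself is written in C#, but the idea behind the last option can be sketched with Python’s standard-library `mmap` module. Passing `-1` as the file descriptor requests anonymous memory; on Windows, CPython implements this by calling CreateFileMapping with INVALID_HANDLE_VALUE, which creates a section backed by the system page file (on Linux it is an ordinary anonymous mapping). The function name and the “touch one byte per page” approach are my own assumptions, not the web app’s code.

```python
import mmap


def create_pagefile_mapping(size_bytes: int, touch: bool = True) -> mmap.mmap:
    """Create an anonymous mapping of size_bytes and optionally write to every page.

    fileno=-1 asks for anonymous memory; on Windows this is a section backed
    by the system page file, on Linux a regular anonymous mapping.
    """
    mapping = mmap.mmap(-1, size_bytes)
    if touch:
        # Writing one byte per page makes every page actually materialize,
        # roughly the "fill up" part of the web app's third option.
        for offset in range(0, size_bytes, mmap.PAGESIZE):
            mapping[offset] = 0xFF
    return mapping


# Hypothetical usage: map and touch 64 MiB, then release it.
with create_pagefile_mapping(64 * 1024 * 1024) as m:
    print(f"mapped {len(m) // (1024 * 1024)} MiB")
```

Keeping references to several such mappings alive (rather than closing them) is what would gradually push the page file towards its limit.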

So, I fired up Visual Studio and created a .NET Framework 4.8 ASP.NET MVC app that does just that. You could achieve the same result with a .NET 5 or 6 app, but since I was going to use the “mcr.microsoft.com/windows/servercore:ltsc2019” base image anyway, I thought I might as well stick with what I already know best. Once that was done, all that was left was to publish the Windows Docker image to Azure Container Registry.

💡 When you deploy my Bicep/ARM sample, which is included in my GitHub sample repository, this ASP.NET 4.8 application will be automatically built using Azure Container Registry Tasks and deployed to an App Service and an Azure Container Instance.

Neither service supported Windows Server 2022 at the time of writing this post. I was getting the following error when deploying to Azure Container Instances (ACI):

{

"status": "Failed",

"error": {

"code": "UnsupportedWindowsVersion",

"message": "Unsupported windows image version. Supported versions are 'Windows Server 2016 - Before 2B, Windows Server 2019 - Before 2B, Windows Server 2016 - After 2B, Windows Server 2019 - After 2B, Windows Server, Version 1903 - After 2B, Windows Server, Version 2004'"

}

}

And these errors when deploying to Azure App Services (P1V3):

{

"status": "Failed",

"error": {

"code": "UnsupportedMediaType",

"message": "The parameter WindowsFxVersion has an invalid value. Cannot run the specified image as a Windows Containers Web App. App Service supports Windows Containers up to Windows Server Core 2019 and Nanoserver 1809. Platform of the specified image: windows, Version: 10.0.20348.473;",

"details": [

{

"message": "The parameter WindowsFxVersion has an invalid value. Cannot run the specified image as a Windows Containers Web App. App Service supports Windows Containers up to Windows Server Core 2019 and Nanoserver 1809. Platform of the specified image: windows, Version: 10.0.20348.473;"

},

{

"code": "UnsupportedMediaType"

},

{}

]

}

}

The only real workaround here is to build a container image that does not use the Windows Server 2022 base image.

Azure App Services Pv3

Azure App Services is a platform-as-a-service offering for running web apps, including Windows containers. I cannot modify the page file settings as I normally would inside of a Windows VM. Containers running on Azure App Services P1V3 run in Hyper-V isolation and as we saw earlier, we cannot modify the state of the utility VM.

I provisioned a P1V3 App Service Plan, which comes with 2 vCPUs and 8 GB of memory. I can scale this plan up to a P2V3 (4 vCPUs, 16 GB of memory) or higher when needed. I picked these two plans because I initially assumed that a plan with more memory would show me a different value for “AllocatedBaseSize”.

After deploying the container to App Services, these were the stats that I was initially seeing:

"SELECT * FROM Win32_PageFileusage"

Path: \\59D7_TVL-WCOW-P\root\cimv2:Win32_PageFileUsage.Name="C:\\pagefile.sys"

AllocatedBaseSize: 1728 MB

CurrentUsage: 0 MB

PeakUsage: 0 MB

As I began allocating memory, I noticed that I was hitting the physical and virtual memory limits before the page file would even consider growing. I restarted the App Service so I could start with the memory wiped, and began creating and filling file mappings instead. This was the result of doing so a few times, allocating 2 GB each time:

"SELECT * FROM Win32_PageFileusage"

Path: \\59D7_TVL-WCOW-P\root\cimv2:Win32_PageFileUsage.Name="C:\\pagefile.sys"

AllocatedBaseSize: 4084 MB

CurrentUsage: 4084 MB

PeakUsage: 4084 MB

After a while, the web app would throw an error saying “CreateFileMapping - System error code: 1455”. In case you don’t know, Microsoft keeps a list of Windows system error codes:

ERROR_COMMITMENT_LIMIT

1455 (0x5AF)

The paging file is too small for this operation to complete.
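If you want your own tooling to print something friendlier than a bare number, a tiny lookup table is enough. The sketch below is hand-maintained and only contains the one code seen in this post; the table and function names are hypothetical, and you would extend the table from Microsoft’s System Error Codes list as needed.

```python
# Hand-maintained lookup for the Win32 error codes seen in this post.
WIN32_ERRORS = {
    1455: ("ERROR_COMMITMENT_LIMIT",
           "The paging file is too small for this operation to complete."),
}


def describe_win32_error(code: int) -> str:
    """Format a Win32 error code as 'decimal (0xHEX) NAME: message'."""
    name, message = WIN32_ERRORS.get(code, ("UNKNOWN", "Not in the local table."))
    return f"{code} (0x{code:X}) {name}: {message}"


print(describe_win32_error(1455))
# 1455 (0x5AF) ERROR_COMMITMENT_LIMIT: The paging file is too small for this operation to complete.
```

On a Windows machine, `ctypes.FormatError(1455)` should return the system’s own (localized) message instead, if you’d rather not maintain a table.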

Looks like we’ve hit the limit of what the page file is willing to allocate. I scaled the App Service plan up to P2V3 and modified the maximum allowed memory for a given container by setting WEBSITE_MEMORY_LIMIT_MB to 10000. I could not claim all of the available memory, since the Azure App Service management software (Kudu) has to be able to run alongside the app.

After performing a couple more tests, I got similar results:

"SELECT * FROM Win32_PageFileusage"

Path: \\DAC0_TVL-WCOW-P\root\cimv2:Win32_PageFileUsage.Name="C:\\pagefile.sys"

AllocatedBaseSize: 4084 MB

CurrentUsage: 4084 MB

PeakUsage: 4084 MB

Azure Container Instances

Azure Container Instances lets you create container groups, which are collections of containers that get scheduled on the same host machine (also known as a node). As I was running my app on ACI, I immediately noticed that the default “AllocatedBaseSize” was very different from what I’d seen before.

"SELECT * FROM Win32_PageFileusage"

Path: \\SANDBOXHOST-XYZ\root\cimv2:Win32_PageFileUsage.Name="C:\\pagefile.sys"

AllocatedBaseSize: 8192 MB

CurrentUsage: 79 MB

PeakUsage: 9566 MB

This is interesting to me because the default size of the “AllocatedBaseSize” was not set to “1728”… This made me wonder whether ACI runs Windows containers in “process isolation” mode as opposed to “Hyper-V isolation”. I think it is likely that ACI runs Windows containers in process isolation mode on a Hyper-V isolated host, but that is just me speculating as I could not find a definitive answer…

💡 I had a look at the Azure Container Instances (ACI) under the hood Azure Friday video but I couldn’t quite get a clear answer. There is a concept of a utility VM, for sure, but I am still not entirely certain whether this is the same utility VM used with Hyper-V container isolation mode. Perhaps it is the same utility VM, only slightly modified? Who knows! 😁 I cannot tell at this stage.

At this point, I am expecting the page file to behave similarly when we create and allocate a file mapping.

"SELECT * FROM Win32_PageFileusage"

Path: \\SANDBOXHOST-XYZ\root\cimv2:Win32_PageFileUsage.Name="C:\\pagefile.sys"

AllocatedBaseSize: 9566 MB

CurrentUsage: 9566 MB

PeakUsage: 9566 MB

That seems to work just fine!

Conclusion

This was a fun topic to dive into! I did not think I would end up doing this much investigating on the subject. But that’s the thing with these types of subjects… There is always this little extra layer that might be worth looking into and before you know it, there are another five distinct layers after that.

Anyway, compiling this information proved to be quite an arduous journey, and I’m sure I’ve made an error here or there. I’m also pretty sure there’s a memory leak or two in my code sample. 😁 If you’ve spotted a mistake, please feel free to let me know!