Azure Red Hat OpenShift 4

Today I’m going to jot down some thoughts on Azure Red Hat OpenShift 4, which is something that I’ve wanted to do for a long time.

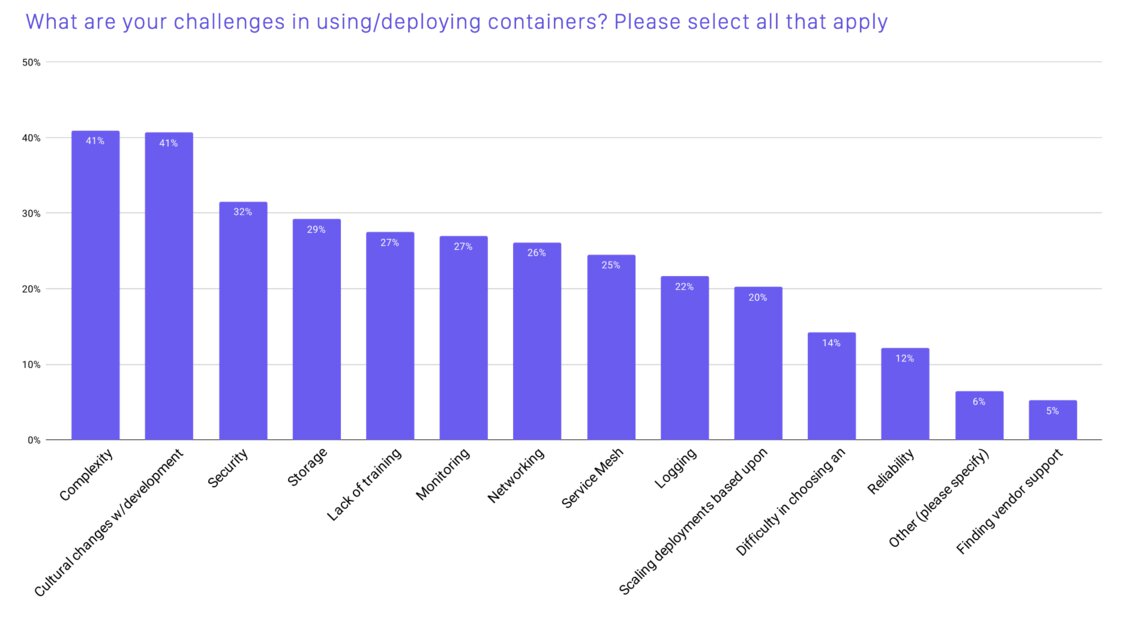

According to the CNCF 2020 survey report, using and deploying containers is still challenging, and getting your team to make the cultural shift to containers is just as difficult (if you’ve ever tried to change the way people work, you can probably relate).

On top of that, managing Kubernetes the right way is a rather difficult task in and of itself. There are lots of different variables to take into consideration when building and running a cluster all by yourself. It is certainly a great way to get a better understanding of the platform: you can learn about the potential of container orchestration and how it can add value to your business. But building a container platform for your organization to use is a very expensive endeavour.

Security is ranked as the third biggest challenge. I am inclined to agree: there is a steep learning curve if you want to be knowledgeable on how to lock down a cluster. You could certainly go a long way by applying your existing operations knowledge, but there are several container-specific topics that you need to keep in mind as well. How do I handle container image hardening? Can I scan for certain vulnerabilities in my container images? What monitoring and alerting tools do I need to use to monitor these systems? The list of questions can go on for quite a while…

Why OpenShift

If you are looking for a Kubernetes offering that is “delivered, optimized and updated as a single atomic unit”, OpenShift is worth taking a closer look at. You might think that managed Kubernetes offerings such as Azure Kubernetes Service offer just as much, which would be partially correct.

However, every Kubernetes vendor configures and operates its Kubernetes distribution differently. This is true for Azure Kubernetes Service, Google Kubernetes Engine, Amazon Elastic Kubernetes Service and others. To be clear, OpenShift is certified Kubernetes, meaning that users of OpenShift can be certain that there will be a high level of common functionality and that the platform will behave as Kubernetes users have come to expect.

Not all Kubernetes vendors provide all of the necessary tools out of the box to run container workloads. This is another way in which OpenShift is different from Azure Kubernetes Service. For example, AKS does not come with a built-in container registry or monitoring tools. You will need a couple of additional Azure services stitched into your architecture such as Azure Container Registry and Azure Monitor for Containers to fill in that gap. Both ACR and Monitor for Containers are great services that I’ve used extensively, but with OpenShift these things are all natively part of the solution.

OpenShift aims to be more portable than other vendors’ Kubernetes offerings. Whether you run OpenShift on-prem, on Azure, on AWS or elsewhere, you will still get the same level of support and security. This is great if you want to keep your options open. Should you ever want to extend or migrate to a different cloud provider, all you would need are VMs that can run OpenShift!

What’s inside the box?

OpenShift is not vanilla Kubernetes, but it is “certified Kubernetes with additional layers of abstraction that aim to increase the value of the entire solution”. It is an opinionated stack, which is not necessarily a bad thing. You are not locked into the solutions that come with OpenShift either; you are free to deviate from what Red Hat has provided you with.

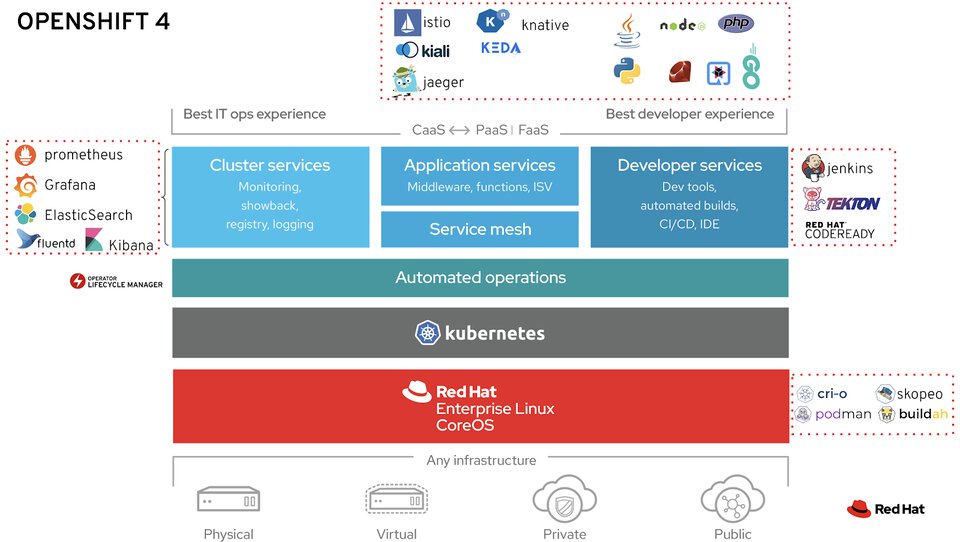

Take a look at what is inside the box:

As you can see, OpenShift comes with more than you might expect and is not afraid to make a couple of decisions for you, based on Red Hat’s own best practices and experiences with Kubernetes and those projects. You will get a bunch of carefully selected developer and operations tools, frameworks and services. There is also a web GUI for both devs and operations teams.

Whenever you update OpenShift you can be certain that Red Hat has certified that every component inside the box will still function correctly. Should you bring in your own tools, you will be responsible for ensuring that they keep working across different versions of OpenShift. If you were to deploy any of these software tools, such as Grafana, to your AKS cluster, you would be responsible for managing it. Since Grafana is part of OpenShift, you can log a support ticket and get an engineer to help you with your Grafana-related issue.

ARO 4.x

So now that we have a very basic understanding of what OpenShift contains and what it can do for you, what makes Azure Red Hat OpenShift, ARO, unique?

First and foremost:

- Architecture for ARO is jointly created, managed and supported by Microsoft and Red Hat.

- ARO is backed by a 99.95% SLA.

- Cluster components are deployed across three Azure availability zones.

- ARO is compliant with several industry-specific standards and regulations. It’s able to piggyback on several of Azure’s certifications and features, such as guaranteed encryption-at-rest.

- Everything is part of your Microsoft Azure invoice and does not require you to create a separate contract with Red Hat.

- You can purchase one-year or three-year reserved instance commitments on your master and worker nodes.

- You get support from both Microsoft and Red Hat, provided that you play by specific rules.

- Support is handled through the same Azure support experience that you’re used to.

- Microsoft and Red Hat support for ARO are co-located!

- Certain configurations for Azure Red Hat OpenShift 4 clusters can affect your cluster’s supportability. You are allowed to make some changes to internal cluster components, but before doing so take a look at what is and is not allowed.

Red Hat and Microsoft will take care of provisioning and managing ARO and installing critical patches, as well as monitoring the core cluster infrastructure for availability.

ARO 4 architecture

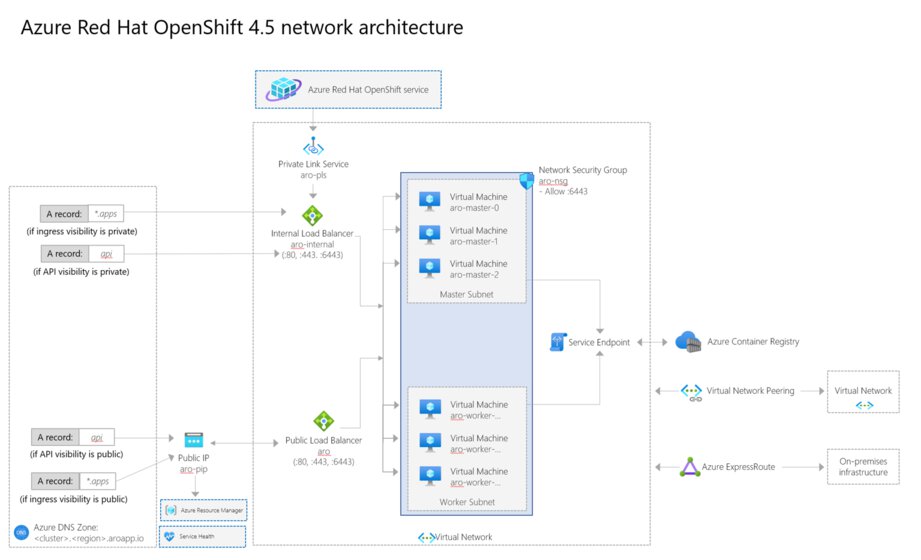

As an end-user of ARO 4, the idea is that you should not be all that concerned about the architecture. Still, it’s fun to know what the underlying parts of ARO consist of. The networking concepts section of the ARO docs has this great picture:

It looks like a straightforward Azure architecture, which is good. You will end up with a set of master and worker nodes, in separate subnets with a single network security group attached to it. Depending on your selected options during the setup process, you will receive a mix of public and/or private load balancers to access the cluster applications and the cluster API server.

To drive home the point that this is managed by both companies, you will see that there is a private link service. This is used to administer your cluster, regardless of whether it has been configured as private or public-facing.

It should be noted that the virtual network is something that you will need to provision (more on that in a second) and will therefore be in your control. This will grant you some additional networking flexibility, allowing you to use user-defined routes, VNET peering or set up various sorts of hybrid scenarios!

What about updates

Azure Red Hat OpenShift is built from specific releases of ‘OpenShift Container Platform’ (OCP), which is the self-managed, host-it-yourself edition. The contents of the box are ‘certified’ by Red Hat so that all of its parts continue to work after updating. Red Hat releases new minor versions of OCP about every three months. Patch releases are typically weekly and are only intended for critical bug fixes within a minor version.

Microsoft and Red Hat recommend that customers run the latest minor release of the major version they are running. New minor versions can include: “feature additions, enhancements, deprecations, removals, bug fixes, security enhancements and other improvement”.

To be eligible for support and to meet your obligations under the ARO SLA, you must run either the latest minor version or the one directly before it. Whenever a new minor version is released, you are expected to upgrade your cluster within 30 days. You will also be informed at least 30 days before a new version’s release. To receive these notices, you can subscribe to ARO service health notifications within Azure or track the releases on GitHub!
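The “latest or previous minor version” rule boils down to a small comparison. Here is a minimal sketch, assuming versions are plain `major.minor.patch` strings. The `is_supported_minor` helper is hypothetical and not part of any Azure or Red Hat tooling, and the version numbers are purely illustrative. The version a cluster is running can be read with `az aro show --query clusterProfile.version -o tsv`.

```shell
# Hypothetical helper: succeeds when the cluster's minor version is the latest
# minor release or the one directly before it (within the same major version).
is_supported_minor() {
  current="$1"   # e.g. 4.5.16
  latest="$2"    # e.g. 4.6.8

  current_major=${current%%.*}
  latest_major=${latest%%.*}

  # Strip the major part, then keep everything up to the next dot.
  current_rest=${current#*.}
  latest_rest=${latest#*.}
  current_minor=${current_rest%%.*}
  latest_minor=${latest_rest%%.*}

  gap=$((latest_minor - current_minor))

  [ "$current_major" -eq "$latest_major" ] && [ "$gap" -ge 0 ] && [ "$gap" -le 1 ]
}

# Illustrative version numbers only.
if is_supported_minor "4.5.16" "4.6.8"; then
  echo "supported"
else
  echo "upgrade required"
fi
```

Patch numbers are ignored on purpose, since the rule in the text only talks about minor versions.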

Provisioning a cluster

If you’re thinking of running a quick ARO lab in the Azure subscription that’s associated with your MSDN account, you might want to hold off on doing that. It is not as straightforward as you might think, so hear me out. I usually run most of my tests on an MSDN account, since they are great for testing. But take a look at what the docs for ARO say:

“Azure Red Hat OpenShift requires a minimum of 40 cores to create and run an OpenShift cluster. The default Azure resource quota for a new Azure subscription does not meet this requirement. To request an increase in your resource limit, see Standard quota: Increase limits by VM series.”

I can understand why this is the case: ARO’s selling point is that it comes as that atomic unit and that it is deployed according to a set of best practices. That’s fair, but it’s still important to keep in mind.
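Before requesting a quota increase, it helps to see where your subscription currently stands. The sketch below assumes the Azure CLI is installed and logged in, and that the Dsv3 quota entry is named `standardDSv3Family`. Both helper names are hypothetical, and the numbers in the example call are made up.

```shell
LOCATION=westeurope

# Hypothetical helper: lists current usage and limit for the Dsv3 family.
# Assumes the usage entry is named 'standardDSv3Family'.
show_dsv3_quota() {
  az vm list-usage --location "$LOCATION" \
    --query "[?contains(name.value, 'standardDSv3Family')]" \
    --output table
}

# Hypothetical helper: prints how many cores are missing (0 when there are enough).
cores_short() {
  limit="$1"
  used="$2"
  needed="$3"
  free=$((limit - used))
  if [ "$free" -ge "$needed" ]; then
    echo 0
  else
    echo $((needed - free))
  fi
}

# Made-up example: a limit of 10 cores with none in use, against the 40 required.
cores_short 10 0 40
```

Run `show_dsv3_quota` once you are logged in, then feed the limit and current usage it reports into `cores_short` to see how large an increase to request.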

Now you might think that you can minimize the hit to your wallet by provisioning a few B-series machines or spot VMs, or maybe even by lowering the master/worker node count to one of each kind. This is unfortunately not possible. According to Red Hat, they do not provide free trials or a PoC environment for ARO.

You must select one of the following VM series. Note that they all use premium managed disks:

- Master nodes:

  - Dsv3: 8 cores or above

- Worker nodes:

  - Dasv4: 4 cores or above

  - Dsv3: 4 cores or above

  - Esv3: 4 cores or above

  - Fsv2: 4 cores or above

At a minimum, you will need three master nodes, three worker nodes and a pay-as-you-go subscription (EA, CSP subscriptions work as well). Be sure to clean up your resources when you’re finished! 😊
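To put a number on that minimum, here is a quick back-of-the-envelope calculation using the smallest sizes listed above (8 cores per master, 4 cores per worker). The variable names are just for illustration.

```shell
# Smallest allowed node sizes and minimum node counts.
MASTER_COUNT=3
MASTER_CORES=8
WORKER_COUNT=3
WORKER_CORES=4

TOTAL_CORES=$((MASTER_COUNT * MASTER_CORES + WORKER_COUNT * WORKER_CORES))
echo "Steady-state vCPUs: $TOTAL_CORES"
```

That works out to 36 vCPUs for the running cluster, which fits within the 40-core requirement quoted from the docs.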

I have not seen any PowerShell equivalents for the commands I’m about to use. However, it is possible to use ARM templates to set up an ARO 4.x cluster, so you could combine that with a “New-AzDeployment”.

To quickly fire up a cluster, simply do the following:

# Register some providers, you can also do this via the Azure Portal.

az provider register -n Microsoft.RedHatOpenShift --wait

az provider register -n Microsoft.Compute --wait

az provider register -n Microsoft.Storage --wait

If you’re having issues registering the resource providers, chances are that you might not have the correct subscription type. I, for one, could not get this to work on an MSDN account. Fortunately, the kind people over at Aspex were gracious enough to lend me one of their subscriptions for my tests.
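If you are unsure whether the registration went through, you can ask Azure for the state of each provider. This is a minimal sketch that assumes the Azure CLI is installed and logged in. The `check_providers` helper name is hypothetical.

```shell
# Hypothetical helper: prints the registration state of each required provider.
check_providers() {
  for ns in Microsoft.RedHatOpenShift Microsoft.Compute Microsoft.Storage; do
    state=$(az provider show --namespace "$ns" --query registrationState --output tsv)
    echo "$ns: $state"
  done
}
```

Call `check_providers` after the register commands have finished. Each line should end in `Registered` before you continue.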

LOCATION=westeurope

RESOURCEGROUP=aro-rg

AROCLUSTERNAME=aro-cluster

AROVNETNAME=aro-vnet

AROSUBNETMASTER=aro-sn-master

AROSUBNETWORKER=aro-sn-worker

# Create the resource group

az group create --name $RESOURCEGROUP --location $LOCATION

# Provision a VNET

az network vnet create --resource-group $RESOURCEGROUP --name $AROVNETNAME --address-prefixes 10.0.0.0/22

# Provision two subnets, in said VNET

az network vnet subnet create --resource-group $RESOURCEGROUP --vnet-name $AROVNETNAME --name $AROSUBNETMASTER --address-prefixes 10.0.0.0/23 --service-endpoints Microsoft.ContainerRegistry

az network vnet subnet create --resource-group $RESOURCEGROUP --vnet-name $AROVNETNAME --name $AROSUBNETWORKER --address-prefixes 10.0.2.0/23 --service-endpoints Microsoft.ContainerRegistry

# Disable private link service network policies; required for the service to be able to connect to and manage the cluster.

az network vnet subnet update --resource-group $RESOURCEGROUP --vnet-name $AROVNETNAME --name $AROSUBNETMASTER --disable-private-link-service-network-policies true

# Provision your very own ARO cluster

# ❗️ Hold up a minute, don't use "az openshift" since those are commands used for managing OpenShift 3.x clusters.

az aro create --resource-group $RESOURCEGROUP --name $AROCLUSTERNAME --vnet $AROVNETNAME --master-subnet $AROSUBNETMASTER --worker-subnet $AROSUBNETWORKER

# No --pull-secret provided: cluster will not include samples or operators from Red Hat or certified partners.

# Running -
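The address ranges used above can be sanity-checked with a bit of arithmetic: the /22 VNET should be exactly large enough for the two /23 subnets. The `cidr_size` helper below is hypothetical and only counts addresses. It does not validate that the ranges actually nest.

```shell
# Hypothetical helper: number of addresses in an IPv4 prefix of the given length.
cidr_size() {
  echo $((1 << (32 - $1)))
}

VNET_SIZE=$(cidr_size 22)     # 10.0.0.0/22
SUBNET_SIZE=$(cidr_size 23)   # 10.0.0.0/23 and 10.0.2.0/23

echo "VNET: $VNET_SIZE addresses, each subnet: $SUBNET_SIZE addresses"
echo "Subnets that fit in the VNET: $((VNET_SIZE / SUBNET_SIZE))"
```

Keep in mind that Azure reserves five addresses in every subnet, so the usable count per subnet is slightly lower than the raw size.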

There are also several other parameters that you can use with “az aro create”. For instance, if you want to hook up this cluster to your Red Hat account and use Red Hat services such as Quay or the Red Hat Container Catalog, you will need to provide the pull secret, which can be found in the Red Hat cluster management portal.
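As a sketch of what that could look like: the snippet below builds the same “az aro create” command as before and adds `--pull-secret` only when a pull secret file is present. The file name `pull-secret.txt` and the `aro_create_command` helper are assumptions for illustration. The helper only prints the command, so nothing is provisioned by running it.

```shell
RESOURCEGROUP=aro-rg
AROCLUSTERNAME=aro-cluster
AROVNETNAME=aro-vnet
AROSUBNETMASTER=aro-sn-master
AROSUBNETWORKER=aro-sn-worker

# Hypothetical helper: prints the "az aro create" command line, adding
# --pull-secret only when the given file exists.
aro_create_command() {
  pull_secret_file="$1"

  set -- aro create \
    --resource-group "$RESOURCEGROUP" \
    --name "$AROCLUSTERNAME" \
    --vnet "$AROVNETNAME" \
    --master-subnet "$AROSUBNETMASTER" \
    --worker-subnet "$AROSUBNETWORKER"

  if [ -f "$pull_secret_file" ]; then
    # The @ prefix tells the Azure CLI to read the value from a file.
    set -- "$@" --pull-secret "@$pull_secret_file"
  fi

  # Dry run: print the command instead of executing it.
  echo az "$@"
}

# Assumed file name for the pull secret downloaded from the Red Hat portal.
aro_create_command pull-secret.txt
```

Once the printed command looks right, you can copy it into your shell to actually create the cluster.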

It’s worth taking a look at all the ‘az aro’ commands to see what they can do for you.

Once the cluster has been provisioned, you can do the following to connect to it. Since we did not specify that we wanted a private cluster API endpoint, we can connect to the endpoint over the internet. We will fetch the administrator credentials, as well as the web console URL.

az aro list-credentials --name $AROCLUSTERNAME --resource-group $RESOURCEGROUP

# {

# "kubeadminPassword": "1234-5678-1234-5678",

# "kubeadminUsername": "kubeadmin"

# }

az aro show --name $AROCLUSTERNAME --resource-group $RESOURCEGROUP --query "consoleProfile.url"

# "https://console-openshift-console.apps.abcdef.westeurope.aroapp.io/"

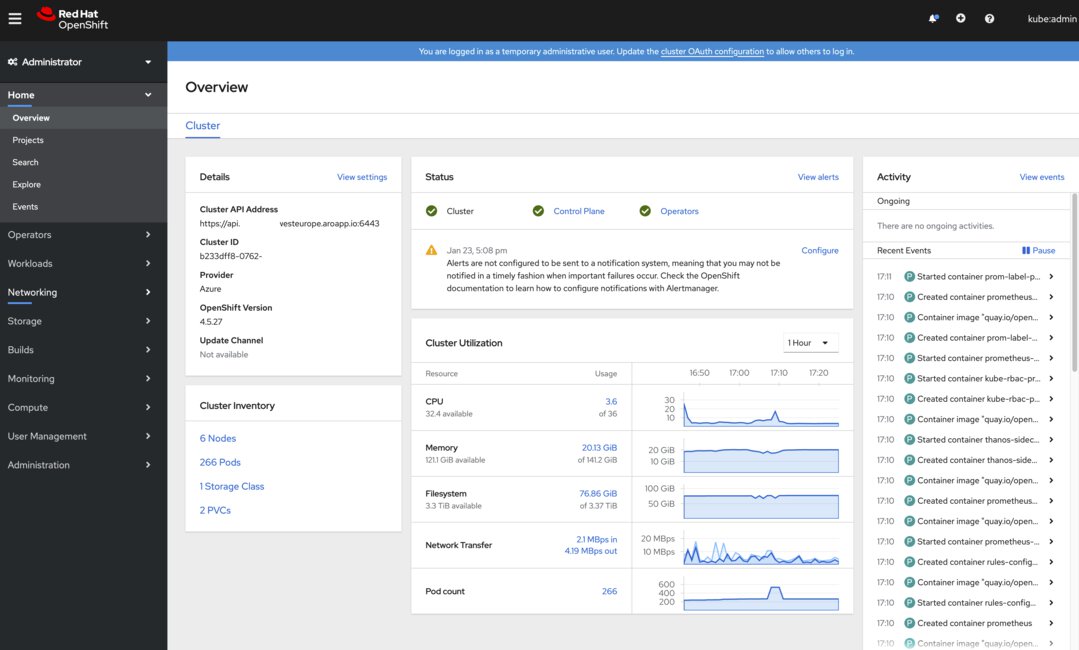

After entering the kubeadmin credentials, you will be presented with the web console.

If you are used to using kubectl, you’re in luck… Since OpenShift is a certified Kubernetes distribution, you can use kubectl to support your existing workflows! You can, however, gain extended functionality by using the OpenShift client or “oc” binary.

cd ~

wget https://mirror.openshift.com/pub/openshift-v4/clients/ocp/latest/openshift-client-linux.tar.gz

mkdir ~/openshift

tar -zxvf openshift-client-linux.tar.gz -C openshift

echo 'export PATH=$PATH:~/openshift' >> ~/.bashrc && source ~/.bashrc

apiServer=$(az aro show -g $RESOURCEGROUP -n $AROCLUSTERNAME --query apiserverProfile.url -o tsv)

oc login $apiServer -u kubeadmin -p 1234-5678-1234-5678
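If you would rather not paste the password by hand, the two lookups can be combined with the login. This is a minimal sketch, assuming both `az` and `oc` are on your PATH and you are logged in to Azure. The `aro_login` helper name is hypothetical.

```shell
# Hypothetical helper: fetches the API server URL and the kubeadmin password,
# then logs in with the OpenShift client.
aro_login() {
  resource_group="$1"
  cluster_name="$2"

  api_server=$(az aro show --resource-group "$resource_group" --name "$cluster_name" \
    --query apiserverProfile.url --output tsv)
  kubeadmin_password=$(az aro list-credentials --resource-group "$resource_group" \
    --name "$cluster_name" --query kubeadminPassword --output tsv)

  oc login "$api_server" --username kubeadmin --password "$kubeadmin_password"
}
```

With the variables from earlier, this would be called as `aro_login "$RESOURCEGROUP" "$AROCLUSTERNAME"`.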

Conclusion

There isn’t much to setting up an Azure Red Hat OpenShift cluster: just a couple of commands and you’re good to go. At first glance, I’d say that this is a nice approach and fits into the “single atomic unit” philosophy.

After an ARO deployment, you are left with a solid foundation and you don’t need to worry too much about the validity of the architecture. I suppose you could easily argue that additional options could be added to the deployment experience, such as N-series (GPU) worker nodes or the ability to use ultra SSDs on worker nodes.

By the way, should you need more control over your OpenShift environment on Azure, you can always manage it yourself! This can be done by deploying your own infrastructure, setting up OpenShift Container Platform and purchasing an OpenShift subscription via Red Hat. You could even swap out OCP and set up the community version of OpenShift, OKD, which includes most of OpenShift’s features except for Red Hat support!

One final thing, in case you’re wondering how you can test drive OpenShift 4.x itself and mess about with all the bells and whistles that ship with it: “CodeReady Containers” is a minimal and ephemeral OpenShift 4.x cluster, created by Red Hat, that you typically run on your local development machine. Give it a go if you want to familiarize yourself with OpenShift 4.x!
