Windows Containers: Azure Pipeline Agents with Entra Workload ID in Azure Kubernetes Service


Not long ago, a community member reached out with an intriguing question:

💬 “Can the steps in your blog for replacing the Azure DevOps Personal Access Token be applied to Azure Pipeline agents running in Windows containers as well?”

This is a great question! The original steps I shared were designed specifically for hosting Azure DevOps agents on Linux. While adapting them for Windows containers is absolutely doable, it does require some adjustments. In this post, I will guide you through that process step by step.

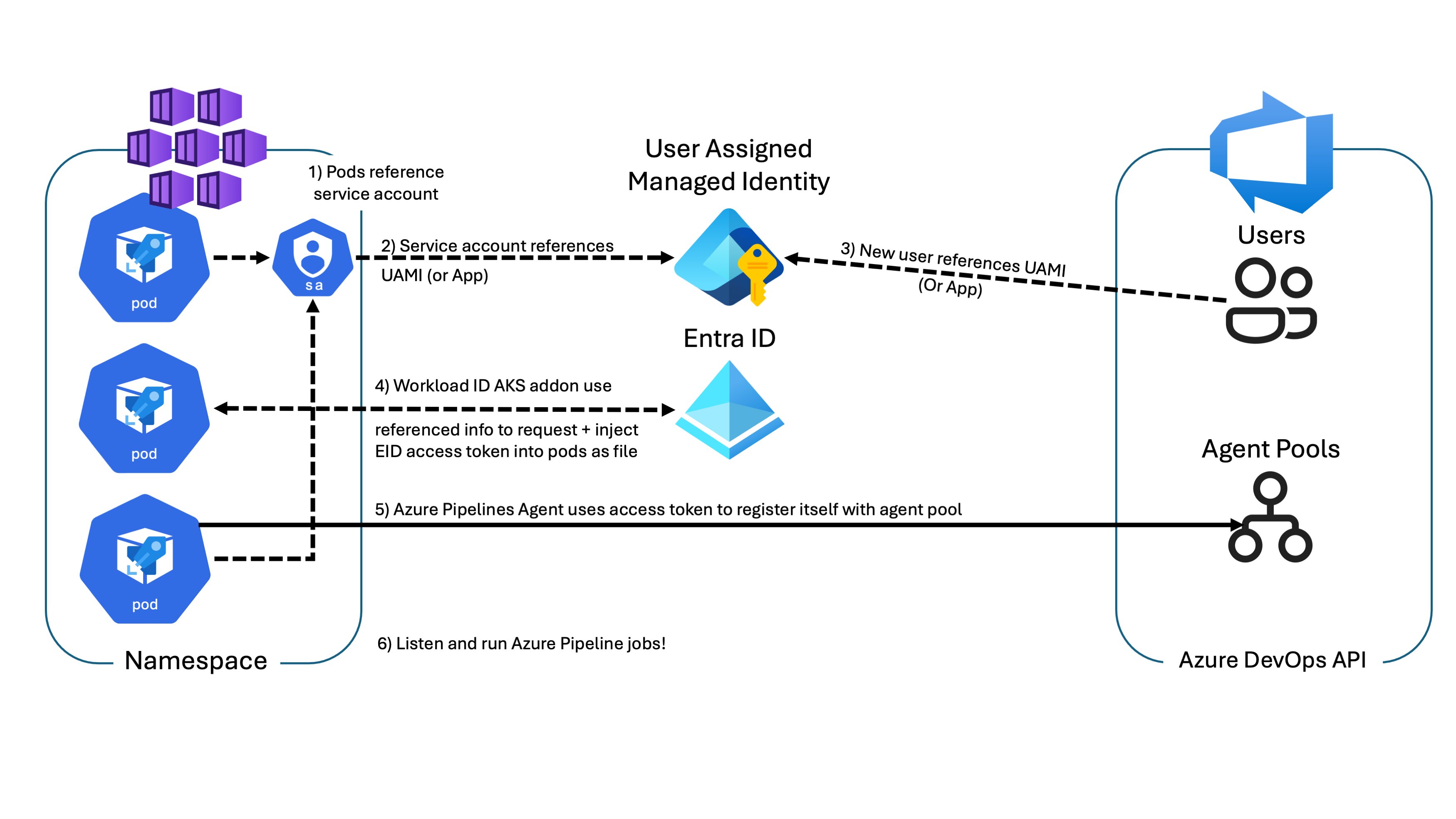

Think of this post as a streamlined adaptation of my in-depth blog post, "Register Azure Pipeline Agents using Entra Workload ID on Azure Kubernetes Service". If you’re looking for a deeper dive into the concepts discussed here, be sure to check out that post.

Why Azure Pipelines without PATs?

Until recently, the most commonly used method to connect self-hosted Azure Pipelines Agents was by using a Personal Access Token (PAT).

However, with the introduction of a new capability, it’s now possible to connect to Azure DevOps APIs using Entra ID service principals or Azure managed identities. They can be configured in Azure DevOps and assigned permissions to specific assets (projects, repos, pipelines), just like a regular user. This allows applications that use managed identities or service principals to interact with Azure DevOps APIs directly once they’ve obtained an access token, rather than relying on a PAT tied to a specific user account.

Self-hosted Azure Pipeline agents, especially when not using the “Azure Virtual Machine Scale Set agents” feature, have required securely storing a PAT. While this can be automated with secrets management tools like Azure Key Vault, accessed through managed identities, this approach isn’t without risks:

- If the PAT is compromised, it could lead to security breaches.

- PATs also require manual or scripted rotation to remain valid, which may increase operational complexity.

💡 Version 2 of the Azure Pipelines agent “uses the PAT token only during the initial configuration of the agent; if the PAT expires or needs to be renewed, no further changes are required by the agent.” I could not find a similar statement for version 3. See the docs for the v2 agent

As mentioned earlier, Azure DevOps now supports authentication through service principals and managed identities, which lets us do away with storing secrets altogether. By hosting Azure Pipelines agents in Azure Kubernetes Service (AKS) and leveraging the Entra Workload Identity feature, you can securely obtain an Entra ID access token via the OAuth 2.0 client credentials flow and then use it to access the Azure DevOps API.

Deploying Azure Pipelines on Windows Containers, without a PAT

This time, I’ll keep things concise since we’ve already explored these components in depth in my previous blog. And again, if you’re interested in a deeper dive, make sure to check out the Linux variant of this post, as it explains all these concepts in way more detail.

Fortunately, setting everything up won’t be too daunting. Let’s outline the key modifications needed to get everything up and running smoothly:

- Set up an Azure Kubernetes cluster with both a Linux Profile and a Windows Profile.

- Create a user node pool with Windows Server 2022 nodes.

- Update the Azure DevOps agent pool name to reflect the Windows-specific agents.

- Update the start.ps1 script of the Azure Pipelines agent for Windows containers.

- Verify the KEDA trigger to dynamically deploy Windows-based Azure Pipeline Agent containers.

Define Variables and Create a Resource Group

We’ll begin by defining some variables for our resource names and setting up a resource group to host the Azure Kubernetes Service (AKS) cluster:

export SUBSCRIPTION="$(az account show --query id --output tsv)"

export RESOURCE_GROUP="myResourceGroup"

export LOCATION="eastus"

export AKS_CLUSTER_NAME="myAKSCluster"

az group create --name "${RESOURCE_GROUP}" --location "${LOCATION}"

Fetch the Latest Kubernetes Version

We’ll grab the latest Kubernetes version available in your LOCATION.

💡 The KubernetesVersion differs from the AKS version, which is updated regularly with fixes, features, and component upgrades. To track AKS releases for your region and see when they might be rolled out next, be sure to check out the AKS release tracker.

export AKS_VERSION="$(az aks get-versions --location $LOCATION -o tsv --query "values[0].version")"

az aks get-versions --location $LOCATION -o table

# KubernetesVersion Upgrades

# ------------------- ----------------------

# 1.29.4 None available

# 1.29.2 1.29.4

# 1.28.9 1.29.2, 1.29.4

# 1.28.5 1.28.9, 1.29.2, 1.29.4

# 1.27.9 1.28.5, 1.28.9

# 1.27.7 1.27.9, 1.28.5, 1.28.9
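If you’d rather not rely on the ordering of the get-versions output, a small helper can pick the highest version itself. The sketch below is a hypothetical helper named latest_version; it assumes GNU sort (for the -V version ordering) and that you feed it one version per line, for example the KubernetesVersion column of the table above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the highest version read from stdin,
# one version per line. Relies on GNU sort's -V (version sort) option,
# so 1.10.0 correctly sorts after 1.9.0.
latest_version() {
  sort -V | tail -n 1
}

# Example with the KubernetesVersion column from the table above (prints 1.29.4).
printf '%s\n' 1.29.4 1.29.2 1.28.9 1.28.5 1.27.9 1.27.7 | latest_version
```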

Create the AKS Cluster

Next up, we create the AKS cluster, but this time around we ensure that we have the --windows-admin-username and --windows-admin-password flags set.

export AKS_CLUSTER_NAME="myAKSCluster"

az aks create --resource-group "${RESOURCE_GROUP}" \

--name "${AKS_CLUSTER_NAME}" \

--kubernetes-version "${AKS_VERSION}" \

--os-sku Ubuntu \

--node-vm-size Standard_D4_v5 \

--node-count 1 \

--enable-oidc-issuer \

--enable-workload-identity \

--generate-ssh-keys \

--windows-admin-username "azure" \

--windows-admin-password "replacePassword1234#"

Add a Windows Node Pool

The az aks create command does not create a Windows node pool on its own; you need to run az aks nodepool add for that, which in turn uses the Windows profile settings you provided when creating the cluster. So let’s tell AKS to create a Windows node pool and have it provision nodes using the Windows Server 2022 image.

📖 From the docs: “System node pools serve the primary purpose of hosting critical system pods such as CoreDNS and metrics-server. User node pools serve the primary purpose of hosting your application pods. However, application pods can be scheduled on system node pools if you wish to only have one pool in your AKS cluster. Every AKS cluster must contain at least one system node pool with at least two nodes.”

az aks nodepool add --resource-group "${RESOURCE_GROUP}" \

--cluster-name "${AKS_CLUSTER_NAME}" \

--name "win22" \

--os-type "Windows" \

--os-sku "Windows2022" \

--mode "User"

With a bit of patience, your Windows nodes will be provisioned and ready to use!
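The name "win22" is deliberately short: AKS limits Windows node pool names to six characters, and node pool names must start with a lowercase letter and contain only lowercase letters and digits. A minimal pre-flight check, written as a hypothetical helper, could look like this:

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check encoding the AKS naming rules for
# Windows node pools: 1 to 6 characters, lowercase letters and digits only,
# starting with a lowercase letter.
is_valid_windows_pool_name() {
  local name="$1"
  [ "${#name}" -ge 1 ] && [ "${#name}" -le 6 ] || return 1
  case "$name" in
    [![:lower:]]*) return 1 ;;            # must start with a lowercase letter
    *[![:lower:][:digit:]]*) return 1 ;;  # only lowercase letters and digits
  esac
  return 0
}

for candidate in win22 npwin win2022 Win22; do
  if is_valid_windows_pool_name "$candidate"; then
    echo "${candidate}: ok"
  else
    echo "${candidate}: invalid"
  fi
done
```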

Configure Entra Workload Identity

To ensure your pods can securely request access tokens, the next step is to set up a user-assigned managed identity or an Entra ID app registration. For simplicity, we’ll use a user-assigned managed identity.

export USER_ASSIGNED_IDENTITY_NAME="myIdentity"

az identity create --resource-group "${RESOURCE_GROUP}" \

--name "${USER_ASSIGNED_IDENTITY_NAME}" \

--location "${LOCATION}" \

--subscription "${SUBSCRIPTION}"

export USER_ASSIGNED_CLIENT_ID="$(az identity show --resource-group "${RESOURCE_GROUP}" --name "${USER_ASSIGNED_IDENTITY_NAME}" --query 'clientId' -o tsv)"

Next, we create a Kubernetes service account with the correct settings. Since we’re using a user-assigned identity, all we need is the correct value for the metadata.annotations.azure.workload.identity/client-id annotation: our user-assigned identity’s client ID.

💡 An overview of the service account annotations that can be configured for Microsoft Entra Workload ID on AKS can be found here.

export SERVICE_ACCOUNT_NAMESPACE="default"

export SERVICE_ACCOUNT_NAME="workload-identity-sa"

az aks get-credentials --name "${AKS_CLUSTER_NAME}" --resource-group "${RESOURCE_GROUP}"

cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: ServiceAccount
metadata:
  annotations:
    azure.workload.identity/client-id: "${USER_ASSIGNED_CLIENT_ID}"
  name: "${SERVICE_ACCOUNT_NAME}"
  namespace: "${SERVICE_ACCOUNT_NAMESPACE}"
EOF

Since we created our AKS cluster with the --enable-oidc-issuer and --enable-workload-identity flags, we should be able to set up our federated identity credential.

export FEDERATED_IDENTITY_CREDENTIAL_NAME="myFedIdentity"

export AKS_OIDC_ISSUER="$(az aks show --name "${AKS_CLUSTER_NAME}" --resource-group "${RESOURCE_GROUP}" --query "oidcIssuerProfile.issuerUrl" -o tsv)"

az identity federated-credential create --name ${FEDERATED_IDENTITY_CREDENTIAL_NAME} \

--identity-name "${USER_ASSIGNED_IDENTITY_NAME}" \

--resource-group "${RESOURCE_GROUP}" \

--issuer "${AKS_OIDC_ISSUER}" \

--subject system:serviceaccount:"${SERVICE_ACCOUNT_NAMESPACE}":"${SERVICE_ACCOUNT_NAME}" \

--audience "api://AzureADTokenExchange"
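The federated credential only works if its issuer, subject, and audience match the claims in the projected service account token that the workload identity webhook mounts into the pod (the file referenced by AZURE_FEDERATED_TOKEN_FILE). To check that, you can decode the token’s payload. The sketch below uses two hypothetical helpers, federated_subject and jwt_payload, and assumes GNU base64; it only decodes the payload and does not verify the token’s signature.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the subject the federated credential expects.
federated_subject() {
  printf 'system:serviceaccount:%s:%s' "$1" "$2"
}

# Hypothetical helper: decode the payload (second segment) of a JWT.
# JWTs use base64url without padding, so translate the alphabet back to
# standard base64 and restore the '=' padding before decoding.
jwt_payload() {
  local payload
  payload="$(printf '%s' "$1" | cut -d '.' -f 2 | tr '_-' '/+')"
  case $(( ${#payload} % 4 )) in
    2) payload="${payload}==" ;;
    3) payload="${payload}=" ;;
  esac
  printf '%s' "$payload" | base64 -d
}

# Inside an agent pod (assumption: the webhook injected the env var):
#   jwt_payload "$(cat "$AZURE_FEDERATED_TOKEN_FILE")"
# Compare "iss" with AKS_OIDC_ISSUER, "sub" with
# $(federated_subject default workload-identity-sa), and "aud" with
# api://AzureADTokenExchange.
```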

Create a ConfigMap and a Container Registry

To link your Azure Pipeline agents to the correct pool, create a ConfigMap with the pool name and Azure DevOps organization URL.

export AZP_ORGANIZATION="DevOpsOrg"

export AZP_URL="https://dev.azure.com/${AZP_ORGANIZATION}"

export AZP_POOL="aks-windows-pool"

kubectl create configmap azdevops \

--from-literal=AZP_URL="${AZP_URL}" \

--from-literal=AZP_POOL="${AZP_POOL}"

You’ll also need a container registry to store the custom Windows container image. Once again, we’ll simply use Azure Container Registry. We’ll also assign the AcrPull RBAC role to the cluster’s kubelet identity, which allows the cluster to pull images from the registry.

export AKS_KUBELETIDENTITY_OBJECT_ID="$(az aks show --name "${AKS_CLUSTER_NAME}" --resource-group "${RESOURCE_GROUP}" --query "identityProfile.kubeletidentity.objectId" -o tsv)"

export ACR_NAME="mysweetregistry"

az acr create --name "${ACR_NAME}" --resource-group "${RESOURCE_GROUP}" --sku "Standard"

export ACR_RESOURCE_ID="$(az acr show --name "${ACR_NAME}" --query "id" -o tsv)"

az role assignment create --role "Acrpull" \

--assignee-principal-type "ServicePrincipal" \

--assignee-object-id "${AKS_KUBELETIDENTITY_OBJECT_ID}" \

--scope "${ACR_RESOURCE_ID}"

Build and Push the Agent Image

The workflow described in the Azure DevOps documentation also remains largely the same, and the Dockerfile is unchanged. As the documentation says, “Save the following content to a file called C:\azp-agent-in-docker\azp-agent-windows.dockerfile”:

FROM mcr.microsoft.com/windows/servercore:ltsc2022

WORKDIR /azp/

COPY ./start.ps1 ./

CMD powershell .\start.ps1

💡 This is, of course, a very simple container image definition; you will most likely want to expand it to include more tools.

We need to make some adjustments to the start.ps1 file to expand its functionality. Here’s what will be updated:

- Add a check to ensure the AZURE_FEDERATED_TOKEN_FILE environment variable is present.

- Implement logic to fetch an access token for the Azure DevOps REST API.

- Replace the AZP_TOKEN value with the token obtained from the REST API response.

- Include the --once flag to ensure the container self-terminates after completing a job.

💡 A quick heads-up on Invoke-RestMethod -UseBasicParsing: this parameter is deprecated. Starting with PowerShell 6.0.0, all web requests use basic parsing by default, and the parameter is retained solely for backward compatibility. Since Windows PowerShell 5.1 remains the default on Windows images, including the flag is not a bad idea: it tells the cmdlet to use the response object for HTML content without Document Object Model (DOM) parsing.

function Print-Header ($header) {

Write-Host "`n${header}`n" -ForegroundColor Cyan

}

if (-not (Test-Path Env:AZP_URL)) {

Write-Error "error: missing AZP_URL environment variable"

exit 1

}

# Fetch an Azure DevOps access token and

# write it to environment variable AZP_TOKEN

if (-not (Test-Path Env:AZURE_FEDERATED_TOKEN_FILE)) {

Write-Error "error: missing AZURE_FEDERATED_TOKEN_FILE environment variable"

exit 1

}

$identity_token = Get-Content -Path $env:AZURE_FEDERATED_TOKEN_FILE

$token_response = Invoke-RestMethod -Method POST `

-UseBasicParsing `

-Uri ("{0}{1}/oauth2/v2.0/token" -f $env:AZURE_AUTHORITY_HOST, $env:AZURE_TENANT_ID) `

-ContentType "application/x-www-form-urlencoded" `

-Body @{

grant_type = "client_credentials"

client_id = $env:AZURE_CLIENT_ID

scope = "499b84ac-1321-427f-aa17-267ca6975798/.default"

client_assertion_type = "urn:ietf:params:oauth:client-assertion-type:jwt-bearer"

client_assertion = $identity_token

}

$env:AZP_TOKEN = $token_response.access_token

if (-not (Test-Path Env:AZP_TOKEN_FILE)) {

if (-not (Test-Path Env:AZP_TOKEN)) {

Write-Error "error: missing AZP_TOKEN environment variable"

exit 1

}

$Env:AZP_TOKEN_FILE = "\azp\.token"

$Env:AZP_TOKEN | Out-File -FilePath $Env:AZP_TOKEN_FILE

}

Remove-Item Env:AZP_TOKEN

if ((Test-Path Env:AZP_WORK) -and -not (Test-Path $Env:AZP_WORK)) {

New-Item $Env:AZP_WORK -ItemType directory | Out-Null

}

New-Item "\azp\agent" -ItemType directory | Out-Null

# Let the agent ignore the token env variables

$Env:VSO_AGENT_IGNORE = "AZP_TOKEN,AZP_TOKEN_FILE"

Set-Location agent

Print-Header "1. Determining matching Azure Pipelines agent..."

$base64AuthInfo = [Convert]::ToBase64String([Text.Encoding]::ASCII.GetBytes(":$(Get-Content ${Env:AZP_TOKEN_FILE})"))

$package = Invoke-RestMethod -Headers @{Authorization = ("Basic $base64AuthInfo") } "$(${Env:AZP_URL})/_apis/distributedtask/packages/agent?platform=win-x64&`$top=1"

$packageUrl = $package[0].Value.downloadUrl

Write-Host $packageUrl

Print-Header "2. Downloading and installing Azure Pipelines agent..."

$wc = New-Object System.Net.WebClient

$wc.DownloadFile($packageUrl, "$(Get-Location)\agent.zip")

Expand-Archive -Path "agent.zip" -DestinationPath "\azp\agent"

try {

Print-Header "3. Configuring Azure Pipelines agent..."

.\config.cmd --unattended --agent "$(if (Test-Path Env:AZP_AGENT_NAME) { ${Env:AZP_AGENT_NAME} } else { hostname })" --url "$(${Env:AZP_URL})" --auth PAT --token "$(Get-Content ${Env:AZP_TOKEN_FILE})" --pool "$(if (Test-Path Env:AZP_POOL) { ${Env:AZP_POOL} } else { 'Default' })" --work "$(if (Test-Path Env:AZP_WORK) { ${Env:AZP_WORK} } else { '_work' })" --replace

Print-Header "4. Running Azure Pipelines agent..."

.\run.cmd --once

}

finally {

Print-Header "Cleanup. Removing Azure Pipelines agent..."

.\config.cmd remove --unattended --auth PAT --token "$(Get-Content ${Env:AZP_TOKEN_FILE})"

}

Run the following command from within that directory to build the container image.

docker build --tag "${ACR_NAME}.azurecr.io/azp-agent:windows" --file "./azp-agent-windows.dockerfile" .

Now push the image to the container registry. The quickest way to do this, I think, is:

az acr login --name "${ACR_NAME}"

docker push "${ACR_NAME}.azurecr.io/azp-agent:windows"

Create an Azure DevOps Agent Pool

While you can do this through the UI, we’ll use the CLI here. Let’s start by installing the Azure DevOps CLI, which is an extension to the cross-platform Azure CLI, az.

az extension add --name azure-devops

az extension show --name azure-devops

You can log in to Azure DevOps via the CLI extension in several ways:

- Log in with your Azure user credentials, using az login.

- Log in with your Azure DevOps user’s PAT, using az devops login --organization.

🔥 The Azure DevOps extension does not currently support authenticating with Managed Identities.

az devops configure --defaults organization=${AZP_URL}

az login

# Alternatively

az devops login --organization ${AZP_URL}

We’ll assign the user-assigned managed identity a basic Azure DevOps license by creating a “user entitlement”.

export USER_ASSIGNED_OBJECT_ID="$(az identity show --resource-group "${RESOURCE_GROUP}" --name "${USER_ASSIGNED_IDENTITY_NAME}" --query 'principalId' -o tsv)"

cat << EOF > serviceprincipalentitlements.json

{

"accessLevel": {

"accountLicenseType": "express"

},

"projectEntitlements": [],

"servicePrincipal": {

"displayName": "${USER_ASSIGNED_IDENTITY_NAME}",

"originId": "${USER_ASSIGNED_OBJECT_ID}",

"origin": "aad",

"subjectKind": "servicePrincipal"

}

}

EOF

export ADO_USER_ID="$(az devops invoke \

--http-method POST \

--organization "${AZP_URL}" \

--area MemberEntitlementManagement \

--resource ServicePrincipalEntitlements \

--api-version 7.2-preview \

--in-file serviceprincipalentitlements.json \

--query "operationResult.result.id" \

--output tsv)"

Next, we create the agent pool itself. The autoProvision setting ensures that all projects have access to this agent pool.

⚠️ The “recommendations to secure shared infrastructure in Azure Pipelines” docs page states that Microsoft recommends having separate agent pools for each project.

cat << EOF > pool.json

{

"name": "${AZP_POOL}",

"autoProvision": true

}

EOF

export AZP_AGENT_POOL_ID="$(az devops invoke \

--http-method POST \

--organization "${AZP_URL}" \

--area distributedtask \

--resource pools \

--api-version 7.1 \

--in-file pool.json \

--query "id" \

--output tsv)"

The full JSON response, before the --query filter extracts the id, looks like this:

{

"agentCloudId": null,

"autoProvision": true,

"autoSize": true,

"autoUpdate": true,

"continuation_token": null,

"createdBy": {},

"createdOn": "2024-11-28T22:45:00.387Z",

"id": 123,

"isHosted": false,

"isLegacy": false,

"name": "aks-windows-pool",

"options": "none",

"owner": {},

"poolType": "automation",

"properties": {},

"scope": "00000000-1111-1111-0000-000000000000",

"size": 0,

"targetSize": null

}

Next, we assign the Administrator role on the newly created agent pool to the managed identity’s Azure DevOps identity (ADO_USER_ID). You can assign users to various security roles, such as Reader, Service Account, or Administrator, each with specific permissions and responsibilities within the organization or project.

cat << EOF > roleassignments.json

[

{

"userId": "${ADO_USER_ID}",

"roleName": "Administrator"

}

]

EOF

az devops invoke \

--http-method PUT \

--organization "${AZP_URL}" \

--area securityroles \

--resource roleassignments \

--route-parameters scopeId="distributedtask.agentpoolrole" resourceId="${AZP_AGENT_POOL_ID}" \

--api-version 7.2-preview \

--in-file roleassignments.json

It seems there’s a bug preventing the command from executing correctly when using this specific --area and --resource, but a workaround has been suggested here. For the time being, you can perform this action using the Azure DevOps REST API or via the web interface, in the newly created agent pool’s security settings.

PUT /{{AZP_ORGANIZATION}}/_apis/securityroles/scopes/distributedtask.agentpoolrole/roleassignments/resources/{{AZP_AGENT_POOL_ID}}?api-version=7.2-preview.1 HTTP/1.1

Host: dev.azure.com

Authorization: Basic username:PAT

Content-Type: application/json

[

{

"userId": "{{ADO_USER_ID}}",

"roleName": "Administrator"

}

]
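Keep in mind that HTTP Basic authentication expects the credentials base64-encoded as username:password, so the Authorization header above is a placeholder rather than a literal value. For Azure DevOps, the PAT goes in the password position and the username may be left empty, which is also what start.ps1 does. A hypothetical helper to build the header value:

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the value of an HTTP Basic Authorization header,
# i.e. "Basic " followed by base64("username:secret").
basic_auth_header() {
  local user="$1" secret="$2"
  # Some base64 implementations wrap long output, so strip any newlines.
  printf 'Basic %s' "$(printf '%s:%s' "$user" "$secret" | base64 | tr -d '\n')"
}

# Example usage (PAT is a hypothetical variable holding your token):
#   curl --request PUT \
#     --header "Authorization: $(basic_auth_header '' "$PAT")" \
#     --header "Content-Type: application/json" \
#     --data @roleassignments.json \
#     "https://dev.azure.com/${AZP_ORGANIZATION}/_apis/securityroles/scopes/distributedtask.agentpoolrole/roleassignments/resources/${AZP_AGENT_POOL_ID}?api-version=7.2-preview.1"
```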

The response you receive after adding a user to the security role contains:

{

"continuation_token": null,

"count": 1,

"value": [

{

"access": "assigned",

"accessDisplayName": "Assigned",

"identity": {

"displayName": "myIdentity",

"id": "12345678-0000-0000-0000-000000000000",

"uniqueName": "myIdentity"

},

"role": {

"allowPermissions": 27,

"denyPermissions": 0,

"description": "Administrator can administer, manage, view and use agent pools.",

"displayName": "Administrator",

"identifier": "distributedtask.agentpoolrole.Administrator",

"name": "Administrator",

"scope": "distributedtask.agentpoolrole"

}

}

]

}

Deploy the Azure DevOps Agents

The following Kubernetes deployment runs 3 agent pods using the custom image. The nodeSelector on kubernetes.io/os: windows ensures the pods are scheduled on the Windows nodes rather than on the Linux system node pool.

cat <<EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: azdevops-deployment
  labels:
    app: azdevopsagent-windows
spec:
  replicas: 3
  selector:
    matchLabels:
      app: azdevopsagent-windows
  template:
    metadata:
      labels:
        azure.workload.identity/use: "true"
        app: azdevopsagent-windows
    spec:
      serviceAccountName: workload-identity-sa
      nodeSelector:
        kubernetes.io/os: windows
      containers:
      - name: azuredevopsagent
        image: "${ACR_NAME}.azurecr.io/azp-agent:windows"
        imagePullPolicy: Always
        env:
        - name: AZP_URL
          valueFrom:
            configMapKeyRef:
              name: azdevops
              key: AZP_URL
        - name: AZP_POOL
          valueFrom:
            configMapKeyRef:
              name: azdevops
              key: AZP_POOL
        resources:
          limits:
            memory: 1024Mi
            cpu: 500m
EOF

The container logs should now display the Azure Pipelines banner, along with a confirmation message indicating a successful connection to the Azure DevOps service. This process is virtually identical to the Linux setup.

1. Determining matching Azure Pipelines agent...

https://vstsagentpackage.azureedge.net/agent/4.248.0/vsts-agent-win-x64-4.248.0.zip

2. Downloading and installing Azure Pipelines agent...

3. Configuring Azure Pipelines agent...

___ ______ _ _ _

/ _ \ | ___ (_) | (_)

/ /_\ \_____ _ _ __ ___ | |_/ /_ _ __ ___| |_ _ __ ___ ___

| _ |_ / | | | '__/ _ \ | __/| | '_ \ / _ \ | | '_ \ / _ \/ __|

| | | |/ /| |_| | | | __/ | | | | |_) | __/ | | | | | __/\__ \

\_| |_/___|\__,_|_| \___| \_| |_| .__/ \___|_|_|_| |_|\___||___/

| |

agent v4.248.0 |_| (commit 4dd8b81)

>> Connect:

Connecting to server ...

>> Register Agent:

Scanning for tool capabilities.

Connecting to the server.

Successfully added the agent

Testing agent connection.

2024-11-29 17:47:20Z: Settings Saved.

4. Running Azure Pipelines agent...

Scanning for tool capabilities.

Connecting to the server.

2024-11-29 17:47:33Z: Listening for Jobs

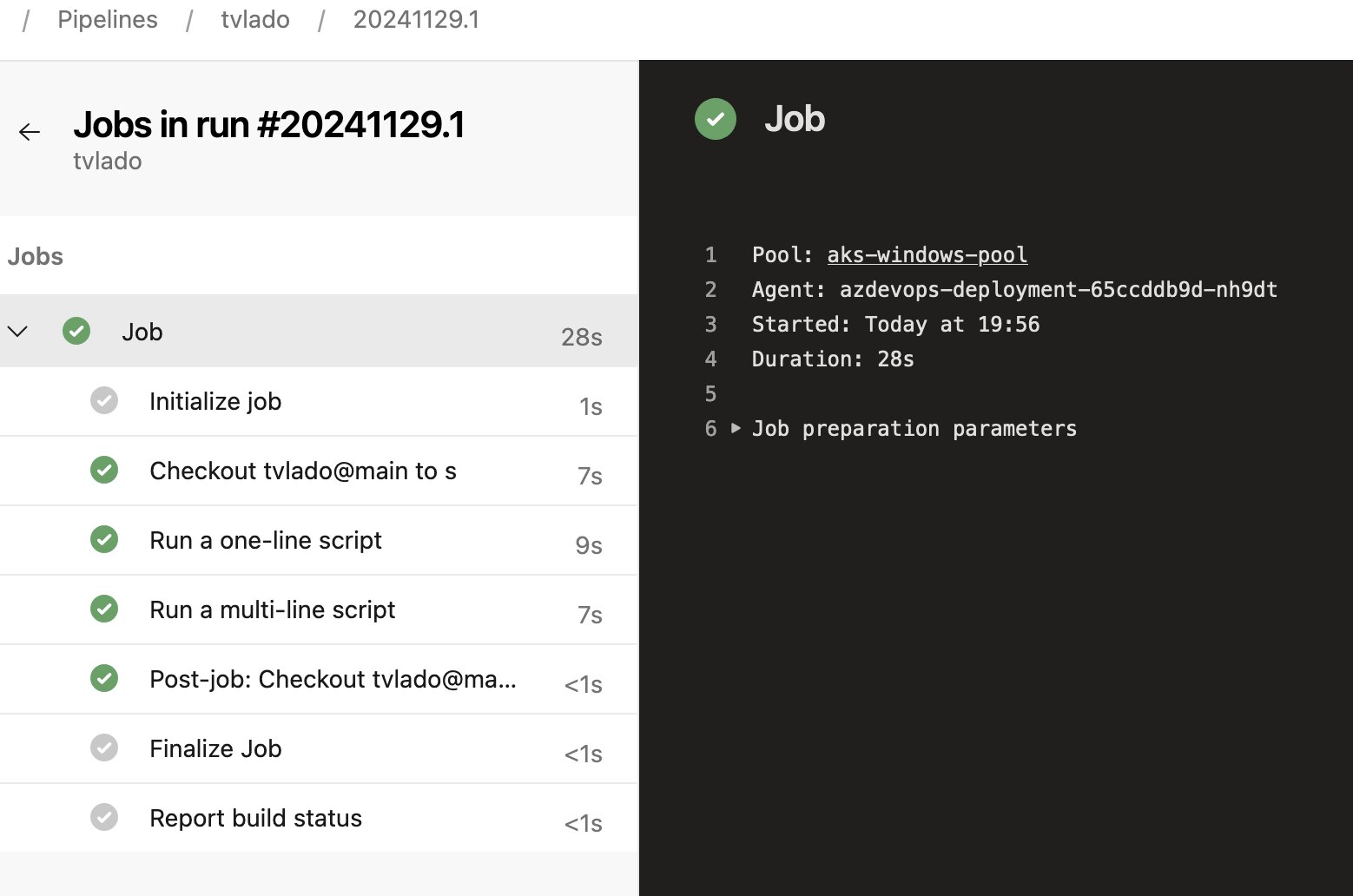
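Rather than tailing the logs by hand, you could poll them until the agent reports that it is listening. The sketch below is a hypothetical helper, wait_for_log_line, that reruns any command producing log output and succeeds as soon as the given text appears; the retry count and delay in the example are arbitrary.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run a command up to <attempts> times, waiting <delay>
# seconds between tries, until its output contains <pattern>.
# Usage: wait_for_log_line <pattern> <attempts> <delay> <command> [args...]
wait_for_log_line() {
  local pattern="$1" attempts="$2" delay="$3" i=1
  shift 3
  while [ "$i" -le "$attempts" ]; do
    if "$@" 2>/dev/null | grep -q -- "$pattern"; then
      return 0
    fi
    # Only sleep when another attempt follows.
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  return 1
}

# Example against the deployment above (requires kubectl access to the cluster):
#   wait_for_log_line 'Listening for Jobs' 30 10 \
#     kubectl logs deployment/azdevops-deployment && echo "Agent is ready"
```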

Next, you can trigger an Azure DevOps pipeline and ensure that the pool property is set to aks-windows-pool. Here’s the YAML pipeline configuration to get started. If it’s your first time running the pipeline, you may need to approve the use of the agent pool:

trigger:
- main

pool: "aks-windows-pool"

steps:
- script: echo Hello, world!
  displayName: 'Run a one-line script'
- script: |
    echo Add other tasks to build, test, and deploy your project.
    echo See https://aka.ms/yaml
  displayName: 'Run a multi-line script'

Once the pipeline job is queued, you should see the logs confirming the agent is running and processing the job.

2024-11-29 17:47:33Z: Listening for Jobs

2024-11-29 18:56:04Z: Running job: Job

2024-11-29 18:56:39Z: Job Job completed with result: Succeeded

Cleanup. Removing Azure Pipelines agent...

Removing agent from the server

Connecting to server ...

Succeeded: Removing agent from the server

Removing .credentials

Succeeded: Removing .credentials

Removing .agent

Succeeded: Removing .agent

Stream closed EOF for default/azdevops-deployment-65ccddb9d-nh9dt (azuredevopsagent)

And in the Azure DevOps portal, you’ll see that the pipeline executed successfully. The logs will show the progress and completion of the tasks, confirming that everything is working as expected.

KEDA triggers for Azure Pipelines on Windows Containers?

Even though we are running Windows container workloads on AKS, we can still use KEDA (Kubernetes Event-Driven Autoscaling) to dynamically scale those agents. While KEDA’s core components run exclusively on Linux, it can instruct Kubernetes to deploy additional Windows workloads as needed.

System Node Pool Gotcha

Does that mean we need a Linux user node pool?

Well, while it’s possible to use a separate Linux user node pool, AKS automatically creates a Linux system node pool when you provision a cluster, and that system node pool can host KEDA. Although system node pools are primarily designed to host critical system pods, for this demo we will let KEDA deploy itself to the Linux system node pool.

AKS automatically assigns the label kubernetes.azure.com/mode: system to the nodes in the system node pool, which signals that system pods should preferably be scheduled on those nodes. However, because it is only a label, it does not prevent application pods from being scheduled on system node pools. In our case, KEDA will be deployed to the only available Linux node pool. If you want to prevent application pods from being scheduled on system node pools, you can apply the CriticalAddonsOnly=true:NoSchedule taint when you create the node pool.

Installing KEDA

To install KEDA, you can use the following Helm command. We’ll reuse the user-assigned managed identity we created for demonstration purposes. You have the option to use a different managed identity or application, depending on your setup.

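# Add the KEDA Helm repository (skip if you have already added it)

helm repo add kedacore https://kedacore.github.io/charts

helm repo update

# Install KEDA using Helm

helm install keda kedacore/keda --namespace keda \

--create-namespace \

--set podIdentity.azureWorkload.enabled=true \

--set podIdentity.azureWorkload.clientId="${USER_ASSIGNED_CLIENT_ID}"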

💡 If you want to configure tolerations for KEDA, you can set them using the resources.tolerations property in the KEDA values.yaml file.

Configure the azure-pipelines trigger

For KEDA to scale based on the number of triggered pipeline runs, it needs to authenticate with Azure and obtain an Entra ID access token. To achieve this, you’ll need to create a new federated credential. Note that the subject field for the federated credential must be set to system:serviceaccount:keda:keda-operator because the KEDA operator uses a different service account in a different namespace. This federated credential setup is necessary for KEDA to interact with the Azure DevOps REST API securely and monitor for pipeline runs.

az identity federated-credential create --name "keda-operator" \

--identity-name "${USER_ASSIGNED_IDENTITY_NAME}" \

--resource-group "${RESOURCE_GROUP}" \

--issuer "${AKS_OIDC_ISSUER}" \

--subject "system:serviceaccount:keda:keda-operator" \

--audience "api://AzureADTokenExchange"

Each TriggerAuthentication is defined within a specific namespace and can only be used by a ScaledObject in the same namespace. To authenticate using the azure-workload provider, specify it in the TriggerAuthentication definition.

cat <<EOF | kubectl apply -f -
---
apiVersion: keda.sh/v1alpha1
kind: TriggerAuthentication
metadata:
  name: pipeline-trigger-auth
  namespace: default
spec:
  podIdentity:
    provider: azure-workload
EOF

We can reference the TriggerAuthentication in the ScaledObject resource using spec.triggers[*].authenticationRef.name.

cat <<EOF | kubectl apply -f -
---
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: azure-pipelines-scaledobject
  namespace: default
spec:
  scaleTargetRef:
    name: azdevops-deployment
  minReplicaCount: 1
  maxReplicaCount: 5
  triggers:
  - type: azure-pipelines
    metadata:
      poolName: "${AZP_POOL}"
      organizationURLFromEnv: "AZP_URL"
    authenticationRef:
      name: pipeline-trigger-auth
EOF

⚠️ Be mindful of the maximum number of parallel jobs you can trigger. For the latest limits, consult the Azure DevOps documentation.
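To reason about how many agents KEDA will start, a simplified model helps: the azure-pipelines scaler reports the number of pending jobs for the pool, and the Horizontal Pod Autoscaler derives a replica count of roughly ceil(pending jobs / target), clamped to minReplicaCount and maxReplicaCount. The target is the scaler’s targetPipelinesQueueLength setting, which I believe defaults to 1. The hypothetical desired_replicas function below captures only that arithmetic and ignores HPA tolerances, stabilization windows, and scaling policies.

```shell
#!/usr/bin/env bash
# Simplified model (assumption): desired = ceil(pending / target),
# clamped to [min, max]. Real HPA behaviour adds tolerances,
# stabilization windows and scaling policies on top of this.
# Usage: desired_replicas <pending_jobs> <target_per_replica> <min> <max>
desired_replicas() {
  local pending="$1" target="$2" min="$3" max="$4" desired
  # Integer ceiling division.
  desired=$(( (pending + target - 1) / target ))
  if [ "$desired" -lt "$min" ]; then
    desired=$min
  fi
  if [ "$desired" -gt "$max" ]; then
    desired=$max
  fi
  echo "$desired"
}

# Using the ScaledObject above (minReplicaCount 1, maxReplicaCount 5):
desired_replicas 0 1 1 5   # prints 1
desired_replicas 3 1 1 5   # prints 3
desired_replicas 12 1 1 5  # prints 5
```

In this model, anything beyond five pending jobs simply waits in the queue, which is also where the parallel job limits mentioned above come into play.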

Tangent: Azure DevOps Scopes

While revisiting this topic, I stumbled upon a Microsoft DevBlog titled “New Azure DevOps scopes now available for Microsoft Identity OAuth delegated flow apps” and, at first, I misunderstood it. I thought it meant I could narrow the scope of the requested access token, which is usually a good practice.

Developers are encouraged to specify the exact scopes they require from users. Fortunately, the Azure DevOps team maintains a list of OBO scopes that can be referenced.

But then I realized we’re not using a delegated (OBO) authentication flow; we’re using the client credentials flow.

The client credentials grant flow lets a web service (confidential client) authenticate with its own credentials, rather than impersonating a user. This flow is ideal for server-to-server interactions, often referred to as daemons or service accounts, which run in the background without user involvement.

As a result, we must keep the scope set to "499b84ac-1321-427f-aa17-267ca6975798/.default" so Entra ID grants us an access token for the Azure DevOps Services REST API.
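For comparison, here is a rough bash equivalent of the token request that start.ps1 sends, which can be handy for testing the client credentials flow from a Linux pod with curl installed. It assumes the environment variables injected by the workload identity webhook (AZURE_AUTHORITY_HOST, AZURE_TENANT_ID, AZURE_CLIENT_ID, and AZURE_FEDERATED_TOKEN_FILE); the function names are hypothetical, and the scope is the same Azure DevOps resource ID used above.

```shell
#!/usr/bin/env bash
# Azure DevOps resource ID, as used in start.ps1.
AZDO_RESOURCE_ID="499b84ac-1321-427f-aa17-267ca6975798"

# Hypothetical helper: token endpoint URL. Like start.ps1, this assumes
# AZURE_AUTHORITY_HOST already ends with a trailing slash.
token_endpoint() {
  printf '%s%s/oauth2/v2.0/token' "$AZURE_AUTHORITY_HOST" "$AZURE_TENANT_ID"
}

# Hypothetical helper: exchange the projected service account token for an
# Entra ID access token (client credentials flow with a JWT client assertion).
# --data-urlencode sends a form-encoded POST body.
request_azdo_token() {
  curl --silent --show-error --fail \
    --request POST "$(token_endpoint)" \
    --data-urlencode "grant_type=client_credentials" \
    --data-urlencode "client_id=${AZURE_CLIENT_ID}" \
    --data-urlencode "scope=${AZDO_RESOURCE_ID}/.default" \
    --data-urlencode "client_assertion_type=urn:ietf:params:oauth:client-assertion-type:jwt-bearer" \
    --data-urlencode "client_assertion=$(cat "$AZURE_FEDERATED_TOKEN_FILE")"
}

# The JSON response carries the token in its access_token field, e.g.:
#   request_azdo_token | jq -r '.access_token'
```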

Final Thoughts

That was a straightforward switch to Windows containers, if you ask me! 😎

I was able to reuse much of the code I had written previously and let Kubernetes, Entra Workload Identity on AKS, and federated identity handle the heavy lifting. However, I want to emphasize that this approach is not a one-size-fits-all solution. While workload identity is a substantial improvement for authenticating Azure resources, it’s not immune to risks. These tokens can still be captured and misused if not properly secured.

Thankfully, you can mitigate these risks with Entra ID Workload Identity Premium, along with conditional access policies and continuous access evaluation. These tools help enhance security and ensure more robust protection for your workloads.
