Azure Confidential Computing: IaaS


It’s been a while since I explored the intriguing universe of confidential computing. Before diving into the subject, I was under the impression that confidential computing was something you could toggle at the VM level to magically make everything more secure. Upon closer inspection, however, I began to realise that this was not entirely the case: confidential computing exists to protect data while it is being processed.

A lot of fantastic progress has been made in the confidential computing space since I wrote my initial post. At Ignite 2020, held in September of that year, we got a glimpse of some of the new developments surrounding confidential computing. By the time Ignite 2021 came around, a few of those developments had made it through their preview phases and become generally available for everyone to use.

I figured I’d write down some of the new features that have been added since 2020; I hope it proves to be of some use.

Recap: Why Confidential Computing

Microsoft Azure has offered protection and built-in encryption safeguards on its platform for many years. If you have read up on encryption in Azure, you may have learnt that it fully supports these types of encryption:

- Encryption at rest
  - Prevents data from being obtained in an unencrypted form in the event of an attack.
  - Used in services such as Azure Disk Encryption, Azure SQL Database, Azure Storage accounts, etc.
- Encryption in transit (E2E)
  - Prevents data from being sent in clear text over public or private networks.
  - Typically implemented with the TLS protocol (or its predecessor, SSL).

Data, on the other hand, can exist in three states:

- Data in storage is “at rest”.

- Data traversing the network is “in transit”.

- Data being processed is “in use”.

And so we could say that there should also be another type of encryption:

- Encryption when “in use”.

Make no mistake, the existing mechanisms that protect data in transit and at rest remain must-haves. However, on their own they are often no longer enough for use cases where (very) sensitive data is being processed. Even when you’re diligently keeping your environments up to date with all of the latest governance and security recommendations that Microsoft has on offer, protecting sensitive data while it is being processed might still be worth considering.

How do we go about doing this? A basic security principle gives us a clue as to how we can get started: keep your attack surface small. (Easier said than done, I wholeheartedly agree.) Fortunately for us, Azure Confidential Computing aims to shrink the trusted computing base for cloud workloads by offering trusted execution environments.

📖 From the docs: “The trusted computing base (TCB) refers to all of a system’s hardware, firmware, and software components that provide a secure environment. The components inside the TCB are considered “critical”. If one component inside the TCB is compromised, the entire system’s security may be jeopardized. A lower TCB means higher security.”

A trusted execution environment (TEE), a.k.a. an enclave, is a tamper-resistant environment that provides us with security assurances. The range of assurances depends on what ships with the TEE, but you will typically see at least the following:

- Data integrity
  - Prevents unauthorized entities from modifying data inside of an enclave.
- Code integrity
  - Prevents unauthorized entities from modifying code inside of an enclave.
- Data confidentiality
  - Prevents unauthorized entities from viewing data as it is being processed inside of an enclave.

💡 “Keep the attack surface small!” Hardware-based TEEs can remove the operating system, (cloud) platform and service providers, along with their administrators, from the list of parties that your company places trust in. Doing so can potentially reduce the risk of a security breach or compromise.

What’s on the menu?

For a while now, the Azure platform has offered several options for those who require confidential computing capabilities. Microsoft has published a few successful customer cases that highlight some of the things that are possible with confidential computing:

- Signal uses parts of Azure confidential computing to provide a scalable and secure environment for its messenger app.
- Platforms that generate insights without giving anyone access to individual customer datasets or groups of them:
  - The University of California, San Francisco (UCSF), along with Fortanix, Intel, and Microsoft, is building a privacy-preserving analytics platform to help accelerate AI in health care.
  - In a similar vein, the Royal Bank of Canada has created a privacy-preserving platform for multiparty data sharing and collective insight generation.

What exactly does Azure have on offer when it comes to confidential computing? As with many other offerings on the platform, you decide how much control you want and how much responsibility you are willing to take on. In practice, this means you can pick from a few platform-as-a-service (PaaS) or infrastructure-as-a-service (IaaS) offerings. To round things off, Microsoft is also investing in developer tools, many of which are open source. You can use these tools to power the development of your next big project.

“IaaS is a ladder”

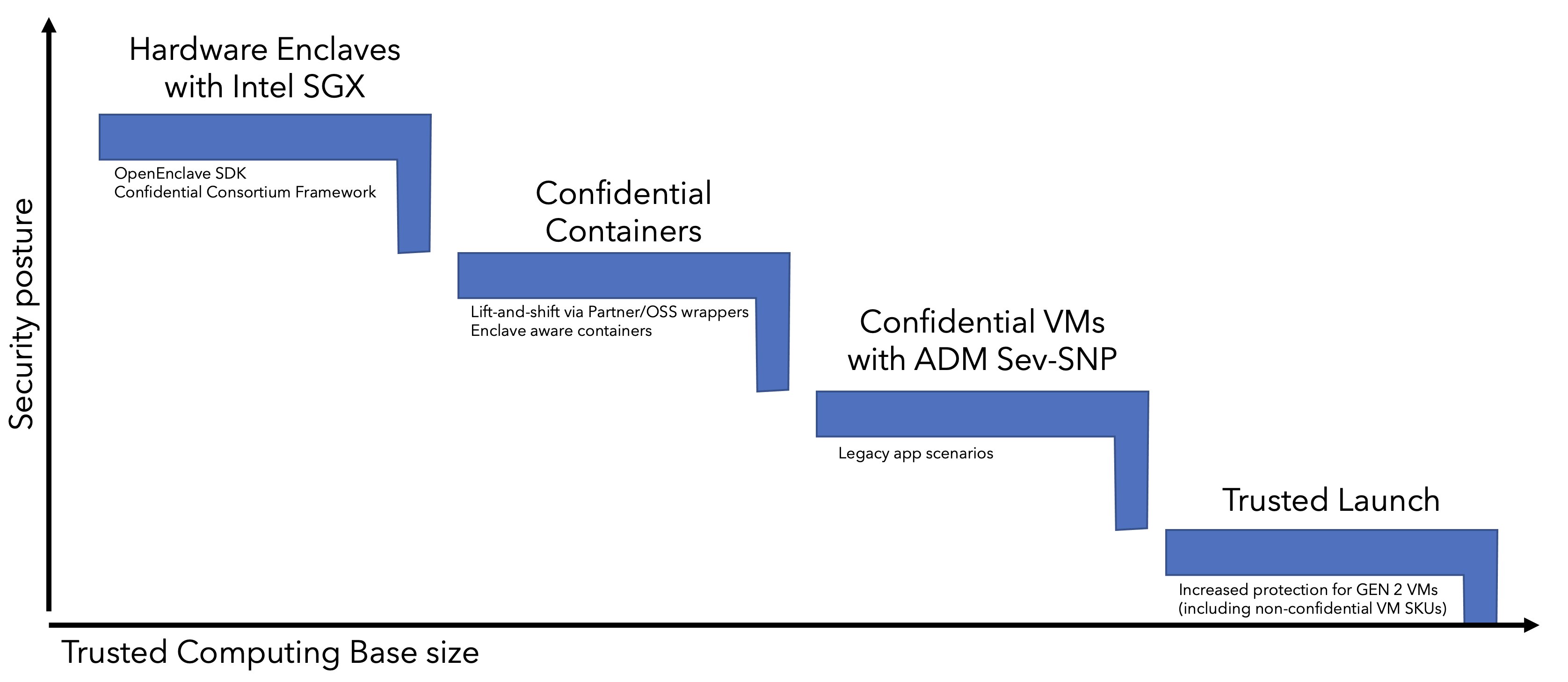

Many of the Microsoft sessions on confidential computing that I’ve seen talk about the “trust ladder”. The basic premise of the ladder comes down to this: the higher up the ladder you go, the stronger your security posture generally becomes.

If you want to run specific workloads in a confidential setting using infrastructure-as-a-service components, you’re in luck. Microsoft lets you pick from a couple of different virtual machine SKUs designed specifically for confidential computing IaaS workloads, so you can choose what’s best for you depending on your desired security posture.

Let’s take a look at that ladder. Keep in mind that, as we go through these “rungs”, there may not be a one-size-fits-all answer. As with lots of app modernization efforts, where you land partially depends on the requirements of your application and the constraints that its codebase is built on.

💡 I’ve expanded the ladder with confidential containers; I thought they too deserved a spot.

The more code you put inside the trusted execution environment, the larger the trusted computing base becomes, and the more vulnerable your setup might be. Code that runs inside an enclave cannot be viewed by the host or hypervisor, but all of it has to be trusted, so keeping the codebase that runs inside the TEE to a minimum will typically result in stronger confidentiality assurances.

VMs with Intel SGX app enclaves

At the very top of the ladder, you have “Confidential VMs with Intel SGX application enclaves”. Typically, you would go for this option if you only trust the application code and the processor that it runs on. Intel SGX is a feature of a specific set of Intel processors that lets you create enclaves. These enclaves keep data encrypted in memory while the CPU is processing it. The operating system and the hypervisor can’t view or access that data; the enclave is completely isolated from the host system.

This also implies that your own virtual machine administrators cannot gain access to any of this data. Even Azure datacenter administrators, who might have physical access to the machine your VM is running on, cannot access it. Intel SGX isolates its encrypted memory from all software on the VM, except for the application code inside the protected Intel SGX enclave.

💡 If you’d like to know more about this topic, feel free to read through my first blog post on Azure Confidential Computing, where I tinker around with the OpenEnclave SDK, or check out the Microsoft Azure docs page.

An Intel SGX enclave is a hardware-based TEE, so it only works on Intel SGX-capable processors. Microsoft Azure provides a few SKUs that let you use those TEEs. The virtual machine SKUs associated with the Intel SGX features all start with the “DC” prefix (note that some of the AMD-based confidential VM SKUs covered later start with “DC” as well). At the time of writing, you can use the Intel SGX features by deploying one of the following virtual machine SKUs:

- DCs_v2
  - A note about the Standard_DC8_v2 SKU: it is the only one in this series that supports Standard disks only. All other SKUs also support Premium disks.
- DCs_v3 and DCds_v3
  - Generally available as of May 24th, 2022.
  - Support Intel Total Memory Encryption - Multi Key.
    - Lets you encrypt each VM with its own separate, unique key, which enables always-on encryption and provides protection against other tenants on the same node.

💡 Are you also wondering how Microsoft comes up with all those virtual machine SKU names, and what they might mean? Well, fortunately for you, Microsoft has a neat Azure docs page that goes into the details and explains their reasoning!
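To make the naming convention a little more tangible, here is a minimal sketch that splits a VM size name into its parts. It assumes a simplified `Standard_<family><vCPUs><features>_v<version>` pattern, treats the leading upper-case letters (e.g. “DC”) as the family even though the docs split that further into family and sub-family, and only decodes three of the additive feature letters (`a`, `d`, `s`). `ConvertFrom-AzVmSizeName` is a hypothetical helper, not an Azure PowerShell cmdlet.

```powershell
# Hypothetical helper (not an Azure PowerShell cmdlet): splits a simplified
# Azure VM size name such as 'Standard_DC4ads_v5' into its parts.
# Assumes Standard_<family><vCPUs><features>_v<version>; sub-families,
# constrained vCPU counts and accelerator types are NOT handled.
function ConvertFrom-AzVmSizeName {
    param(
        [Parameter(Mandatory)]
        [string] $Name
    )

    # A few additive feature letters from the Azure naming docs (not exhaustive).
    $featureMeaning = @{
        'a' = 'AMD-based processor'
        'd' = 'local temp disk'
        's' = 'premium storage capable'
    }

    # .NET regexes are case-sensitive by default:
    # upper case = family, lower case = additive features.
    $m = [regex]::Match($Name, '^Standard_(?<family>[A-Z]+)(?<vcpus>\d+)(?<features>[a-z]*)_v(?<version>\d+)$')
    if (-not $m.Success) {
        throw "Unsupported VM size name: $Name"
    }

    # Translate each feature letter, flagging letters this sketch doesn't know.
    $features = @($m.Groups['features'].Value.ToCharArray() | ForEach-Object {
        $letter = [string] $_
        if ($featureMeaning.ContainsKey($letter)) { $featureMeaning[$letter] } else { "unknown ('$letter')" }
    })

    [pscustomobject]@{
        Family   = $m.Groups['family'].Value
        VCpus    = [int] $m.Groups['vcpus'].Value
        Features = $features
        Version  = [int] $m.Groups['version'].Value
    }
}
```

For example, `ConvertFrom-AzVmSizeName -Name 'Standard_DC4ads_v5'` returns the `DC` family, 4 vCPUs, version 5 and three decoded features, while `Standard_DC8_v2` comes back without any features, which matches the Standard-disks-only note above.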

Confidential Containers

The virtual machine SKUs mentioned earlier also open up an additional way to run your workloads: containerization. You can deploy confidential containers that use enclaves. This deployment method offers the strongest security and compute isolation, with a lower trusted computing base (TCB).

Azure Kubernetes Service (AKS) allows you to set up node pools that are backed by Intel SGX-capable processors. There are two programming/deployment models you can sink your teeth into:

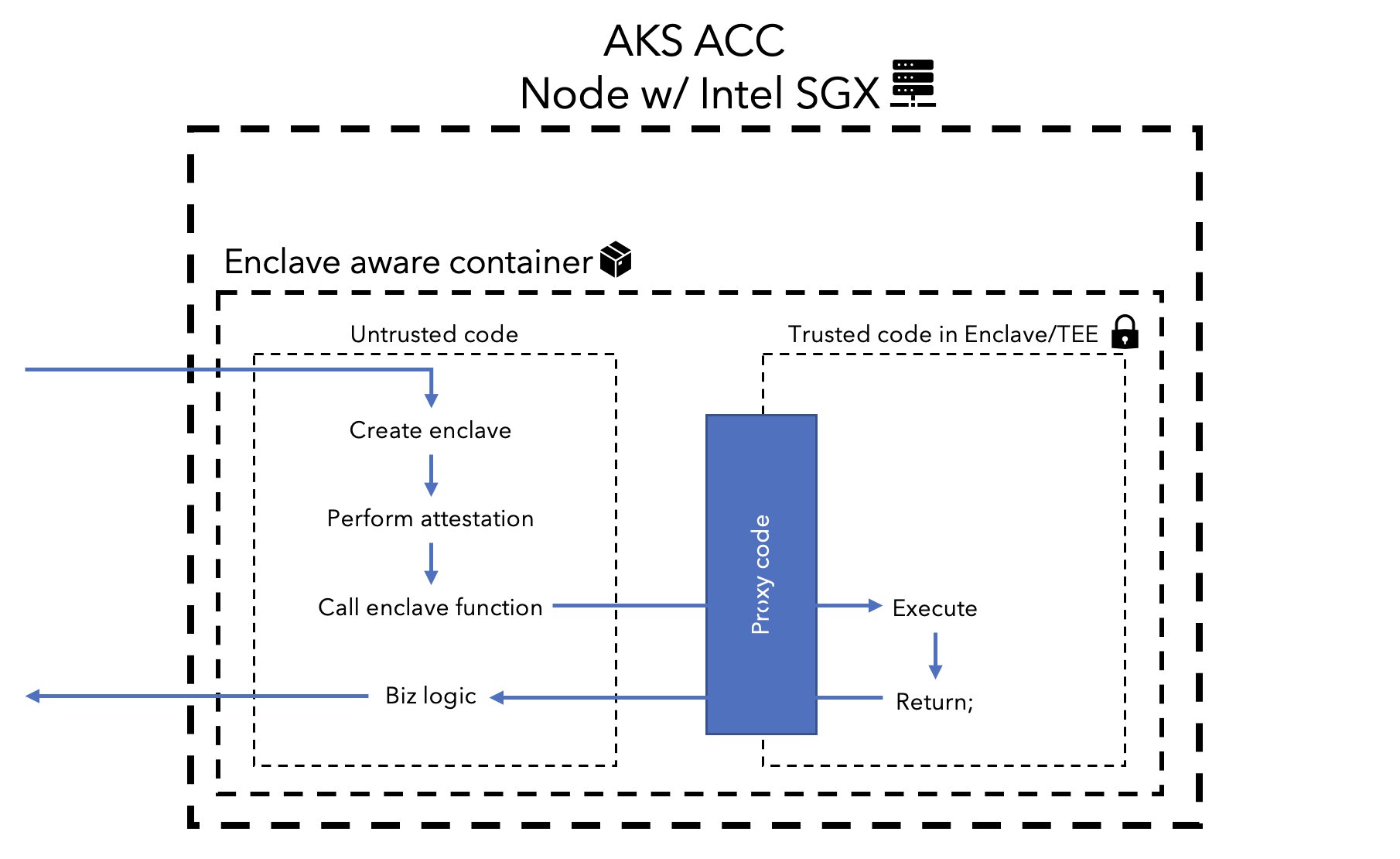

Enclave Aware Containers

These are conceptually very similar to typical SGX applications. When developing those kinds of applications, you have an untrusted component (host app) and a trusted component (enclave app). The host app can only communicate with the enclave through a layer of proxy code.

As far as I can tell, an enclave-aware container contains both the untrusted and the trusted components. Code that is marked to run inside the “trusted component” is executed inside the trusted execution environment.
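Purely to illustrate the host/enclave split, the sketch below models the “enclave” as a PowerShell dynamic module: its state is private to the module, and its exported functions play the role of the proxy layer through which the untrusted host talks to the trusted part. This is an analogy under loud assumptions: PowerShell provides no hardware isolation whatsoever, and `HypotheticalEnclave`, `Add-EnclaveRecord` and `Get-EnclaveAverage` are made-up names.

```powershell
# Conceptual analogy only: PowerShell offers NO hardware isolation.
# The dynamic module stands in for the trusted enclave app; its state is
# module-private, and the exported functions stand in for the proxy layer
# through which the untrusted host app talks to the enclave.
$enclave = New-Module -Name 'HypotheticalEnclave' -ScriptBlock {
    # "Protected" state: never exported, so host code cannot read it directly.
    $script:records = [System.Collections.Generic.List[double]]::new()

    function Add-EnclaveRecord {
        param([Parameter(Mandatory)] [double] $Value)
        $script:records.Add($Value)
    }

    function Get-EnclaveAverage {
        # Only an aggregate leaves the "enclave", never the individual records.
        if ($script:records.Count -eq 0) { return $null }
        ($script:records | Measure-Object -Average).Average
    }

    Export-ModuleMember -Function Add-EnclaveRecord, Get-EnclaveAverage
}

# Host side: it can only call the exported "proxy" functions.
Add-EnclaveRecord -Value 10
Add-EnclaveRecord -Value 20
Get-EnclaveAverage
```

The narrow interface is the point of the analogy: in a real enclave-aware app the boundary is enforced by the CPU, and the proxy code is typically generated by the SDK you build with.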

💡 There are many ways to create SGX applications. You can choose from several SDKs and frameworks that work with a variety of high- and low-level programming languages. Have a look at this list.

Unmodified containers

You can also lift and shift existing container images and run them inside an enclave without modifying any of the source code or recompiling. As you can probably imagine, this requires wrapping your existing container images with a runtime that supports Intel Software Guard Extensions (SGX), so that your container can run inside an enclave.

This can be as simple as switching from the official Alpine base image to a different base image that has been modified to use Intel SGX. The exact way your app gets loaded into an enclave is specific to each wrapper solution, but that is how they typically work.

💡 Microsoft lists several partners and open-source solutions that can help you jumpstart this process on their docs page.

Confidential VMs

A confidential virtual machine is much like any other virtual machine you’re used to. It runs an operating system such as Windows or Linux without any modifications to the OS, although you will need to run a generation 2 Azure virtual machine with a generation 2 operating system image. Everything still runs on top of a hypervisor, but the hypervisor has no access to what is running inside the virtual machine. In essence, the entire state of the VM is encrypted.

An important thing to keep in mind is that the trusted computing base is much larger when using a confidential VM. On the one hand, you have to place your trust in all of the kernel and user code on that VM. On the other hand, you can perform tasks with the tools you are familiar with, just as you normally would in Windows or Linux; the only thing that stands out is that everything is encrypted.

Azure confidential computing offers confidential VM SKUs based on AMD processors with SEV-SNP technology:

- DCas_v5 and DCads_v5 series.
  - General-purpose hardware category.
- ECas_v5 and ECads_v5 series.
  - Memory-optimized hardware category.

As of July 19th, 2022, these VM SKUs are generally available!
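Since both the SGX-based and the AMD-based sizes start with “DC”, you have to look at the rest of the size name to tell them apart. The sketch below is a hypothetical helper that only knows about the series listed in this post; any other size, including newer confidential series, comes back as `Unknown`.

```powershell
# Hypothetical helper: maps a VM size name to the confidential computing
# technology discussed in this post. Only covers DCs_v2, DCs_v3/DCds_v3,
# DCas_v5/DCads_v5 and ECas_v5/ECads_v5; everything else is 'Unknown'.
function Get-ConfidentialTechnology {
    param([Parameter(Mandatory)] [string] $VmSize)

    switch -Regex -CaseSensitive ($VmSize) {
        # e.g. Standard_DC2s_v2, Standard_DC8_v2 (no 's': Standard disks only)
        '^Standard_DC\d+s?_v2$'      { 'Intel SGX enclaves (DCs_v2)'; break }
        # e.g. Standard_DC4s_v3, Standard_DC4ds_v3
        '^Standard_DC\d+d?s_v3$'     { 'Intel SGX enclaves (DCs_v3/DCds_v3)'; break }
        # e.g. Standard_DC4as_v5, Standard_DC4ads_v5, Standard_EC4as_v5, Standard_EC4ads_v5
        '^Standard_[DE]C\d+ad?s_v5$' { 'AMD SEV-SNP confidential VM'; break }
        default                      { 'Unknown' }
    }
}
```

A regular, non-confidential size such as `Standard_D4as_v5` lacks the “C” after the family letter, so it falls through to `Unknown`.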

💡 I have another blog post dedicated entirely to Azure Confidential Virtual Machines.

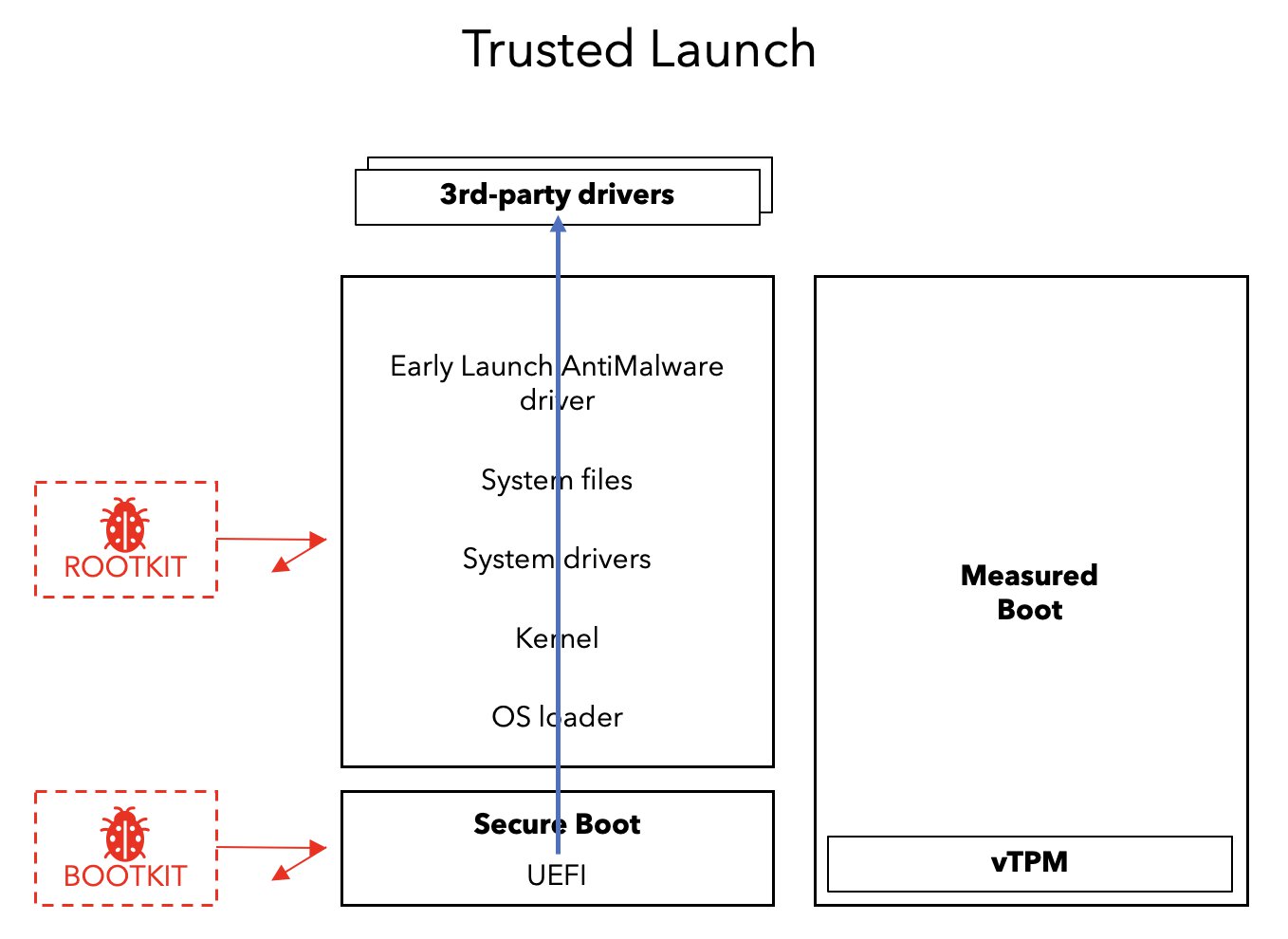

Trusted Launch

The last technology I will mention is called “Trusted Launch for Azure VMs”. It is a key foundational component of trusted execution environments and provides your VM with its own dedicated Trusted Platform Module instance. It consists of multiple security technologies, such as Secure Boot and a virtualized Trusted Platform Module (vTPM), that operate in tandem. By deploying virtual machines that use Trusted Launch, with Secure Boot and vTPM enabled, you can be more confident that the VM’s boot chain has not been compromised. The Trusted Launch capability comes at no additional cost.

- Secure Boot guards against sophisticated types of malware that typically run in kernel mode and are hidden from the user’s sight.
  - As the VM boots up, Secure Boot checks the signature of each piece of boot software, including UEFI firmware drivers, EFI applications and the operating system. If a component in the boot chain has been compromised, its cryptographic signature won’t match and the VM will not boot.
- The vTPM enables attestation by measuring the entire boot chain of your VM.
  - Measuring does not halt the boot process; it only computes the hash of the next object in the chain and stores it in the vTPM. If attestation fails, Microsoft Defender for Cloud will issue alerts.
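To get a feel for the difference between Secure Boot and the vTPM’s measured boot, here is a toy model of a boot chain. It rests on simplifying assumptions: each component is just a byte array, Secure Boot’s signature check is approximated by an allow-list of SHA-256 digests, and there is a single measurement register, whereas a real vTPM exposes several PCRs. Secure Boot refuses to continue on an unknown component, while measured boot never halts and only folds each component’s hash into the register with the TPM “extend” operation, PCR_new = SHA-256(PCR_old || SHA-256(component)). All function names are hypothetical.

```powershell
# Toy model of Trusted Launch's two boot-time mechanisms (illustration only).
# Assumptions: boot components are plain byte arrays, Secure Boot's signature
# check is approximated by an allow-list of SHA-256 digests, and there is a
# single measurement register (a real vTPM exposes several PCRs).
function Get-Sha256 {
    param([byte[]] $Bytes)
    $sha = [System.Security.Cryptography.SHA256]::Create()
    try {
        # The leading comma stops PowerShell from unrolling the byte array.
        , $sha.ComputeHash($Bytes)
    }
    finally {
        $sha.Dispose()
    }
}

function ConvertTo-Hex {
    param([byte[]] $Bytes)
    ($Bytes | ForEach-Object { $_.ToString('x2') }) -join ''
}

function Invoke-BootChain {
    param(
        [Parameter(Mandatory)] [byte[][]] $Components,
        [string[]] $AllowedDigests = @(),  # hex SHA-256 digests Secure Boot trusts
        [switch] $SecureBoot
    )

    # A SHA-256 PCR starts out as 32 zero bytes after a reset.
    $pcr = [byte[]]::new(32)

    foreach ($component in $Components) {
        $digest = Get-Sha256 -Bytes $component

        # Secure Boot: an untrusted component halts the boot.
        if ($SecureBoot -and ($AllowedDigests -notcontains (ConvertTo-Hex $digest))) {
            throw 'Secure Boot: untrusted boot component, refusing to boot.'
        }

        # Measured boot: never halts, it only extends the register.
        # PCR_new = SHA-256(PCR_old || SHA-256(component))
        $pcr = Get-Sha256 -Bytes ([byte[]]($pcr + $digest))
    }

    # The final value is what an attestation verifier would compare.
    ConvertTo-Hex $pcr
}
```

With Secure Boot off, a tampered chain still boots, but its final register value differs from the known-good one, which is exactly what attestation later picks up on. With Secure Boot on, the same chain never gets that far.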

Trusted Launch protects against the following types of malware:

- Bootkits

- Firmware rootkits

- Kernel rootkits

- Driver rootkits

⚠️ At the time of writing, you cannot enable Trusted Launch on existing virtual machines that were initially created without the feature; the only workaround is to deploy a new virtual machine with Trusted Launch enabled.

As mentioned earlier, Trusted Launch works in conjunction with Microsoft Defender for Cloud, formerly known as Azure Security Center and Azure Defender. Among other things, Defender for Cloud periodically performs attestation. If a threat is detected and you’ve opted into Defender for Cloud’s enhanced security features, a medium-severity alert is triggered.

💡 Attestation is a mechanism by which a third party establishes that a piece of software is running inside an enclave on an Intel SGX-enabled platform, before provisioning that software with secrets and protected data.
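The “prove it first, then get the secret” flow can be sketched from the relying party’s point of view. This is a deliberately stripped-down assumption: real attestation evidence (such as an SGX quote) is signed with hardware-rooted keys and usually validated by an attestation service, and that signature check is left out entirely here. `Get-SecretIfAttested` and the `Measurement`/`Nonce` evidence fields are hypothetical.

```powershell
# Hypothetical relying-party check, illustration only. Real attestation
# evidence is signed by hardware-rooted keys and is usually verified by an
# attestation service; that signature verification is deliberately omitted.
function Get-SecretIfAttested {
    param(
        [Parameter(Mandatory)] [pscustomobject] $Evidence,   # Measurement + Nonce reported by the enclave
        [Parameter(Mandatory)] [string] $ExpectedMeasurement, # hash of the enclave build we trust
        [Parameter(Mandatory)] [string] $Nonce,               # fresh challenge we issued, prevents replay
        [Parameter(Mandatory)] [string] $Secret
    )

    # Stale or replayed evidence: the enclave did not answer our challenge.
    if ($Evidence.Nonce -cne $Nonce) {
        throw 'Attestation failed: stale or replayed evidence.'
    }

    # Wrong code inside the enclave: never provision secrets to it.
    if ($Evidence.Measurement -cne $ExpectedMeasurement) {
        throw 'Attestation failed: unexpected enclave measurement.'
    }

    # Only now is the secret released to the enclave.
    $Secret
}
```

The nonce check matters as much as the measurement check: without a fresh challenge, an attacker could simply replay evidence captured from a legitimate enclave.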

Another thing to keep in mind is that Trusted Launch is only compatible with generation 2 (Gen2) Azure VMs and VM images. Be aware, though: some of your favourite Azure IaaS features and add-ons might be incompatible with generation 2 VMs, although that list has been shrinking over the years. And since we’re mentioning constraints, Trusted Launch itself also has some limitations that you may want to review before enabling the feature. So... that’s three things in total to keep in mind!

You can use any of the Azure APIs and SDKs to quickly get a list of generation 2 virtual machine images. Here is how I did it with Azure PowerShell:

```powershell
$deploymentLocation = "westeurope"

$publishers = @(
    "MicrosoftWindowsServer"
    "MicrosoftWindowsDesktop"
)

$publishers | ForEach-Object {
    Write-Host "`r`n⚡️ Gen 2 offers for publisher '$_' in '$deploymentLocation' :"

    # List every offer for this publisher, expand each offer into its SKUs
    # and keep the SKUs whose names indicate a generation 2 image.
    Get-AzVMImageOffer -Location $deploymentLocation -PublisherName $_ |
        Get-AzVMImageSku |
        Where-Object {
            $_.Skus -ilike "*-gensecond" -OR `
            $_.Skus -ilike "*-g2" -OR `
            $_.Skus -imatch "win.*11" # 👈 Windows 11 always requires gen 2
        }
}

# ⚡️ Gen 2 offers for publisher 'MicrosoftWindowsServer' in 'westeurope' :
#
# Skus                       Offer                                     PublisherName           Location    Id
# ----                       -----                                     -------------           --------    --
# windows-server-2022-g2     microsoftserveroperatingsystems-previews  MicrosoftWindowsServer  westeurope  /Subscriptions/00000000-0000-0000-0000-000000000000/Providers/Microsoft.Compute/Locations/westeurope/Publishers/MicrosoftWindowsServer/ArtifactTypes/V…
# 2016-datacenter-gensecond  windows-10-1607-vhd-server-prod-stage     MicrosoftWindowsServer  westeurope  /Subscriptions/00000000-0000-0000-0000-000000000000/Providers/Microsoft.Compute/Locations/westeurope/Publishers/MicrosoftWindowsServer/ArtifactTypes/V…
```
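If you want to experiment with the gen 2 filter without an Azure connection, the same name-based heuristic used in the script above can be pulled into a small function. `Test-Gen2ImageSku` is a hypothetical helper, and since it only looks at SKU names it is a heuristic rather than an authoritative check of the image’s generation.

```powershell
# Hypothetical helper: the name-based gen 2 heuristic from the script above,
# pulled out so it can be tried without an Azure connection.
function Test-Gen2ImageSku {
    param([Parameter(Mandatory)] [string] $Sku)

    $isGenSecond = $Sku -ilike '*-gensecond'
    $isG2        = $Sku -ilike '*-g2'
    $isWindows11 = $Sku -imatch 'win.*11'   # Windows 11 always requires gen 2

    $isGenSecond -or $isG2 -or $isWindows11
}

# Usage: filter a list of SKU names locally.
'2016-datacenter-gensecond', '2019-datacenter', 'win11-21h2-pro' |
    Where-Object { Test-Gen2ImageSku -Sku $_ }
```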

💡 Microsoft, and its open-source contributors, maintain lists of virtual machine sizes that support generation 2 VMs, as well as lists of compatible Marketplace OS images.

Developer tooling

In my previous post on this subject, I wanted to get a feel for what it would be like to build software that utilises confidential computing capabilities, mainly enclaves. To do just that, I wrote a tiny application in plain old C, using Microsoft’s OpenEnclave SDK.

💡 OpenEnclave lets developers run their applications inside a trusted execution environment on either Arm TrustZone or Intel Software Guard Extensions (SGX) enabled CPUs.

Since I wanted to debug and test my application on actual SGX-enabled hardware, I fired up an Azure confidential computing DCs_v2 Linux VM. After that, it was only a matter of hooking up VS Code’s remote development extension, and I was able to build and test the OpenEnclave SDK. Rad 😎! You can read my first blog post on Azure Confidential Computing here, or check out the Microsoft Azure docs page.

Anything else?

There are also plenty of platform-as-a-service (PaaS) features that are powered by confidential computing capabilities, many of which I didn’t discuss here but which might also be worth checking out.

Azure IoT Edge has enclave support, if you need to keep things confidential at the edge.

Microsoft Research provided an as-is example of how you can add confidential inferencing support to the ONNX Runtime, which was ported to work with SGX enclaves. This prevents the party hosting the machine learning model from accessing the inference request and its corresponding response.

Conclusion

Confidential computing is starting to attract a lot of interest, and according to Microsoft, its market is likely to grow exponentially over the coming years. Just as cloud platforms have commoditized many parts of our IT infrastructure, I think it won’t be long before confidential computing features are among those that everyone wants to use.

I am very tempted to take a closer look and test out how easy it is to lift-and-shift existing container images and run them inside of an enclave. Perhaps I should write about that next. 🤔

If you’ve noticed anything that’s partially or completely incorrect, feel free to let me know!
