Local OpenShift 4 with Azure App Services on Azure Arc


I started my Azure journey somewhere around 2014, deploying .NET applications while I was still making a living as a Microsoft .NET consultant. I suppose I could say that Windows Azure Web Sites was one of the first services that got me interested in, and ultimately hooked on, the potential of the Azure cloud. Its successor, Azure App Services, is a very versatile, fully managed web hosting platform that runs just about anything you can throw at it.

Fortunately, the service has been improved over the years with a plethora of features, such as hardware SKUs, WebJobs, Function apps, “Easy Auth”, high-density hosting, isolated tiers, VNET integration, container and Linux support, and more. There is a lot of value packed into this platform, for sure.

💖 I would be lying if I said that I do not have a soft spot for Azure App Services.

Fast forward to May 25th, at around 6:10:50 PM, and perhaps you can imagine my delight when I heard Satya Nadella, Microsoft’s CEO, announce that Azure Application Services would be able to “Run your apps, anywhere” through “App Services on Azure Arc enabled Kubernetes”.

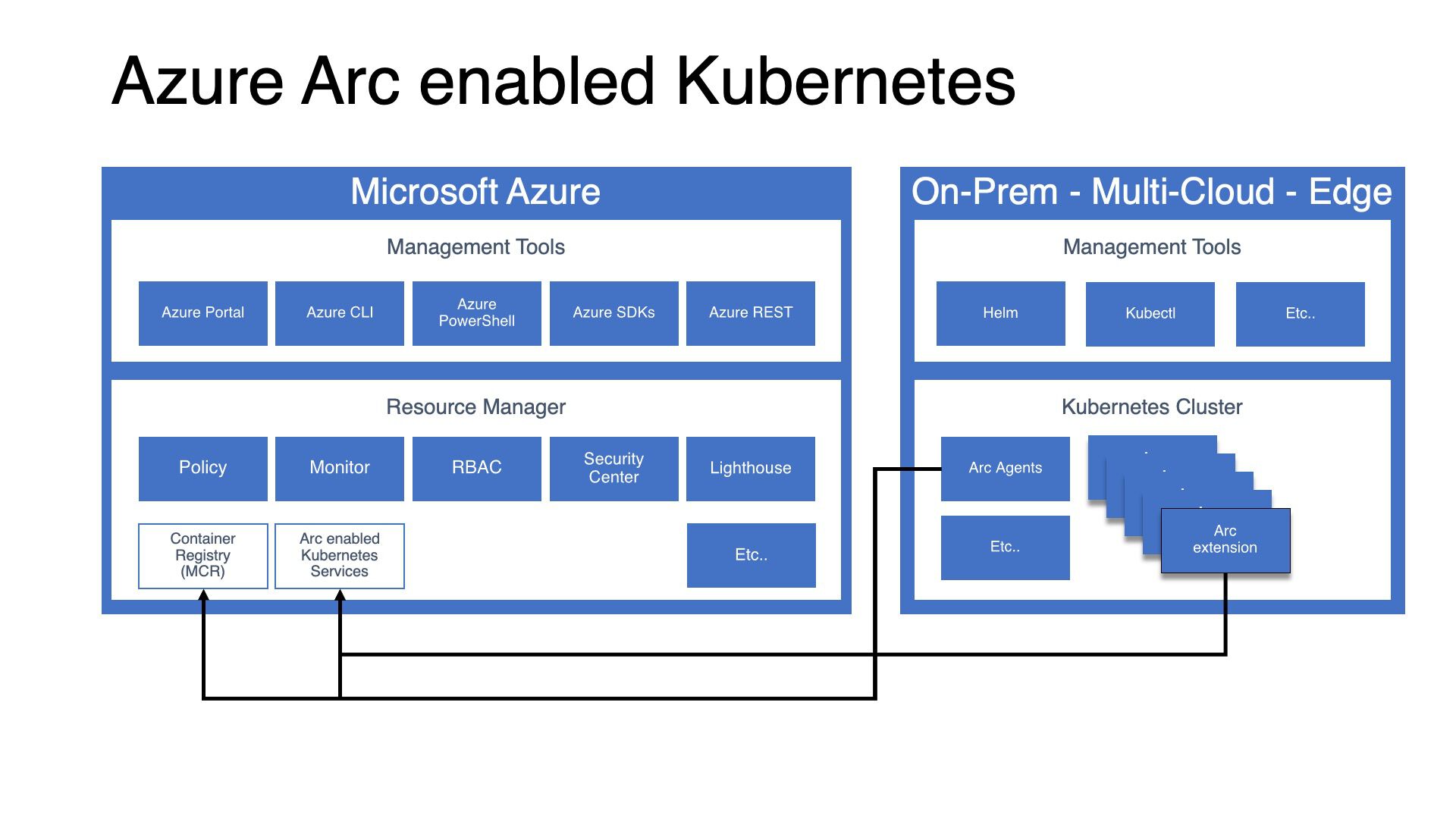

What is Azure Arc enabled Kubernetes?

If you have not heard of “Azure Arc enabled Kubernetes” before, don’t worry; let’s take a look at what the Microsoft docs have to say about this piece of technology:

“On its own, Kubernetes can deploy containerized workloads consistently on hybrid and multi-cloud environments. Azure Arc enabled Kubernetes, however, works as a centralized, consistent control plane that manages policy, governance, and security across heterogeneous (dissimilar) environments.”

With “Azure Arc enabled Kubernetes” you can connect any Kubernetes cluster, wherever it is located, to an Azure subscription. These clusters appear as “Connected Kubernetes” resources in the Azure portal, complete with their own resource ID and managed identity.

You can manage the “Microsoft.Kubernetes/connectedClusters” object (the “Azure Arc enabled Kubernetes” resource) as you would any other Azure resource: you can tinker with tags, set RBAC rules, view activity logs, set locks and assign it to a resource group. It doesn’t end there, though. Things start to get interesting with how “Azure Arc enabled Kubernetes” integrates with other Azure services; at the time of writing, it allows for the following scenarios:

- Deploy containerized applications using Helm charts.

- GitOps-based configuration management via Flux.

- View and monitor clusters via Azure Monitor for containers.

- Integrate with Azure Security Center.

- Enforce threat protection using Azure Defender for Kubernetes.

- Apply policies using Azure Policy for Kubernetes.

  - This uses a mix of Azure Policies and Rego syntax, the latter of which I wrote about earlier this year.

- Create custom locations as target locations for deploying “Azure Arc enabled Kubernetes” extensions.

App Services on Azure Arc (Preview)

This brings us to “App Services on Azure Arc” (K8SE), currently in public preview, which is one of the many available cluster extensions.

Extensions for “Azure Arc enabled Kubernetes” are built on top of Helm’s packaging components. Installing and managing a cluster extension is very straightforward, because it works much like managing most other resources in Azure: with the CLI, PowerShell or REST tooling that we have grown accustomed to.

As I was reading through the “Azure Arc enabled Kubernetes” documentation I noticed that the Azure Arc validation program page stated:

“Azure Arc enabled Kubernetes works with any Cloud Native Computing Foundation (CNCF) certified Kubernetes clusters. Future major and minor versions of Kubernetes distributions released by these (key industry Kubernetes offering) providers will be validated for compatibility with Azure Arc enabled Kubernetes.”

A little further down the page, there is a list of Kubernetes distributions that have been tested with “Azure Arc enabled Kubernetes”. One in particular caught my eye:

| Provider name | Distribution name | Version |
|---|---|---|
| RedHat | OpenShift Container Platform | 4.5, 4.6, 4.7 |

Red Hat OpenShift Container Platform is the self-managed version of OpenShift. This got me wondering whether I could get App Services running on Red Hat CodeReady Containers (CRC), a minimal OpenShift 4 cluster for development and testing purposes. It’s not quite the same thing, but I managed to get “App Services on Azure Arc” working on CRC. 🎉

Running K8SE Preview on CodeReady Containers

⚠️ Careful with preview features: “Azure Arc enabled Kubernetes preview features are available on a self-service, opt-in basis. Previews are provided “as is” and “as available,” and they’re excluded from the service-level agreements and limited warranty. Azure Arc enabled Kubernetes previews are partially covered by Azure customer support on a best-effort basis.”

I decided to skip the deployment of a full-scale Azure Red Hat OpenShift (ARO) cluster this time around, though the process should be roughly the same for any type of OpenShift cluster. At a high level we will do the following:

- Set up the CodeReady Containers (OpenShift) cluster;

- Deploy the “Azure Arc enabled Kubernetes” agents;

- Deploy “Azure App Services on Azure Arc” Preview (K8SE);

- Fix issues related to security context constraints, dynamic volume provisioning and an incorrect service type.

CRC: Required specs

The OpenShift cluster requires the following minimum resources to run in the CodeReady Containers virtual machine:

- 4 physical CPU cores

- 9 GB of free memory

- 35 GB of storage space

14 GB of memory is required if you want to enable monitoring, alerting and telemetry gathering. To ensure that CRC can run on most desktops and laptops, this feature is initially disabled but can be switched on with one simple command.
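Because “crc config set memory” expects mebibytes rather than gigabytes, it is easy to pass the wrong number. The sketch below is a pair of hypothetical helpers (not part of crc) that convert GiB to MiB and check a value against the monitoring requirement; treating the “14 GB” above as 14 GiB is my assumption.

```bash
#!/usr/bin/env bash
# Hypothetical helpers, not part of crc. Assumption: the monitoring
# requirement of "14 GB" is interpreted as 14 GiB.
MONITORING_MIN_GIB=14

# Print the MiB equivalent of a whole number of GiB (1 GiB = 1024 MiB).
gib_to_mib() {
  echo $(( $1 * 1024 ))
}

# Succeed (exit status 0) when the given MiB value meets the monitoring minimum.
meets_monitoring_minimum() {
  [ "$1" -ge "$(gib_to_mib "$MONITORING_MIN_GIB")" ]
}
```

For example, “crc config set memory "$(gib_to_mib 15)"” sets the same 15360 MiB that is used below.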

If you’re low on available memory, don’t fear. I recommend deploying an Azure VM that supports nested virtualization and has about 32 GiB of memory. A “Standard D8s v3” (8 vCPUs, 32 GiB memory) will work quite well here.

CRC: Setup Ceremony

Download the latest release of CodeReady Containers for your platform from Red Hat’s website, along with the pull secret that CRC needs to function. Once you have downloaded the binary, open up a terminal and execute the following commands.

# We want our cluster to consume a little more available memory.

# Set the limit in mebibytes (MiB)!

crc config set memory 15360

# Prometheus and the related monitoring, alerting, and telemetry functionality.

# Telemetry functionality is responsible for listing your cluster in the Red Hat OpenShift Cluster Manager.

crc config set enable-cluster-monitoring true

# ⚠️ Once enabled, cluster monitoring cannot simply be switched off; you must set "enable-cluster-monitoring" to false and run "crc delete".

# Execute the setup and follow its instructions.

crc setup

# Start the OpenShift cluster, you will be prompted to enter the pull secret.

crc start

# The server is accessible via web console at:

# https://console-openshift-console.apps-crc.testing

#

# Log in as administrator:

# Username: kubeadmin

# Password: <password>

#

# Log in as user:

# Username: developer

# Password: developer

# Add the OpenShift CLI to your environment.

eval $(crc oc-env)

# This runs the "export PATH=..." line that "crc oc-env" prints, for the current shell session.

Deploying Azure Arc enabled Kubernetes

We’ve reached the stage where we want to onboard our local dev cluster into Azure, using “Azure Arc”. The “Arc enabled Kubernetes” quickstart is very clear on how this needs to be done.

To follow along, you will need these tools:

- Azure CLI v2.16.0+

- Helm v3+

- OpenShift CLI

  - This comes with CRC; we added it to our environment in the previous step.
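Checking those minimum versions by eye is easy enough, but it can also be scripted. A minimal sketch, assuming GNU “sort -V” (coreutils) is available; “version_ge” and “normalize_version” are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Hypothetical helpers. Assumes GNU "sort -V" for version-aware sorting.

# Succeed when version $1 is greater than or equal to version $2:
# after a version sort, the smaller of the two comes first.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Strip a leading "v" and any "+<build metadata>" suffix,
# e.g. "v3.6.3+gd506314" becomes "3.6.3".
normalize_version() {
  local v="${1#v}"
  echo "${v%%+*}"
}
```

For Helm, “version_ge "$(normalize_version "$(helm version --short)")" 3.0.0” succeeds on any v3 release. For the Azure CLI, “az version --query '"azure-cli"' --output tsv” should print the installed version, which you can compare against 2.16.0 the same way.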

Let’s run a few checks before we begin deploying resources.

# Ensure that you have Helm v3+ installed.

helm version --short

# v3.6.3

# Install or upgrade the connectedk8s Azure CLI extension

az extension add --upgrade --yes --name connectedk8s

# log in to your Azure subscription

# ensure that you have

az login

# log in to your OpenShift cluster

oc login -u kubeadmin

# Switch to the crc-admin context

oc config use-context crc-admin

# Logged into "https://api.crc.testing:6443" as "kubeadmin" using existing credentials.

# You have access to 61 projects, the list has been suppressed. You can list all projects with 'oc projects'

# Using project "default".

# Register the resource providers

az provider register --namespace Microsoft.Kubernetes

az provider register --namespace Microsoft.KubernetesConfiguration

az provider register --namespace Microsoft.ExtendedLocation

# Track the registration here, it should take about 10 minutes.

az provider show -n Microsoft.Kubernetes -o table

az provider show -n Microsoft.KubernetesConfiguration -o table

az provider show -n Microsoft.ExtendedLocation -o table

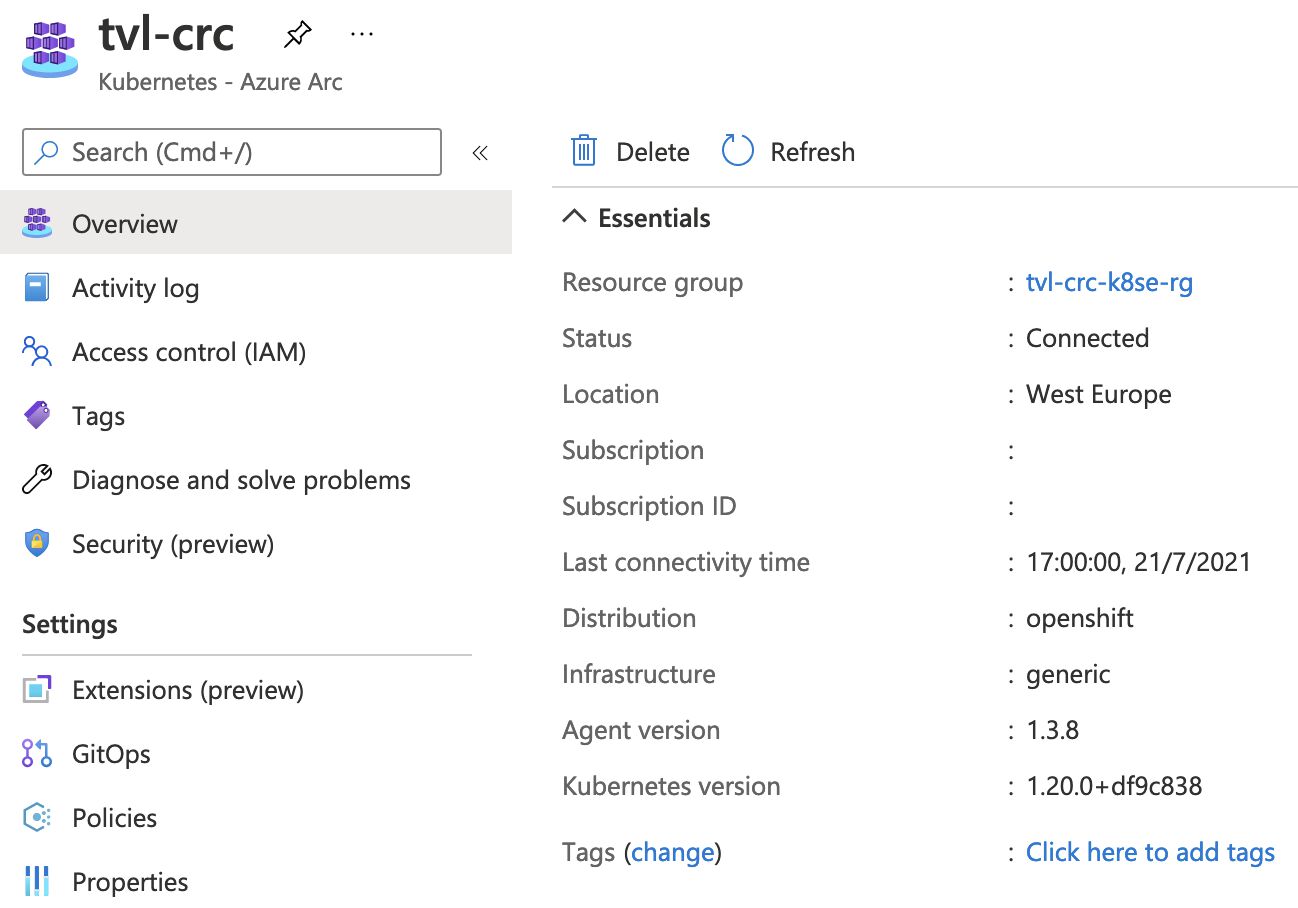
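Rather than re-running “az provider show” until every provider reports “Registered”, you can poll in a loop. A sketch under stated assumptions: “wait_for_providers” is a hypothetical helper, and the TIMEOUT_S and INTERVAL_S variables (defaulting to 900 and 30 seconds) are my own knobs. Later in this post we also use “az provider register --wait”, which blocks until registration completes.

```bash
#!/usr/bin/env bash
# Hypothetical helper: poll until every provider namespace passed in reports
# "Registered", or give up after TIMEOUT_S seconds (assumed default: 900).
wait_for_providers() {
  local timeout_s="${TIMEOUT_S:-900}" interval_s="${INTERVAL_S:-30}"
  local elapsed=0 ns state pending
  while true; do
    pending=0
    for ns in "$@"; do
      state=$(az provider show --namespace "$ns" --query registrationState --output tsv)
      if [ "$state" != "Registered" ]; then
        echo "$ns: ${state:-unknown}" >&2
        pending=1
      fi
    done
    if [ "$pending" -eq 0 ]; then
      return 0
    fi
    if [ "$elapsed" -ge "$timeout_s" ]; then
      echo "Timed out after ${elapsed}s" >&2
      return 1
    fi
    sleep "$interval_s"
    elapsed=$((elapsed + interval_s))
  done
}
```

Usage: “wait_for_providers Microsoft.Kubernetes Microsoft.KubernetesConfiguration Microsoft.ExtendedLocation”.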

We can now start with deploying some “Azure Arc enabled Kubernetes” resources!

resourceGroupName="tvl-crc-k8se-rg"

clusterName="tvl-crc"

resourceGroupLocation="westeurope"

# Create the resource group that will contain our Azure resources

az group create --name $resourceGroupName --location $resourceGroupLocation --output table

# This operation might take a while...

# You must add the correct security context constraint (SCC) to the service account first!

# 👇 Skipping this will, most likely, ruin your day. I'll explain why in a moment.

oc adm policy add-scc-to-user privileged system:serviceaccount:azure-arc:azure-arc-kube-aad-proxy-sa

# clusterrole.rbac.authorization.k8s.io/system:openshift:scc:privileged added: "azure-arc-kube-aad-proxy-sa"

# Make sure that you have connected to the OpenShift cluster at least once, as this command

# reads from your local "kubeconfig" file! Also ensure that the project "azure-arc" does not exist yet.

az connectedk8s connect --resource-group $resourceGroupName --name $clusterName --distribution openshift --infrastructure auto

# Helm release deployment succeeded

That’s all it takes to get started with “Azure Arc enabled Kubernetes”; a very straightforward experience! We can already begin to take advantage of some of its features, such as Azure Policy.

By default, the “Azure Arc enabled Kubernetes” agent polls Azure hourly for a newer version of the agent. If it finds one, it triggers a Helm chart upgrade for the “Azure Arc” agents. To opt out of auto-upgrade, specify the “--disable-auto-upgrade” flag when running the “az connectedk8s connect” command. You can also toggle this feature on or off later and even perform manual agent upgrades; the Azure Arc docs describe this feature in more detail.
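For an already connected cluster, the toggle and the manual upgrade are separate “az connectedk8s” subcommands. The wrappers below are a sketch: “disable_arc_auto_upgrade” and “upgrade_arc_agents” are hypothetical names, and the “--auto-upgrade” and “--agent-version” flags are written as I recall them from the Azure Arc agent upgrade docs, so confirm them with “az connectedk8s update --help” and “az connectedk8s upgrade --help” first.

```bash
#!/usr/bin/env bash
# Hypothetical wrappers; verify the flags against your connectedk8s extension version.

# Turn automatic agent upgrades off. Arguments: <resource-group> <cluster-name>
disable_arc_auto_upgrade() {
  az connectedk8s update --resource-group "$1" --name "$2" --auto-upgrade false
}

# Manually upgrade the agents. Arguments: <resource-group> <cluster-name> <agent-version>
upgrade_arc_agents() {
  az connectedk8s upgrade --resource-group "$1" --name "$2" --agent-version "$3"
}
```

With the variables used in this post, that would be “disable_arc_auto_upgrade "$resourceGroupName" "$clusterName"”.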

oc get pods --namespace azure-arc

# NAME READY STATUS RESTARTS AGE

# cluster-metadata-operator-79b689c88d-h7qqr 2/2 Running 0 1m

# clusterconnect-agent-576b94657c-r6fb8 3/3 Running 0 1m

# clusteridentityoperator-54456c4b9c-hrpdv 2/2 Running 0 1m

# config-agent-59fb85f-2bd2g 2/2 Running 0 1m

# controller-manager-56786854d5-2spx8 2/2 Running 0 1m

# extension-manager-9fb8c5b47-k67m2 2/2 Running 0 1m

# flux-logs-agent-6596f58c56-dd6xp 1/1 Running 0 1m

# kube-aad-proxy-847f7887c9-zvfp8 2/2 Running 0 1m

# metrics-agent-57d5b4c5b4-d4kdb 2/2 Running 0 1m

# resource-sync-agent-68548bd947-jflrx 2/2 Running 0 1m

az connectedk8s list --resource-group $resourceGroupName --output table

# Name Location ResourceGroup

# ------------- ---------- ---------------

# tvl-crc westeurope tvl-crc-k8se-rg
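Scanning that pod list by eye works for ten pods, but it gets tedious once more components are installed. A small, hypothetical helper (not part of “oc”) can filter the output of “oc get pods --no-headers” for pods that are not “Running” or not fully ready; it assumes the default column layout (NAME, READY, STATUS, ...).

```bash
#!/usr/bin/env bash
# Hypothetical helper: read "oc get pods --no-headers" output on stdin and
# print the names of pods that are not Running or whose READY count is
# not complete (e.g. "1/2").
not_ready_pods() {
  awk '{
    split($2, ready, "/")
    if ($3 != "Running" || ready[1] != ready[2]) print $1
  }'
}
```

“oc get pods --namespace azure-arc --no-headers | not_ready_pods” prints nothing when everything is healthy. Pods of finished Jobs show “Completed” and would be listed too, so read the output with that in mind.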

However, there is an important OpenShift concept that we need to grasp to fully understand what we are doing. What’s up with the “oc adm policy add-scc-to-user privileged” command that we ran?

OpenShift security context constraints 101

As far as I am aware, OpenShift is an opinionated, enterprise-ready Kubernetes stack, which means that some things work slightly differently from other Kubernetes distributions you may be familiar with. One of these things is the concept of security context constraints (SCCs), which are similar to pod security policies.

To prevent a pod or container from requesting more process privileges than it should, OpenShift uses security context constraints. Pods and containers request privileges through the securityContext in their YAML manifests; an SCC is a cluster-level object that defines which of those requests the OpenShift API will admit. SCCs are composed of settings and strategies that control the security features a pod has access to.

💡 The admission process in the API server takes care of checking whether a pod is compliant. The set of SCCs that admission uses to authorize a pod is determined by the user identity and the groups that the user belongs to. Additionally, if the pod specifies a service account, the set of allowable SCCs includes any constraints accessible to that service account. In other words, a pod may use an SCC if the user, one of the user’s groups, or the pod’s service account is allowed to use it.

Alexandre Menezes, who works at Red Hat, wrote an excellent blog post detailing some of the different types of SCC you can enable:

| SCC | Description |
|---|---|
| restricted | Denies access to all host features and requires pods to be run with a UID, and SELinux context that are allocated to the namespace. |
| nonroot | All features of the restricted SCC but allows users to run with any non-root UID. |
| anyuid | Provides all features of the restricted SCC but allows users to run with any UID and any GID. |
| hostmount-anyuid | Provides all the features of the restricted SCC but allows host mounts and any UID by a pod. |
| hostnetwork | Allows using host networking and host ports but still requires pods to be run with a UID and SELinux context that are allocated to the namespace. |
| node-exporter | Provides features of anyuid but also allows access to the host network, host PIDs, and host volumes, but not host IPC. (Only use with Prometheus) |
| hostaccess | Allows access to all host namespaces but still requires pods to be run with a UID and SELinux context that are allocated to the namespace. |
| privileged | Allows access to all privileged and host features and the ability to run as any user, any group, any fsGroup, and with any SELinux context. |

You might find yourself wondering: “Why is he going off on this tangent?”

It so happens that, for “Azure Arc enabled Kubernetes” to work, the “kube-aad-proxy” pod runs under the “azure-arc-kube-aad-proxy-sa” service account, and the “privileged” SCC has to be added to this service account for the pod to function. The deployment manifest for “kube-aad-proxy” sets the “securityContext.privileged” property to “true”, so we definitely need an SCC that has the “allowPrivilegedContainer” property set to “true”. Out of the box, the “privileged” SCC is the only SCC that allows this.

We can check that the SCC has been added to the service account correctly:

oc adm policy who-can use scc privileged --namespace azure-arc

# resourceaccessreviewresponse.authorization.openshift.io/<unknown>

#

# Namespace: azure-arc

# Verb: use

# Resource: securitycontextconstraints.security.openshift.io

#

# Users: kubeadmin

# system:admin

# system:serviceaccount:azure-arc:azure-arc-kube-aad-proxy-sa 👈 Created by us!

# system:serviceaccount:azure-arc:azure-arc-operatorsa 👈 Created automatically?

# system:serviceaccount:openshift-..

# ...

# Groups: system:cluster-admins

# system:masters
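If you would rather script this check than read the list, a hypothetical helper can look for the exact service account identity in the “who-can” output. It matches whole whitespace-separated fields, so a longer name such as “...-proxy-sa-foo” does not count as a hit; the output format it parses is assumed to match the listing above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed when a service account may use an SCC.
# Arguments: <scc> <namespace> <service-account>
sa_can_use_scc() {
  local scc="$1" ns="$2" sa="$3"
  oc adm policy who-can use scc "$scc" --namespace "$ns" \
    | awk -v id="system:serviceaccount:${ns}:${sa}" '
        { for (i = 1; i <= NF; i++) if ($i == id) found = 1 }
        END { exit !found }'
}
```

For example, “sa_can_use_scc privileged azure-arc azure-arc-kube-aad-proxy-sa && echo granted”.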

At the time of writing, many of the “Arc enabled Kubernetes” pods run a Fluent Bit container alongside their main container. All pods, except the “Flux” pods (used for enabling the GitOps feature), seem to be running privileged containers. Since privileged containers have almost completely unrestricted access to the host, it might be worth investigating whether we can use a custom, more restrictive SCC for this use case.

I stumbled across a custom SCC, written by Fluent-Bit contributor Mohammad Yosefpor, that might just do the trick. Even though we still need to run privileged containers to get this to work, we’re able to lock down certain seccompProfiles and allowedUnsafeSysctls a bit more, compared to when we’d use the “privileged” SCC.

It’s also possible that I am entirely missing the point and that we simply need the “privileged” SCC for a very specific reason; it’s not 100% clear to me at this time. At any rate, should you want to know more about SCCs, you can peruse the OpenShift docs on managing SCCs.

K8SE: Adding Azure CLI extensions

Because some of the commands we are about to use are not yet part of the core Azure CLI, we can add them as extensions with the following commands.

az extension add --upgrade --yes --name connectedk8s

az extension add --upgrade --yes --name k8s-extension

az extension add --upgrade --yes --name customlocation

az provider register --namespace Microsoft.ExtendedLocation --wait

az provider register --namespace Microsoft.Web --wait

az provider register --namespace Microsoft.KubernetesConfiguration --wait

az extension remove --name appservice-kube

az extension add --yes --source "https://aka.ms/appsvc/appservice_kube-latest-py2.py3-none-any.whl" # Installs the appservice-kube preview

A Log Analytics workspace is not required to run “App Service on Azure Arc”, but it is very useful for getting the application logs of apps running in the “Azure Arc enabled Kubernetes” cluster through the Azure tools and integrations you might already be familiar with.

resourceGroupName="tvl-crc-k8se-rg"

clusterName="tvl-crc"

workspaceName="$clusterName-workspace"

# We will deploy a log-analytics workspace and set a daily limit of 3 gigabytes

# to keep the costs of our dev environment down.

az monitor log-analytics workspace create \

--resource-group $resourceGroupName \

--workspace-name $workspaceName \

--quota 3

logAnalyticsWorkspaceId=$(az monitor log-analytics workspace show \

--resource-group $resourceGroupName \

--workspace-name $workspaceName \

--query customerId \

--output tsv)

logAnalyticsWorkspaceIdEnc=$(printf %s $logAnalyticsWorkspaceId | base64)

logAnalyticsKey=$(az monitor log-analytics workspace get-shared-keys \

--resource-group $resourceGroupName \

--workspace-name $workspaceName \

--query primarySharedKey \

--output tsv)

logAnalyticsKeyEncWithSpace=$(printf %s $logAnalyticsKey | base64)

logAnalyticsKeyEnc=$(echo -n "${logAnalyticsKeyEncWithSpace//[[:space:]]/}")
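The whitespace stripping is needed because GNU “base64” wraps its output at 76 characters, and the encoded shared key is longer than that (the workspace ID is short enough not to wrap). A minimal sketch of a single-line encoder; “b64_nowrap” is a hypothetical helper, and on GNU systems “base64 -w 0” achieves the same result.

```bash
#!/usr/bin/env bash
# Hypothetical helper: base64-encode a value on a single line by deleting
# the newlines that GNU base64 inserts every 76 characters.
b64_nowrap() {
  printf '%s' "$1" | base64 | tr -d '\n'
}
```

With it, the two steps above collapse into “logAnalyticsKeyEnc=$(b64_nowrap "$logAnalyticsKey")”.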

K8SE: Deploying the K8SE extension

Set the following environment variables for the desired name of the App Service extension and the cluster namespace in which resources should be provisioned. We will also enter the IP address we want App Service app hostnames to resolve to.

# Name of the App Service extension

extensionName="appservice-ext"

# Namespace in your cluster to install the extension and provision resources

appsNamespace="appservice-ns"

# 👇 Your CRC IP; in my case it was "127.0.0.1".

staticIp=$(crc ip)
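Since “staticIp” is passed to both “loadBalancerIp” and “--static-ip”, it can be worth failing early if “crc ip” returned nothing useful (for example, because the cluster is stopped). A sketch that accepts dotted-quad IPv4 addresses only; “is_ipv4” is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed only for a dotted-quad IPv4 address whose
# four parts are each between 0 and 255.
is_ipv4() {
  local ip="$1" octet
  local re='^[0-9]{1,3}(\.[0-9]{1,3}){3}$'
  [[ $ip =~ $re ]] || return 1
  local IFS=.
  for octet in $ip; do
    [ "$octet" -le 255 ] || return 1
  done
}
```

For example, “is_ipv4 "$staticIp" || echo "Unexpected crc ip output: '$staticIp'" >&2” before running “az k8s-extension create”.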

We can now begin the installation of the App Service extension into the “Azure Arc” connected cluster, CRC in our case, with Log Analytics enabled.

az k8s-extension create \

--resource-group $resourceGroupName \

--name $extensionName \

--cluster-type connectedClusters \

--cluster-name $clusterName \

--extension-type 'Microsoft.Web.Appservice' \

--release-train stable \

--auto-upgrade-minor-version true \

--scope cluster \

--release-namespace $appsNamespace \

--configuration-settings "Microsoft.CustomLocation.ServiceAccount=default" \

--configuration-settings "appsNamespace=${appsNamespace}" \

--configuration-settings "clusterName=${clusterName}" \

--configuration-settings "loadBalancerIp=${staticIp}" \

--configuration-settings "keda.enabled=true" \

--configuration-settings "buildService.storageClassName=appservice-ext-k8se-build-service" \

--configuration-settings "buildService.storageAccessMode=ReadWriteOnce" \

--configuration-settings "customConfigMap=${appsNamespace}/kube-environment-config" \

--configuration-settings "logProcessor.appLogs.destination=log-analytics" \

--configuration-protected-settings "logProcessor.appLogs.logAnalyticsConfig.customerId=${logAnalyticsWorkspaceIdEnc}" \

--configuration-protected-settings "logProcessor.appLogs.logAnalyticsConfig.sharedKey=${logAnalyticsKeyEnc}"

extensionId=$(az k8s-extension show \

--cluster-type connectedClusters \

--cluster-name $clusterName \

--resource-group $resourceGroupName \

--name $extensionName \

--query id \

--output tsv)

az resource wait \

--ids $extensionId \

--custom "properties.installState!='Pending'" \

--api-version "2020-07-01-preview"

You can track the deployment of extensions by looking at the logs of the “extension-manager” pod in the “azure-arc” namespace, more specifically the “manager” container within that pod. The following command outputs the last 10 log entries for the container:

oc logs $(oc get pod --selector app.kubernetes.io/component=extension-manager --namespace azure-arc -o jsonpath="{.items[0].metadata.name}") --tail=10 --container manager --namespace azure-arc

# ...

# {"Message":"Service does not have load balancer ingress IP address: appservice-ns/appservice-ext-k8se-envoy" ... }

K8SE: A note on missing configuration settings

I could not find a full list of the “--configuration-settings” values anywhere in the docs. These settings belong to the “K8SE” Helm chart that gets deployed into the cluster.

I will not reveal the full list of settings here, because I may not be at liberty to do so. However, if you connect to the “extension-manager” pod in the “azure-arc” namespace, you will find a directory called “aHR0cHM6Ly9tY3IubWljcm9zb2Z0LmNvbS9rOHNlL2dvY2FuYXJ5Lw==”, which is the base64-encoded form of “https://mcr.microsoft.com/k8se/gocanary/”. That directory contains a TAR archive of the “K8SE” Helm chart; extracting it reveals the chart itself.

The chart’s values.yaml lists the different configuration settings. This is a convoluted way of getting to them, but “App Services on Azure Arc” is still in preview, so perhaps a complete list will be published at a later date. If you want to see this for yourself, you will need to open a remote shell in the pod:

# 👇 This is optional, only if you want to see the Helm chart.

oc rsh --namespace azure-arc $(oc get pod --selector app.kubernetes.io/component=extension-manager --namespace azure-arc -o jsonpath="{.items[0].metadata.name}")
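The odd directory name is easy to decode locally, which is how you can tell which registry path the chart came from. A minimal sketch, assuming a “base64” that supports “-d” (GNU coreutils and recent macOS both do):

```bash
#!/usr/bin/env bash
# Decode the directory name found in the extension-manager pod; it is the
# base64-encoded registry URL of the K8SE Helm chart.
chart_dir="aHR0cHM6Ly9tY3IubWljcm9zb2Z0LmNvbS9rOHNlL2dvY2FuYXJ5Lw=="
printf '%s' "$chart_dir" | base64 -d
echo  # base64 -d prints no trailing newline for this input
```

This prints “https://mcr.microsoft.com/k8se/gocanary/”.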

K8SE: Fixing deployment issues

A couple of OpenShift/CRC related issues should begin to pop up at this stage. Let’s see how the installation is going:

oc get deployments --namespace appservice-ns

# NAME READY UP-TO-DATE AVAILABLE AGE

# appservice-ext-k8se-activator 1/1 1 1 45s

# appservice-ext-k8se-app-controller 1/1 1 1 45s

# appservice-ext-k8se-build-service 0/1 0 0 45s

# appservice-ext-k8se-envoy 0/3 3 0 45s

# appservice-ext-k8se-http-scaler 1/1 1 1 45s

# appservice-ext-k8se-keda-metrics-apiserver 1/1 1 1 45s

# appservice-ext-k8se-keda-operator 1/1 1 1 45s

oc get pods --namespace appservice-ns

# NAME READY STATUS RESTARTS AGE

# appservice-ext-k8se-activator-94f8b76f5-nbczp 1/1 Running 0 13m

# appservice-ext-k8se-app-controller-787dbb799b-sspld 1/1 Running 0 13m

# appservice-ext-k8se-envoy-78485f7fb9-44mq8 0/1 CrashLoopBackOff 7 13m

# appservice-ext-k8se-envoy-78485f7fb9-9klkv 0/1 CrashLoopBackOff 7 13m

# appservice-ext-k8se-envoy-78485f7fb9-sdk6p 0/1 CrashLoopBackOff 7 13m

# appservice-ext-k8se-http-scaler-69df84c9d7-gktjp 1/1 Running 0 13m

# appservice-ext-k8se-keda-metrics-apiserver-6649456bbd-kkqjk 1/1 Running 0 13m

# appservice-ext-k8se-keda-operator-848b8fdf67-26fk2 1/1 Running 0 13m

oc get events --namespace appservice-ns

# LAST SEEN TYPE REASON OBJECT MESSAGE

# 0s Warning ProvisioningFailed persistentvolumeclaim/appservice-ext-k8se-build-service Failed to create provisioner: Failed to get Azure Cloud Provider. GetCloudProvider returned <nil> instead.

# 0s Warning FailedCreate replicaset/appservice-ext-k8se-build-service-76fc77dc64 Error creating: pods "appservice-ext-k8se-build-service-76fc77dc64-" is forbidden: unable to validate against any security context constraint: [spec.containers[0].securityContext.privileged: Invalid value: true: Privileged containers are not allowed]

# 0s Warning FailedCreate daemonset/appservice-ext-k8se-img-cacher Error creating: pods "appservice-ext-k8se-img-cacher-" is forbidden: unable to validate against any security context constraint: [spec.volumes[0]: Invalid value: "hostPath": hostPath volumes are not allowed to be used]

# 0s Warning FailedCreate daemonset/appservice-ext-k8se-log-processor Error creating: pods "appservice-ext-k8se-log-processor-" is forbidden: unable to validate against any security context constraint: [spec.volumes[0]: Invalid value: "hostPath": hostPath volumes are not allowed to be used spec.volumes[1]: Invalid value: "hostPath": hostPath volumes are not allowed to be used]

# ...

It’s important to fix these issues quickly; otherwise the installation will time out and you will need to delete and recreate the extension.

Fixing appservice-ext-k8se-envoy

The first couple of issues are perhaps the most important ones to fix if we want to complete the installation.

First of all, the “appservice-ext-k8se-envoy” pods do not start correctly. All of them end up with a “CrashLoopBackOff” status, which means that each pod starts, crashes, is restarted, and crashes again. Checking the logs revealed the following:

oc logs appservice-ext-k8se-envoy-78485f7fb9-44mq8 --namespace appservice-ns

# /startup.sh: line 9: can't create /etc/envoy/envoy.yaml: Permission denied

After some searching I found that the Envoy docs state the following about Envoy container images:

“By default, the Envoy Docker image will start as the root user but will switch to the envoy user created at build time, in the Docker ENTRYPOINT.”

So, based on that description, moving these pods from the “restricted” SCC to “anyuid” should do the trick. However, the “appservice-ext-k8se-envoy” deployment does not create a service account for its pods. This presents me with a grave moral dilemma: I could modify the deployment, add a service account and wire up the SCC to that service account… but I believe I would risk losing those changes when the “Azure Arc” “config-agent” upgrades K8SE to a newer version.

I decided to add the “anyuid” SCC to the “default” service account instead. This sorts out any remaining issues with containers that use the “default” service account, implicitly or explicitly, and that change their UIDs.

oc adm policy add-scc-to-user anyuid --serviceaccount default --namespace appservice-ns

# clusterrole.rbac.authorization.k8s.io/system:openshift:scc:anyuid added: "default"

# Delete the pods, so new ones get made.

oc delete pods $(oc get pod --selector project=appservice-ext-k8se-envoy --namespace appservice-ns -o jsonpath="{.items[*].metadata.name}") --namespace appservice-ns

# pod "appservice-ext-k8se-envoy-78485f7fb9-44mq8" deleted

# pod "appservice-ext-k8se-envoy-78485f7fb9-9klkv" deleted

# pod "appservice-ext-k8se-envoy-78485f7fb9-sdk6p" deleted
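To confirm which SCC the recreated envoy pods were admitted under, you can look at the “openshift.io/scc” annotation that OpenShift adds to admitted pods. A minimal sketch, assuming the annotation appears on a single line in the “oc get pod <name> -o yaml” output; “scc_from_pod_yaml” is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read a pod manifest (YAML) on stdin and print the SCC
# recorded in its "openshift.io/scc" annotation, if present.
scc_from_pod_yaml() {
  awk '$1 == "openshift.io/scc:" { print $2; exit }'
}
```

For example, “oc get pod <envoy-pod-name> --namespace appservice-ns -o yaml | scc_from_pod_yaml” should now report “anyuid”, although admission ultimately picks from every SCC the pod is allowed to use.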

Second issue: the envoy service is created with type “LoadBalancer”. CRC has nothing that can hand out an external IP address for it (hence the “Service does not have load balancer ingress IP address” message in the extension-manager logs), so the K8SE installation stays “Pending” and eventually fails with a time-out. There’s a quick, but hacky, fix we can apply to cheat the system: temporarily change the service type to “ClusterIP”. Normally this service is used to access the cluster from the outside, but we will end up using an OpenShift route instead.

💡 Alternatively, you could take a look at projects like MetalLB or the keepalived operator; both allow you to deploy services of type “LoadBalancer” even when you’re not running the cluster in a cloud environment.

oc patch svc appservice-ext-k8se-envoy --patch '{"spec":{"type":"ClusterIP"}}' --namespace appservice-ns

# service/appservice-ext-k8se-envoy patched

Fixing appservice-ext-k8se-build-service

The next couple of issues are related to the “appservice-ext-k8se-build-service”; again, it’s nothing we can’t fix. The first issue is related to the privileged container setting.

oc describe replicaset appservice-ext-k8se-build-service --namespace appservice-ns

# Message

# -------

# Error creating: pods "appservice-ext-k8se-build-service-76fc77dc64-" is forbidden:

# unable to validate against any security context constraint:

# [spec.containers[0].securityContext.privileged: Invalid value: true: Privileged containers are not allowed]

We know what this is about: it’s the SCC issue that we discussed earlier.

oc adm policy add-scc-to-user privileged --serviceaccount appservice-ext-k8se-build-service --namespace appservice-ns

# clusterrole.rbac.authorization.k8s.io/system:openshift:scc:privileged added: "appservice-ext-k8se-build-service"

oc delete pods $(oc get pod --selector project=appservice-ext-k8se-build-service --namespace appservice-ns -o jsonpath="{.items[*].metadata.name}") --namespace appservice-ns

# Pods deleted and recreated
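The “oc get ... -o jsonpath” lookup above is not strictly necessary, because “oc delete” accepts a label selector directly. A sketch of a small wrapper; “recreate_pods” is a hypothetical name. Note that when a ReplicaSet never managed to create a pod at all, there is nothing to delete, and the controller simply retries with back-off once the SCC is in place.

```bash
#!/usr/bin/env bash
# Hypothetical helper: delete every pod matching a label selector so that its
# controller recreates it under the newly granted SCC.
# Arguments: <label-selector> <namespace>
recreate_pods() {
  local selector="$1" ns="$2"
  oc delete pods --selector "$selector" --namespace "$ns"
}
```

For example, “recreate_pods project=appservice-ext-k8se-build-service appservice-ns”.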

The second issue I bumped into was related to storage, more specifically there was a problem with the PersistentVolumeClaim.

oc describe pods --selector project=appservice-ext-k8se-build-service --namespace appservice-ns

# Message

# -------

# 0/1 nodes are available: 1 pod has unbound immediate PersistentVolumeClaims.

The K8SE setup process deploys a StorageClass with “provisioner: kubernetes.io/azure-file”, which will not work in our local CRC environment, because there is no Azure cloud provider to provision an Azure Files share (hence the “Failed to get Azure Cloud Provider” event earlier). On top of this, CRC does not support dynamic provisioning out of the box (although it can be added). As a temporary fix, we can manually create the following PersistentVolume, which references the StorageClass by name so that the pending claim can bind to it. Save the object to a YAML file and create it with “oc create -f <file>”; PersistentVolumes are cluster-scoped, so no namespace is needed.

apiVersion: v1
kind: PersistentVolume
metadata:
  name: appservice-ext-k8se-build-service
spec:
  storageClassName: appservice-ext-k8se-build-service # 👈 Must match "buildService.storageClassName" from "az k8s-extension create"
  capacity:
    storage: 100Gi
  hostPath:
    path: /tmp/pv-data/appservice-ext-k8se-build-service # Make sure the path starts in /tmp
    type: '' # Empty string (the default): no checks are performed before mounting the hostPath volume
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Delete # The hostPath deleter only supports /tmp paths
  volumeMode: Filesystem
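Once the PersistentVolume exists, the build service’s claim should move from “Pending” to “Bound”. A sketch of a quick check; “pvc_phase” and “pvc_is_bound” are hypothetical helpers whose default arguments are the claim and namespace names used in this post.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: read a PersistentVolumeClaim's phase and check for "Bound".
# Arguments (optional): <claim-name> <namespace>
pvc_phase() {
  local pvc="${1:-appservice-ext-k8se-build-service}" ns="${2:-appservice-ns}"
  oc get pvc "$pvc" --namespace "$ns" -o jsonpath='{.status.phase}'
}

pvc_is_bound() {
  [ "$(pvc_phase "$@")" = "Bound" ]
}
```

“pvc_is_bound && echo "claim bound"” reports success once the claim has picked up the volume. If it stays “Pending”, compare the claim’s requested size and access mode with the volume’s.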

Fixing the img-cacher and log-processor DaemonSets

There are two more issues to fix, both related to SCCs: both DaemonSets try to use a file or directory on the host through a “hostPath” volume.

It seems that the “appservice-ext-k8se-img-cacher” DaemonSet gets provisioned without a service account, so we need to grant the “default” service account another SCC. We will add the “hostmount-anyuid” SCC to the “default” service account.

oc describe daemonsets appservice-ext-k8se-img-cacher -n appservice-ns

# Events:

# Message

# -------

# Error creating: pods "appservice-ext-k8se-img-cacher-" is forbidden:

# unable to validate against any security context constraint:

# [spec.volumes[0]: Invalid value: "hostPath": hostPath volumes are not allowed to be used spec.volumes[0]: Invalid value: "hostPath": hostPath volumes are not allowed to be used]

oc adm policy add-scc-to-user hostmount-anyuid --serviceaccount default --namespace appservice-ns

# clusterrole.rbac.authorization.k8s.io/system:openshift:scc:hostmount-anyuid added: "default"

It’s the same process for the “appservice-ext-k8se-log-processor” DaemonSet; however, this one does get provisioned with its own service account, so we will add the “hostmount-anyuid” SCC to the “appservice-ext-k8se-log-processor” service account.

```bash
oc describe daemonsets appservice-ext-k8se-log-processor -n appservice-ns
# Events:
# Message
# -------
# Error creating: pods "appservice-ext-k8se-log-processor-" is forbidden:
# unable to validate against any security context constraint:
# [spec.volumes[0]: Invalid value: "hostPath": hostPath volumes are not allowed to be used spec.volumes[0]: Invalid value: "hostPath": hostPath volumes are not allowed to be used]

oc adm policy add-scc-to-user hostmount-anyuid --serviceaccount appservice-ext-k8se-log-processor --namespace appservice-ns
# clusterrole.rbac.authorization.k8s.io/system:openshift:scc:hostmount-anyuid added: "appservice-ext-k8se-log-processor"
```
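Since the same `oc adm policy add-scc-to-user` call is repeated for each service account, both grants can be scripted in one go. The sketch below is hypothetical: `grant_scc` and the `OC` variable are my own additions, and the override only exists so that setting `OC=echo` prints the commands instead of running them.

```bash
# Hypothetical helper: grants one SCC to several service accounts in a namespace.
# Set OC=echo for a dry run that prints each command instead of executing it.
grant_scc() {
  local scc="$1" namespace="$2"
  shift 2
  local sa
  for sa in "$@"; do
    # Stop at the first failing grant so errors are not silently skipped
    "${OC:-oc}" adm policy add-scc-to-user "$scc" \
      --serviceaccount "$sa" --namespace "$namespace" || return 1
  done
}
```

For this cluster that would be `grant_scc hostmount-anyuid appservice-ns default appservice-ext-k8se-log-processor`, after which `oc rollout status daemonset/appservice-ext-k8se-img-cacher -n appservice-ns` reports when the DaemonSet pods have been scheduled.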

That fixes all the issues, as far as I was able to tell.

K8SE: Adding the custom location

We can now proceed with the setup of “K8SE”.

Custom locations provide a way for tenant administrators to use their “Azure Arc enabled Kubernetes” clusters as target locations for deploying instances of Azure services.

```bash
customLocationName="tvl-location"

connectedClusterId=$(az connectedk8s show \
  --resource-group $resourceGroupName \
  --name $clusterName \
  --query id --output tsv)

az customlocation create \
  --resource-group $resourceGroupName \
  --name $customLocationName \
  --host-resource-id $connectedClusterId \
  --namespace $appsNamespace \
  --cluster-extension-ids $extensionId

customLocationId=$(az customlocation show \
  --resource-group $resourceGroupName \
  --name $customLocationName \
  --query id \
  --output tsv)
```

K8SE: Adding the Kubernetes App Service

Now we go back to following the instructions listed in the Microsoft docs. With the “appservice-kube” Azure CLI extension we can create an App Service Kubernetes environment, after which we do what we typically do: create an App Service plan and deploy a web app.

```bash
# staticIp=$(crc ip)

az appservice kube create \
  --resource-group $resourceGroupName \
  --name $clusterName \
  --custom-location $customLocationId \
  --static-ip $staticIp

appservicekubeId=$(az appservice kube show \
  --resource-group $resourceGroupName \
  --name $clusterName \
  --query='id' \
  -o tsv)
```

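```bash
# --custom waits until the JMESPath condition is true, i.e. until provisioning has succeeded
az resource wait \
  --ids $appservicekubeId \
  --custom "properties.provisioningState=='Succeeded'" \
  --api-version "2021-01-15"
```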

```bash
appServicePlan="crc-premium-plan"

az appservice plan create \
  --resource-group $resourceGroupName \
  --name $appServicePlan \
  --custom-location $customLocationId \
  --per-site-scaling --is-linux --sku K1

az webapp create \
  --plan $appServicePlan \
  --resource-group $resourceGroupName \
  --name "hello-crc" \
  --custom-location $customLocationId \
  --runtime 'DOTNET|5.0'
```
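Provisioning the environment, the plan and the web app can take a few minutes. If you would rather poll a CLI query yourself than rely on “az resource wait”, a small generic retry loop is enough. `wait_for_output` is a hypothetical helper, and the `provisioningState` query in the usage example is an assumption about the shape of the “az appservice kube show” output, so check it against your own output first.

```bash
# Hypothetical helper: reruns a command until its output equals the expected
# value, sleeping between attempts. Returns 1 if the value never matches.
wait_for_output() {
  local expected="$1" attempts="$2" delay="$3"
  shift 3
  local i value
  for ((i = 1; i <= attempts; i++)); do
    value="$("$@")"
    if [ "$value" = "$expected" ]; then
      return 0
    fi
    # No need to sleep after the final attempt
    if ((i < attempts)); then
      sleep "$delay"
    fi
  done
  echo "gave up after ${attempts} attempts, last value: '${value}'" >&2
  return 1
}
```

For example, `wait_for_output Succeeded 30 10 az appservice kube show --resource-group $resourceGroupName --name $clusterName --query provisioningState --output tsv` checks every 10 seconds for up to five minutes.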

Every time a new web app or slot is deployed, a new deployment is created, and each deployment runs its pods under a new service account (for our web app, that account is called “hello-crc”).

```bash
oc get pods --namespace appservice-ns
# NAME                         READY   STATUS             RESTARTS   AGE
# ...
# hello-crc-77b696bc7b-zddtc   0/1     CrashLoopBackOff   2          110s
```

You know what to do by now: we need to add an SCC to every one of those service accounts. I use the “hostmount-anyuid” SCC, unless specific App Service features require me to switch to an SCC with additional privileges.

```bash
oc adm policy add-scc-to-user hostmount-anyuid --serviceaccount hello-crc --namespace appservice-ns
# clusterrole.rbac.authorization.k8s.io/system:openshift:scc:hostmount-anyuid added: "hello-crc"
```
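Rather than assuming the service account always carries the web app's name, the name can be read from the Deployment that backs the app. This sketch assumes that Deployment is named after the web app (as the “hello-crc-77b696bc7b-zddtc” pod name suggests); `grant_scc_for_app` and the `OC` override are hypothetical, the latter only there so a stub can stand in for oc during a dry run.

```bash
# Hypothetical helper: looks up the service account used by an app's Deployment
# and grants it an SCC. Assumes the Deployment is named after the web app.
grant_scc_for_app() {
  local app="$1" namespace="$2" scc="${3:-hostmount-anyuid}"
  local oc="${OC:-oc}" sa
  # Read the service account from the pod template of the Deployment
  sa="$("$oc" get deployment "$app" --namespace "$namespace" \
    -o jsonpath='{.spec.template.spec.serviceAccountName}')" || return 1
  if [ -z "$sa" ]; then
    echo "no service account found for deployment ${app}" >&2
    return 1
  fi
  "$oc" adm policy add-scc-to-user "$scc" --serviceaccount "$sa" --namespace "$namespace"
}
```

After each new `az webapp create`, running `grant_scc_for_app <app-name> appservice-ns` should get the crash-looping pod past its SCC validation.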

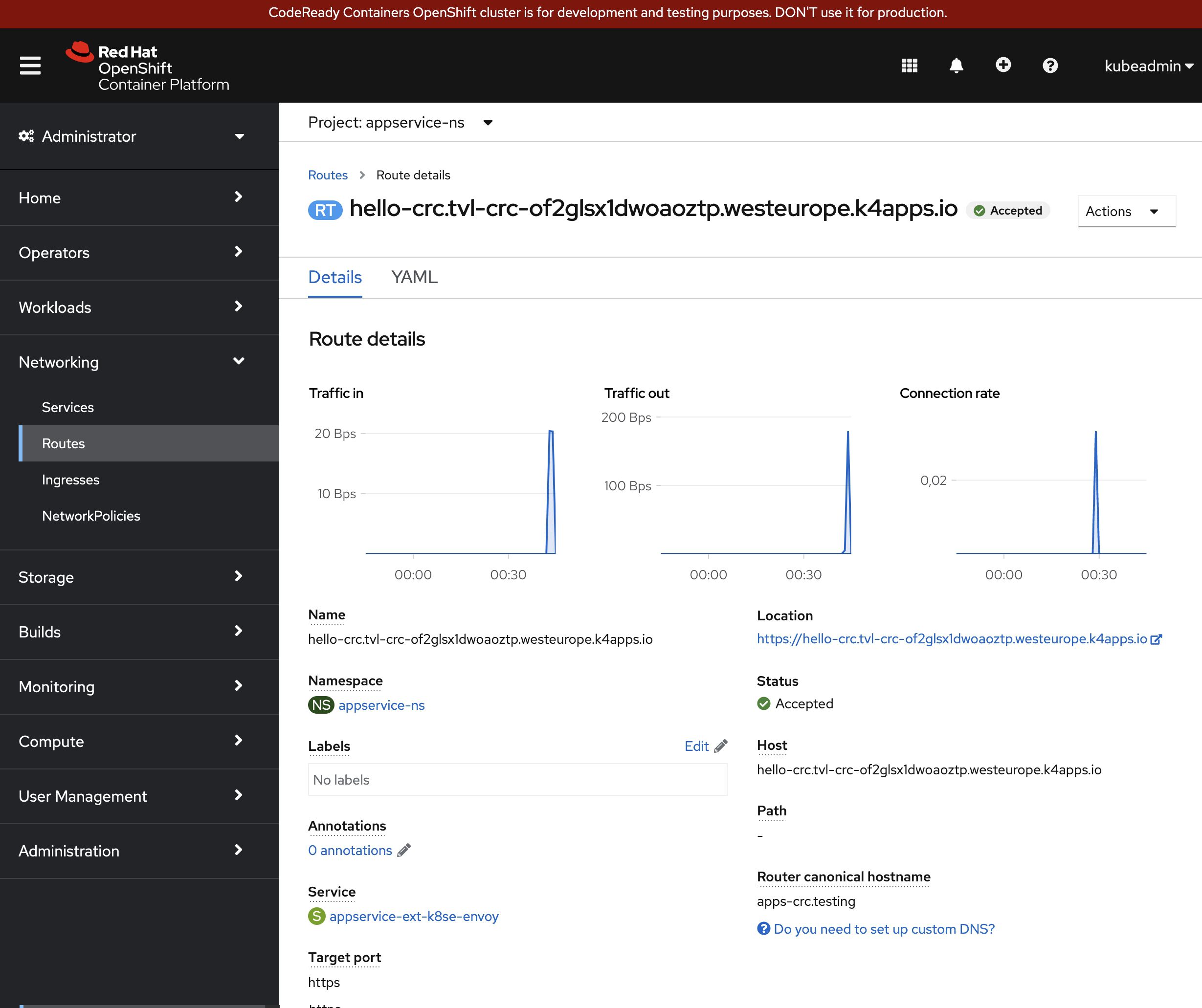

The last step is to make the web application reachable. First, we need to get its hostname, either from the Azure portal or with the following command:

```bash
az webapp show --name hello-crc --resource-group $resourceGroupName --query defaultHostName -o tsv
# hello-crc.tvl-crc-of2glsx1dwoaoztp.westeurope.k4apps.io
```

All we need now is a route into our cluster. Use “oc create --namespace appservice-ns” to create the following route:

```yaml
apiVersion: route.openshift.io/v1
kind: Route
metadata:
  name: hello-crc.tvl-crc-of2glsx1dwoaoztp.westeurope.k4apps.io
  namespace: appservice-ns
spec:
  host: hello-crc.tvl-crc-of2glsx1dwoaoztp.westeurope.k4apps.io
  to:
    kind: Service
    name: appservice-ext-k8se-envoy
    weight: 100
  port:
    targetPort: https
  tls:
    termination: passthrough # TLS termination is handled by Envoy
    insecureEdgeTerminationPolicy: Redirect # Redirect HTTP requests to HTTPS
  wildcardPolicy: None
```
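Every new web app needs a route like this one, with only the hostname changing. A hypothetical Bash helper (`render_app_route` is my own name) can print the same passthrough Route for any hostname returned by `az webapp show`; the service name, port and TLS settings are copied from the manifest above.

```bash
# Hypothetical helper: prints a passthrough Route for an App Service hostname,
# pointing at the K8SE Envoy service (TLS is terminated by Envoy, not the router).
render_app_route() {
  local host="$1" namespace="${2:-appservice-ns}"
  cat <<EOF
apiVersion: route.openshift.io/v1
kind: Route
metadata:
  name: ${host}
  namespace: ${namespace}
spec:
  host: ${host}
  to:
    kind: Service
    name: appservice-ext-k8se-envoy
    weight: 100
  port:
    targetPort: https
  tls:
    termination: passthrough
    insecureEdgeTerminationPolicy: Redirect
  wildcardPolicy: None
EOF
}
```

Combined with the earlier query: `render_app_route "$(az webapp show --name hello-crc --resource-group $resourceGroupName --query defaultHostName -o tsv)" | oc create -f -`.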

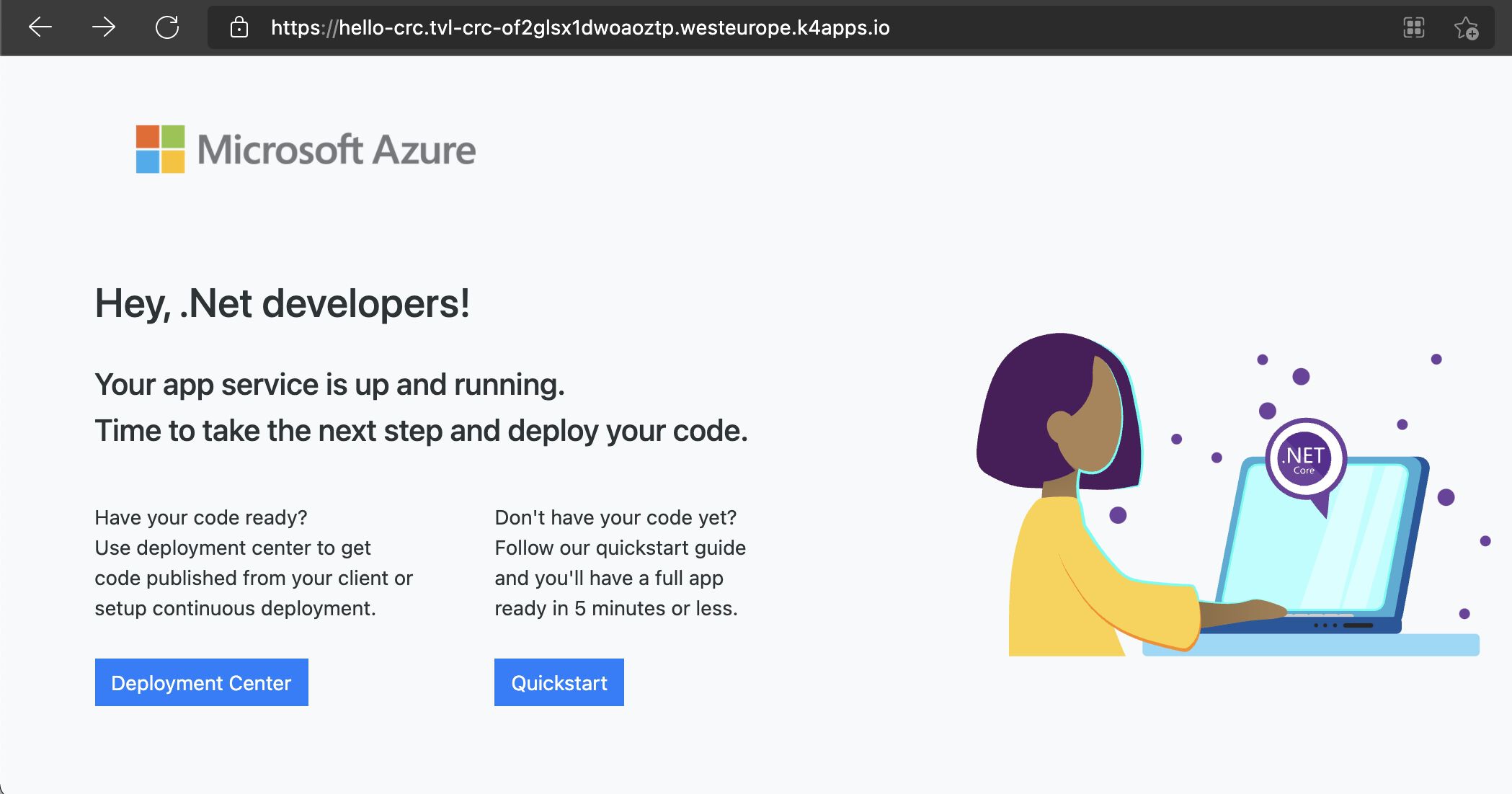

With the route created, we should be able to browse to our app. It’s important to understand that even though “hello-crc.tvl-crc-of2glsx1dwoaoztp.westeurope.k4apps.io” is managed by Azure, it should resolve to your “staticIp” (127.0.0.1 in my case).

You should be able to access the App Service.
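Because the hostname is managed by Azure but has to point at your staticIp, a quick resolution check before opening the browser can save some head-scratching. This is a sketch assuming a glibc-based system with `getent`; `resolves_to` is a hypothetical helper, and the `GETENT` override only exists so a stub can be used for testing.

```bash
# Hypothetical helper: succeeds if the host resolves (through NSS, so /etc/hosts
# and DNS) to the expected IPv4 address.
resolves_to() {
  local host="$1" expected="$2"
  # getent ahostsv4 prints one "address socktype name" line per result
  "${GETENT:-getent}" ahostsv4 "$host" | awk '{ print $1 }' | sort -u | grep -qxF "$expected"
}
```

For example, `resolves_to hello-crc.tvl-crc-of2glsx1dwoaoztp.westeurope.k4apps.io "$staticIp" || echo "DNS does not point at the cluster"`.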

Kudu works as well, hosted at “hello-crc.scm.tvl-crc-of2glsx1dwoaoztp.westeurope.k4apps.io”, though it’s a bit tricky to get started. Sometimes it works immediately and other times it doesn’t; I can’t quite pinpoint what exactly is happening. I can say that “appservice-ext-k8se-build-service” handles all the requests coming into Kudu, but there seems to be a bit of delay now and then. I was a bit surprised by this, as I had initially assumed that an instance of Kudu would run alongside the web app itself, as a sidecar container.

Conclusion

This was a fun experiment, for sure. I’d encourage you to try out some of the other supported features during the public preview of K8SE.

We are now, theoretically, able to run Azure App Services anywhere: on-premises, on edge devices (provided these use the x86-64 architecture, not ARM64) and on other cloud vendors. This is great news, as it opens up a ton of additional hosting scenarios and allows us to tap into all sorts of App Service features. I think it’s got the potential to be an engaging value proposition!

I’ve compiled a little wishlist of things that I hope will be added as development progresses:

- The full list of available “--configuration-settings” for K8SE listed in the official Microsoft documentation.

- I’d love it if Microsoft open-sourced the Helm charts for K8SE on GitHub. (I could not find them anywhere, at least.)

Even though I deployed K8SE to an untested version of OpenShift, I suspect that “az k8s-extension create” is not entirely complete yet, since I had to do a bit of tinkering to get things working. It left me wondering whether I’d also have these issues with a self-hosted version of OpenShift Container Platform on-premises. Perhaps the Helm chart could deploy a custom SCC when it detects that you’re deploying to an OpenShift cluster?

In a nutshell, here is a list of issues I encountered:

- I did end up having lots of issues related to SCCs, which are an OpenShift-specific feature, but those were fixed quickly:
  - Privileged containers.
  - Containers switching UIDs.
  - Containers mounting “HostPath” volumes.

- The “appservice-ext-k8se-envoy” service is “type: LoadBalancer” and I had to patch it to “type: ClusterIP”.
  - Had I not done this, the K8SE extension installation would have remained pending until it timed out.

- Dynamic volume provisioning is not supported out of the box on CRC, so I had to create a persistent volume manually and assign it to the storage class.

“App Services on Azure Arc” is one of many extensions that you can deploy to your “Azure Arc enabled Kubernetes” cluster; feel free to check out the complete list of available extensions in the Microsoft documentation.
