Chaos Engineering on Azure


📣 Dive into the code samples from this blog on GitHub!

For me, the 28th of July started much like every work-from-home morning since the second quarter of 2020: a cup of coffee, reading through the latest Azure blog posts that came in overnight, and trying my hardest to fend off the FOMO.

One of those posts was titled “Advancing resilience through chaos engineering and fault injection”. It gives the reader a high-level overview of chaos engineering and fault injection, and of how Microsoft applies these principles to the products it ships.

But what exactly does chaos engineering entail? The definition that appeared in a 2016 IEEE paper, written by employees at Netflix, is as follows:

Chaos engineering involves experimenting on a distributed system to build confidence in its capability to withstand turbulent conditions in production.

The claim here is that by proactively, cautiously and purposely breaking things in a distributed system, we can identify weaknesses in that system before they cause failures in production. It’s important to realize that the conditions for these failures can occur in any layer of our system: the application, cache, database, network or hardware layer. They range from an unexpected retry storm, erroneous configuration parameters and hardware issues to a downstream dependency going offline.

Suppose we’re running lots of different microservices: failures can become incredibly pernicious once they begin to cascade. When we find, highlight and fix potential failures before they occur in production, we make our system more resilient. We also build more confidence in our own system, since we gain a better understanding of what it is capable of when things go terribly south (or when we purposely throw something at it 😇).

By running these types of tests over and over again against our infrastructure, we can turn a potentially catastrophic event into a non-issue.

(Advanced) Principles of Chaos Engineering

Even though the term “Chaos” might give you the impression that it involves a great deal of randomness, this is not entirely what we’re after. We aren’t looking for the piece of code that is broken either. We’re attempting to learn how the entirety of the system performs and what type of previously unknown behaviour emerges from a controlled experiment.

How do we go about doing this?

Build a hypothesis around steady-state behaviour

We define the system’s normal behaviour and form a hypothesis: when we do something to the system, this is how it will behave. Again, chaos engineering’s goal is not to prove how something works internally, but rather whether it works. For example: if a particular back-end service goes offline whilst a client is sending a message, the client will store the message in a local cache and retry in a few minutes.
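To make that example hypothesis concrete, here is a minimal Python sketch of the client behaviour it describes. FakeBackend, CachingClient and retry_cached() are hypothetical names. The "retry in a few minutes" timer is left out, so retry_cached() is called by hand.

```python
from collections import deque


class BackendOffline(Exception):
    """Raised by the fake back-end while it is 'offline'."""


class FakeBackend:
    """Hypothetical stand-in for the back-end service; `online` is the fault switch."""

    def __init__(self):
        self.online = True
        self.received = []

    def send(self, message):
        if not self.online:
            raise BackendOffline("back-end is unreachable")
        self.received.append(message)


class CachingClient:
    """Hypothetical client that caches messages locally while the back-end is down."""

    def __init__(self, backend):
        self.backend = backend
        self.cache = deque()

    def send(self, message):
        try:
            self.backend.send(message)
        except BackendOffline:
            # The hypothesis: nothing is lost, the message is cached instead.
            self.cache.append(message)

    def retry_cached(self):
        # A real client would call this on a timer ("retry in a few minutes").
        while self.cache:
            try:
                self.backend.send(self.cache[0])
            except BackendOffline:
                return  # still offline, keep the remaining messages cached
            self.cache.popleft()


backend = FakeBackend()
client = CachingClient(backend)
backend.online = False   # inject the fault
client.send("hello")
backend.online = True    # the dependency recovers
client.retry_cached()
print(backend.received)  # ['hello']
```

The experiment asks whether the system keeps its promise ("no message is lost") and ignores how the cache works internally.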

Capturing metrics that are of interest to us can greatly help us in this step. These metrics can be anything you deem valuable and it doesn’t need to be a technical metric (CPU %) per se, it can also be a business metric such as sales per minute (or stream-starts-per-second in Netflix’s case).
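One way to turn such a metric into a testable hypothesis is to pair a baseline with a tolerance band. The Python sketch below assumes a metric sampled once per minute (for example, orders per minute) and a ±10% band. Both are illustrative choices, not recommendations.

```python
from statistics import mean


def baseline(samples):
    """Average of metric samples collected while the system was known to be healthy."""
    if not samples:
        raise ValueError("need at least one baseline sample")
    return mean(samples)


def steady_state_holds(current, base, tolerance=0.10):
    """True if `current` lies within +/- `tolerance` (a fraction) of `base`."""
    return abs(current - base) <= tolerance * base


# Hypothetical orders-per-minute samples from a healthy period.
base = baseline([120, 118, 125, 121])
print(steady_state_holds(115, base))  # True
print(steady_state_holds(90, base))   # False
```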

It can also be particularly useful to involve many people with different specializations in this process. If you’re working at a company where individual teams own a part of the solution, a UI builder from the UI team will offer different insights than a database engineer from the DB team. By having different experiences and ideas meet, you might conjure up some very interesting experiments or uncover insights about the system that weren’t visible to you before.

Vary real-world events

I have occasionally written a service in which I thought every possible bad scenario had been covered, only to be caught out by reality and end up with corner cases I did not expect. I remember a DNS configuration mishap that also affected Azure while my fiancée and I were at Disneyland: all of the pods on one of my AKS clusters stopped working until I rebooted the VMs.

I did not expect that to happen.

When coming up with chaos experiments, try to draw inspiration from all sorts of events that could take place. Have a look at what kinds of outages have historically occurred during the system’s lifecycle. If we inject similar types of events into the system, will the steady-state be disrupted or remain the same? This can be something relatively simple like shutting down a VM, synthetically increasing the load on a server or even breaking DNS on a large scale while you’re enjoying an overly expensive meal at a French amusement park.

It can be any type of disruptive event, since our experiment aims to disrupt the known steady-state. If you cannot inject an event, try to simulate it instead. For instance, assume an entire Azure region has gone down and take action accordingly. This can be something as simple as disabling an endpoint in Azure Traffic Manager and watching the traffic get redirected to another region. Always take into account the risks of running the experiment, including the risk of harming the system.

Run experiments in production

We should strive to design experiments that do not disrupt the customer; if we know that an experiment is going to impact a customer, there is no need to run it. Running experiments in production sounds incredibly daunting at first, and you may be inclined to sample traffic from production and replay it in a sandboxed environment. Doing so, however, builds confidence in your sandboxed environment rather than in your production environment. That is why it is recommended to run these experiments in production: it gives you a much higher level of confidence. You do have to start somewhere, though, so it might be more sensible (and easier to sell to your management) to start off in a sandboxed environment with synthetic workloads.

When you’re running these experiments, make sure that you “minimize the blast radius” and have a means of stopping them in their tracks if something goes wrong and cascades further.
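Here is a minimal Python sketch of both ideas. The fault is injected into roughly a fixed fraction of requests (the blast radius), and the run stops once the error rate passes a budget (the abort condition). The handle(request, inject_fault) callback, the 5% radius, the 2% error budget and the 20-sample minimum are all hypothetical values chosen for illustration.

```python
import random


def run_with_guardrail(requests, handle, blast_radius=0.05,
                       max_error_rate=0.02, min_samples=20, seed=None):
    """Inject a fault into roughly `blast_radius` of `requests` and abort early.

    `handle(request, inject_fault)` is a hypothetical callback that returns
    True when the request succeeded. Returns (aborted, processed_count).
    """
    rng = random.Random(seed)
    errors = 0
    for processed, request in enumerate(requests, start=1):
        inject_fault = rng.random() < blast_radius
        if not handle(request, inject_fault):
            errors += 1
        # Only judge the error rate once we have a minimum number of samples.
        if processed >= min_samples and errors / processed > max_error_rate:
            return True, processed  # stop the experiment in its tracks
    return False, len(requests)


# A resilient system absorbs the injected faults (e.g. via retries or failover).
print(run_with_guardrail(range(100), lambda req, fault: True))   # (False, 100)
# A fragile system fails every request: the guard-rail trips after 20.
print(run_with_guardrail(range(100), lambda req, fault: False))  # (True, 20)
```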

Automate experiments to run continuously

Lastly, when we work at companies that employ continuous delivery practices, we should strive to integrate these experiments into our CI/CD systems and run them automatically. Doing so keeps the experiments relevant: if a bug is identified and fixed, the experiment will catch it should it regress at a later stage of development.

Whether to run experiments on a schedule or continuously depends on the context. I doubt it is wise to simulate a region-wide failure continuously, whereas injecting some faulty requests into a system might be more feasible.

Running an experiment in theory

Based on those principles, we can whip up an experiment like so:

- Define a steady-state hypothesis;
  - When a specific event happens, the system continues to churn out measurable outputs. We attempt to verify that the system functions as it should, without trying to validate how it works.
- Hypothesise that the aforementioned steady state of an experimental group, as compared to a control group, will continue;
  - We need to split our subjects up into two groups. The control group is kept separate from the rest of the experiment and we do not introduce any failures into it. Into the experimental group, we introduce a failure!
- Introduce events that mimic real-world events to the experimental group;
  - If we inject different types of events into the system, will the steady-state be disrupted or remain the same?
- Attempt to disprove the hypothesis;
  - Compare the steady-state of the experimental group to the control group’s state. The fewer the differences, the more certain we can be that the system is working as intended. If the results differ, you’ve disproved your hypothesis and should share this new knowledge with the owner of the affected part of the system.

The harder it is to disrupt the steady-state, the more confidence we will gain in the behaviour of the system. If a weakness is uncovered, we now have a target for improvement before that behaviour manifests in the system at large.
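The comparison step can be sketched in a few lines of Python. Here the steady-state metric is assumed to be the fraction of successful requests per group, and a gap of more than one percentage point counts as "different". Both are illustrative assumptions.

```python
def success_rate(outcomes):
    """Fraction of successful requests (True values) in a group."""
    if not outcomes:
        raise ValueError("group has no observations")
    return sum(outcomes) / len(outcomes)


def hypothesis_disproved(control, experimental, max_gap=0.01):
    """True if the two groups' success rates differ by more than `max_gap`."""
    return abs(success_rate(control) - success_rate(experimental)) > max_gap


control = [True] * 99 + [False]              # no fault injected
experimental_ok = [True] * 99 + [False]      # fault injected, same outcome
experimental_bad = [True] * 90 + [False] * 10
print(hypothesis_disproved(control, experimental_ok))   # False
print(hypothesis_disproved(control, experimental_bad))  # True
```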

A simple example

In this example scenario, we have a web application that is hosted in two regions, which is theoretically a good idea when we want to ensure a higher level of availability. We want to be certain that our setup works as intended: when the App Service in region A goes down, the user should fail over to region B.

If you do not want to sully your local environment, I have prepared a Dockerfile that takes care of most of the installs that are needed to follow along. Better yet, when you open the repository with Visual Studio Code, you will get a pop-up to launch a development container.

This allows you to use the full VS Code feature set from within a containerized environment. The repo is mounted inside the container, but you may first need to share the folder containing the repo on your host via your Docker settings. Have a look at the installation procedure; it only takes a few minutes to set up.

You can find the example code over on GitHub.

Chaos Toolkit

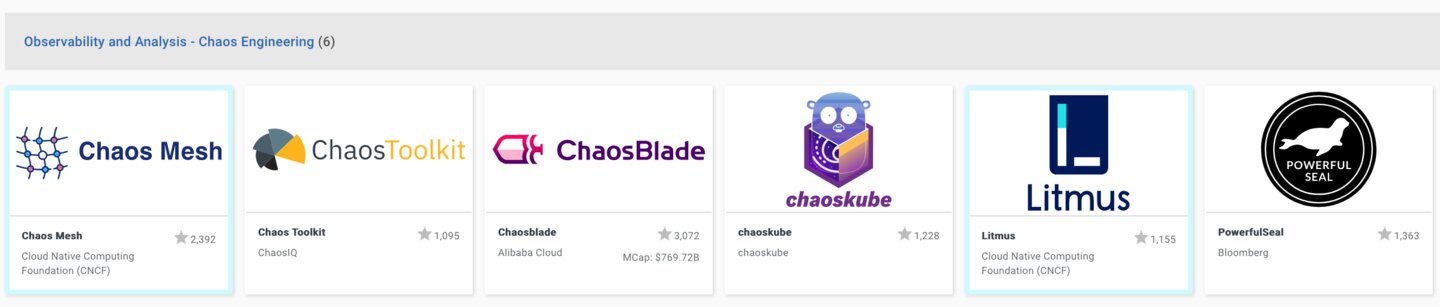

Next, let’s set up a basic chaos experiment. For that, we need a framework or tool. Luckily for us, we have a slew of choices, but I decided to narrow them down by looking at the open-source tools on the CNCF interactive landscape page.

Chaos Toolkit is an open-source toolkit for performing chaos engineering experiments, and it aims to be the simplest and easiest way to get started with chaos engineering. I’ve worked with it for a while now and I’ve found this to be the case. I think it fits quite well with the other tools in my arsenal as an Azure consultant: ARM templates, PowerShell, etc.

Chaos Toolkit uses a declarative, JSON-based format for defining your experiments. If you’re comfortable with ARM templates, you will feel right at home. Have a look at the following template; I will explain what it all does in a moment, but for now it should be fairly self-explanatory.

```json
{
  "version": "1.0.0",
  "title": "What is the impact of an expired certificate on our application chain?",
  "description": "If a certificate expires, we should gracefully deal with the issue.",
  "tags": ["tls"],
  "steady-state-hypothesis": {
    "title": "Application responds",
    "probes": []
  },
  "method": [],
  "rollbacks": []
}
```

Chaos Toolkit has its fair share of additional “drivers”, plugins written in Python, to connect to various cloud vendors, applications and environments. You are not limited to these drivers, and you do not need Python skills to extend Chaos Toolkit: you can invoke just about any binary that is available on your system as part of your experiment, then check its exit code or match its output with a regular expression or JSONPath.
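To illustrate the idea behind such a process-based probe, here is a small Python sketch. It runs a binary, compares its exit code with an expected value and, optionally, matches its standard output against a regular expression. This is a hypothetical helper showing the concept. It is not Chaos Toolkit’s process provider, which you configure in the experiment JSON instead.

```python
import re
import subprocess
import sys


def process_probe(args, expected_exit_code=0, stdout_pattern=None, timeout=30.0):
    """Run a binary and check its exit code and, optionally, its output."""
    completed = subprocess.run(args, capture_output=True, text=True, timeout=timeout)
    if completed.returncode != expected_exit_code:
        return False
    # Optionally require the standard output to match a regular expression.
    return stdout_pattern is None or re.search(stdout_pattern, completed.stdout) is not None


# The current Python interpreter stands in for "any binary on your system".
print(process_probe([sys.executable, "-c", "print('Hello from Region B')"],
                    stdout_pattern=r"Region [AB]"))  # True
```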

Another excellent feature is that Chaos Toolkit is automatable since it is “just” a Python application. That makes it relatively easy to run this from a container image, inside an Azure DevOps pipeline (or your CI/CD chain of choice) on a scheduled basis.
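In a pipeline, it can help to lint experiment files before handing them to chaos run. The Python sketch below uses hypothetical house rules of my own: an experiment needs a steady-state hypothesis with at least one probe, and a method containing actions needs rollbacks. These rules are assumptions, not Chaos Toolkit’s built-in validation.

```python
import json


def lint_experiment(experiment):
    """Return a list of problems found in an experiment loaded as a dict.

    These rules are hypothetical house rules, not Chaos Toolkit's own validation.
    """
    problems = []
    for key in ("title", "description", "method"):
        if key not in experiment:
            problems.append(f"missing '{key}'")
    hypothesis = experiment.get("steady-state-hypothesis", {})
    if not hypothesis.get("probes"):
        problems.append("steady-state hypothesis has no probes")
    actions = [a for a in experiment.get("method", []) if a.get("type") == "action"]
    if actions and not experiment.get("rollbacks"):
        problems.append("method has actions but no rollbacks")
    return problems


def lint_file(path):
    """Load an experiment file (e.g. experiment.json) and lint it."""
    with open(path, encoding="utf-8") as fh:
        return lint_experiment(json.load(fh))
```

A pipeline step could call lint_file("experiment.json") and fail when the returned list is not empty. Applied to the certificate template above, it would report only that the steady-state hypothesis has no probes.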

Azure lab environment

Our Azure environment will consist of the following:

- Azure Traffic Manager
  - Endpoints that point to both App Services are added once the App Services have been deployed.
- Azure App Services
  - One App Service per region (A & B).

Every App Service pulls in an application package from GitHub, which contains a static HTML file. This is just to make it very obvious where Traffic Manager has directed your request. The app hosted in region A displays “Hello from Region A” and the app hosted in region B displays “Hello from Region B”. Simple.

If you take a look at the ARM template, you will notice that this is, in fact, a subscription-level deployment. That is because I wanted to deploy a resource group per region (where applicable), to really drive home the point that most of these resources live in different regions. It’s a big nested template and I can only recommend splitting it up into multiple linked templates, but for the purposes of this experiment (no pun intended), we will leave it as it is.

You can deploy the template with the following PowerShell script. Note that we are using New-AzDeployment rather than New-AzResourceGroupDeployment, since we are performing a subscription-level deployment.

```powershell
<#
.SYNOPSIS
    Deploys the sample to Azure.

.DESCRIPTION
    Deploys the sample to Azure.

.EXAMPLE
    PS C:\> .\Deploy-AzureSample.ps1
#>
[CmdletBinding()]
param ()

#Requires -Modules @{ ModuleName="Az"; ModuleVersion="4.6.1" }
#Requires -Version 7

# Sign in interactively if there is no Azure context yet.
if (!(Get-AzContext)) {
    Connect-AzAccount
}

$context = Get-AzContext

if ($context) {
    $templateFile = Join-Path -Path $PSScriptRoot -ChildPath ".." -AdditionalChildPath "template", "azuredeploy.json"
    $templateParametersFile = Join-Path -Path $PSScriptRoot -ChildPath ".." -AdditionalChildPath "template", "azuredeploy.parameters.json"

    Write-Host -ForegroundColor Yellow "🚀 Starting subscription level deployment.."
    # New-AzDeployment (not New-AzResourceGroupDeployment) targets the subscription scope.
    $deployment = New-AzDeployment -Name "thomasvanlaere.com-Blog-Azure-Chaos-Engineering" -TemplateFile $templateFile -TemplateParameterFile $templateParametersFile -Location "westeurope" -Verbose

    if ($deployment.Outputs) {
        # Send a first request to each App Service.
        Write-Host -ForegroundColor Yellow "👉 Trigger website A.."
        Invoke-WebRequest -Uri ("https://{0}" -f $deployment.Outputs["regionAAppServiceUrl"].Value)
        Write-Host -ForegroundColor Yellow "👉 Trigger website B.."
        Invoke-WebRequest -Uri ("https://{0}" -f $deployment.Outputs["regionBAppServiceUrl"].Value)
    }
    else {
        Write-Warning -Message "No ARM template outputs found."
    }

    $deployment | Format-List

    Write-Host -ForegroundColor Yellow "👉 Creating Azure AD Service Principal.."
    $newSp = New-AzADServicePrincipal -Scope "/subscriptions/$($context.Subscription.Id)" -Role "Contributor" -DisplayName "thomasvanlaere.com-Blog-Azure-Chaos-Engineering" -ErrorVariable newSpError

    if (!$newSpError) {
        # -AsPlainText requires PowerShell 7, hence the #Requires above.
        $newSpSecret = ConvertFrom-SecureString -SecureString $newSp.Secret -AsPlainText
        Write-Host "Service principal - client ID: $($newSp.ApplicationId)"
        Write-Host "Service principal - client secret: $($newSpSecret)"
    }
    else {
        Write-Error "❗️ Could not create service principal!"
    }
}
else {
    Write-Error -Message "Not connected to Azure."
}
```

Once the deployment is done, you can browse to the Traffic Manager endpoint; its FQDN should be present in the deployment output. Fire up a browser and check whether you can access the App Services.
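If you prefer a script over a browser, here is a minimal Python sketch using only the standard library. It requests the Traffic Manager FQDN and reports which region answered, based on the “Hello from Region A/B” text. It assumes that text appears verbatim in the page. It also disables certificate checks, mirroring the "verify_tls": false setting the experiment uses, because the App Service certificate is unlikely to match the *.trafficmanager.net name. Only do this in a lab.

```python
import re
import ssl
import urllib.request

REGION_PATTERN = re.compile(r"Hello from Region ([AB])")


def region_from_body(body):
    """Return "A" or "B" if the page says which region served it, else None."""
    match = REGION_PATTERN.search(body)
    return match.group(1) if match else None


def which_region(fqdn, timeout=5.0):
    """Request https://<fqdn> and report the serving region (lab use only)."""
    # Certificate checks are switched off, as with "verify_tls": false.
    context = ssl.create_default_context()
    context.check_hostname = False
    context.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(f"https://{fqdn}", timeout=timeout, context=context) as response:
        return region_from_body(response.read().decode("utf-8", errors="replace"))
```

For example, which_region(os.environ["TRAFFIC_MANAGER_ENDPOINT"]) should return "A" or "B", depending on the routing method configured in the ARM template.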

Intermission

If you want to run the Chaos Toolkit experiment, update the devcontainer.env file with the output of the deployment script (the ARM template outputs and the service principal’s credentials) and restart the container. If you do not want to restart the container, you can set the environment variables with the export command instead.

```shell
export AZURE_CLIENT_ID=<your-client-id>
export AZURE_CLIENT_SECRET=<your-client-secret>
export AZURE_TENANT_ID=<your-tenant-id>
export AZURE_SUBSCRIPTION_ID=<your-subscription-id>
export REGION_A_APP_SERVICE=<your-app-service-a>
export REGION_A_RESOURCE_GROUP=tvl-chaosengineering-app-a-rg
export TRAFFIC_MANAGER_ENDPOINT=<your-traffic-manager>.trafficmanager.net
```

Chaos Toolkit experiment

Installing Chaos Toolkit is simple enough: start off by installing Python 3 and then run the following command.

```shell
pip install chaostoolkit
```

You can verify that the toolkit binary is working correctly by running:

```shell
chaos --version
```

At this stage, we have installed the Chaos Toolkit CLI and its core library. Next up, we need the Chaos Toolkit extension for Azure, which lets us target a few key Azure resource types:

```shell
pip install -U chaostoolkit-azure
```

Currently, the driver allows you to target the following resource types:

- Virtual Machine Scale Sets

- Virtual Machines

- Azure Kubernetes Service

- Azure App Services

Keep in mind that we do not need to be dependent on the Azure driver to perform certain actions or probes. We can always break out to a process, PowerShell for instance, that performs an entirely different set of actions. A driver gives a more convenient way of executing certain actions, with less ceremony involved.

With the chaos init command, we can create a new chaos experiment. When prompted, you can use the following information:

- Experiment’s title: What is the impact on our application chain when Region A’s App Service goes offline?

- Hypothesis’s title: Application responds

This action should create two new files: chaostoolkit.log and experiment.json. You can open the latter, it doesn’t contain all that much for now, but that will change in just a moment.

```json
{
  "version": "1.0.0",
  "title": "What is the impact on our application chain when Region A's App Service goes offline?",
  "description": "N/A",
  "tags": [],
  "steady-state-hypothesis": {
    "title": "Application responds",
    "probes": []
  }
}
```

First things first, we can add some tags to our experiment. Tags categorize your experiments, which becomes useful once you start building more of them.

```json
{
  "version": "1.0.0",
  "title": "What is the impact on our application chain when Region A's App Service goes offline?",
  "description": "N/A",
  "tags": [
    "azure",
    "traffic manager",
    "app services"
  ],
```

The next section contains our experiment’s configuration values: a JSON object that provides runtime values to actions and probes. The value of each property must be a JSON string or object. We can choose to assign a value statically or load it from an environment variable or a HashiCorp Vault instance.

Since I was thinking of the potential of running this in Azure DevOps, I decided I would load in the configuration values from the environment variables.

```json
  "configuration": {
    "azure_subscription_id": {
      "type": "env",
      "key": "AZURE_SUBSCRIPTION_ID"
    },
    "region_a_resource_group": {
      "type": "env",
      "key": "REGION_A_RESOURCE_GROUP"
    },
    "region_a_app_service": {
      "type": "env",
      "key": "REGION_A_APP_SERVICE"
    },
    "traffic_manager_endpoint": {
      "type": "env",
      "key": "TRAFFIC_MANAGER_ENDPOINT"
    }
  },
```
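For intuition, here is a simplified Python sketch of how entries like these resolve at run time. {"type": "env", "key": ...} entries are read from environment variables, and plain values are used as-is. This is an illustration, not Chaos Toolkit’s own loader. Raising on a missing variable is my own choice, and other entry types such as Vault are left out.

```python
import os


def resolve_configuration(configuration, environ=None):
    """Resolve {"type": "env", "key": ...} entries from the environment.

    Plain values are returned unchanged. Other entry types (such as Vault)
    are out of scope for this sketch.
    """
    environ = os.environ if environ is None else environ
    resolved = {}
    for name, value in configuration.items():
        if isinstance(value, dict) and value.get("type") == "env":
            key = value["key"]
            if key not in environ:
                raise KeyError(f"'{name}' needs the environment variable {key}")
            resolved[name] = environ[key]
        else:
            resolved[name] = value
    return resolved
```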

Secrets declare values that need to be passed on to actions or probes in a secure manner. Setting this up is very similar to setting up configuration properties. I could potentially pass these secret values in as container environment variables by connecting Azure Key Vault to an Azure DevOps pipeline.

```json
  "secrets": {
    "azure": {
      "client_id": {
        "type": "env",
        "key": "AZURE_CLIENT_ID"
      },
      "client_secret": {
        "type": "env",
        "key": "AZURE_CLIENT_SECRET"
      },
      "tenant_id": {
        "type": "env",
        "key": "AZURE_TENANT_ID"
      },
      "azure_cloud": "AZURE_PUBLIC_CLOUD"
    }
  },
```

The "steady-state-hypothesis" object describes what normal looks like in your system before the method element is applied. If the steady-state is not met, the method object is not applied and the experiment is stopped.

A steady-state-hypothesis object contains at least one probe, which is responsible for collecting information from the system. The probes’ output is validated against tolerances. In our case, the tolerance for the first probe of our steady-state-hypothesis element is the static value 200, which is the HTTP status code we expect our HTTP call to return. You can also match the output against a regular expression pattern, a JSONPath expression or a range.
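A simplified Python sketch of tolerance checking follows. A literal tolerance must equal the probe’s value (such as HTTP status 200). A list means "any of these". A dict of type "range" or "regex" checks bounds or a pattern. The dict shapes here are assumptions loosely modelled on the tolerance kinds described above. This is not Chaos Toolkit’s code, and JSONPath is left out.

```python
import re


def within_tolerance(tolerance, value):
    """Return True if a probe's `value` satisfies `tolerance`."""
    if isinstance(tolerance, list):
        return value in tolerance  # any of the listed values is acceptable
    if isinstance(tolerance, dict):
        kind = tolerance.get("type")
        if kind == "range":
            low, high = tolerance["range"]
            return low <= value <= high
        if kind == "regex":
            return re.search(tolerance["pattern"], str(value)) is not None
        raise ValueError(f"unsupported tolerance type: {kind!r}")
    return value == tolerance  # literal, e.g. the HTTP status code 200
```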

If the steady-state-hypothesis is met, the method element is executed. The method element is responsible for introducing chaos into the system. Usually, the method consists of at least one action but can also contain probes and other actions. Probes are purely used for analytical purposes in this stage. We are going to use just one action with which we will stop the app service in region A and wait for 40 seconds.

Next, the steady-state hypothesis is executed again and tells us if our baseline has deviated after the method was executed.

Finally, you can specify a set of rollback actions to revert some of the actions that occurred during the method phase. We will simply start the App Service in region A again.

```json
  "steady-state-hypothesis": {
    "title": "Application responds",
    "probes": [
      {
        "type": "probe",
        "name": "we-can-request-website",
        "tolerance": 200,
        "provider": {
          "type": "http",
          "timeout": 3,
          "verify_tls": false,
          "url": "https://${traffic_manager_endpoint}"
        }
      }
    ]
  },
  "method": [
    {
      "type": "action",
      "name": "stop-app-service-region-a",
      "provider": {
        "type": "python",
        "module": "chaosazure.webapp.actions",
        "func": "stop_webapp",
        "arguments": {
          "filter": "where resourceGroup=='${region_a_resource_group}' and name=='${region_a_app_service}'"
        },
        "secrets": [
          "azure"
        ],
        "config": [
          "azure_subscription_id"
        ]
      },
      "pauses": {
        "after": 40
      }
    }
  ],
  "rollbacks": [
    {
      "type": "action",
      "name": "start-app-service-region-a",
      "provider": {
        "type": "python",
        "module": "chaosazure.webapp.actions",
        "func": "start_webapp",
        "arguments": {
          "filter": "where resourceGroup=='${region_a_resource_group}' and name=='${region_a_app_service}'"
        },
        "secrets": [
          "azure"
        ],
        "config": [
          "azure_subscription_id"
        ]
      }
    }
  ]
}
```
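Here is a toy Python model of the lifecycle this experiment walks through. It checks the steady state, runs the method, checks the steady state again and then rolls back. Probes and actions are plain callables here, the two-region "lab" is a dictionary, and the returned labels are made up for this sketch. The real tool drives HTTP, Python and process providers from the JSON definition.

```python
def run_experiment(hypothesis, method, rollbacks):
    """Run a toy experiment made of plain callables.

    Returns "steady state not met", "completed" or "deviated"
    (labels chosen for this sketch).
    """
    if not all(probe() for probe in hypothesis):
        return "steady state not met"  # the method is never applied
    try:
        for action in method:
            action()
        deviated = not all(probe() for probe in hypothesis)
    finally:
        # Roll back even if an action raised an exception.
        for rollback in rollbacks:
            rollback()
    return "deviated" if deviated else "completed"


# Toy model of the lab: the site responds while at least one region is up.
regions = {"A": True, "B": True}


def site_responds():
    return any(regions.values())


def stop_region_a():
    regions["A"] = False


def start_region_a():
    regions["A"] = True


print(run_experiment([site_responds], [stop_region_a], [start_region_a]))  # completed
print(regions)  # {'A': True, 'B': True}
```

If region B were also down, the second steady-state check would fail and the sketch would report "deviated". Even then, the rollback would still bring region A back.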

The chaosazure Python actions accept a filter, which is simply an Azure Resource Graph query. We can build the filter dynamically using Chaos Toolkit’s built-in variable substitution. These dynamic values must follow the ${name} syntax, where name is an identifier declared in either the configuration or the secrets section of the experiment. If “name” is declared in both sections, the configuration section takes precedence.
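The ${name} syntax and the precedence rule can be mimicked with string.Template and ChainMap from Python’s standard library. Because the configuration is searched first, it wins on name clashes. This is a sketch of the documented behaviour, not Chaos Toolkit’s implementation, and the app name below is hypothetical.

```python
from collections import ChainMap
from string import Template


def substitute(text, configuration, secrets):
    """Replace ${name} placeholders; configuration wins over secrets."""
    # Secrets are grouped by scope (e.g. "azure"), so search each scope in turn,
    # after the configuration.
    lookup = ChainMap(configuration, *secrets.values())
    # safe_substitute leaves unknown placeholders untouched instead of raising.
    return Template(text).safe_substitute(lookup)


config = {"region_a_resource_group": "tvl-chaosengineering-app-a-rg",
          "region_a_app_service": "my-app-a"}  # hypothetical app name
print(substitute("where resourceGroup=='${region_a_resource_group}' "
                 "and name=='${region_a_app_service}'", config, {}))
# where resourceGroup=='tvl-chaosengineering-app-a-rg' and name=='my-app-a'
```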

Running the experiment is as simple as:

```shell
chaos run experiment.json
#[2020-09-30 00:00:00 INFO] Validating the experiment's syntax
#[2020-09-30 00:00:00 INFO] Experiment looks valid
#[2020-09-30 00:00:00 INFO] Running experiment: What is the impact on our application chain when Region A's App Service goes offline?
#[2020-09-30 00:00:00 INFO] Steady-state strategy: default
#[2020-09-30 00:00:00 INFO] Rollbacks strategy: default
#[2020-09-30 00:00:00 INFO] Steady state hypothesis: Application responds
#[2020-09-30 00:00:00 INFO] Probe: we-can-request-website
#[2020-09-30 00:00:00 INFO] Steady state hypothesis is met!
#[2020-09-30 00:00:00 INFO] Playing your experiment's method now...
#[2020-09-30 00:00:00 INFO] Action: stop-app-service-region-a
#[2020-09-30 00:00:00 INFO] Pausing after activity for 20s...
#[2020-09-30 00:00:00 INFO] Steady state hypothesis: Application responds
#[2020-09-30 00:00:00 INFO] Probe: we-can-request-website
#[2020-09-30 00:00:00 INFO] Steady state hypothesis is met!
#[2020-09-30 00:00:00 INFO] Let's rollback...
#[2020-09-30 00:00:00 INFO] Rollback: start-app-service-region-a
#[2020-09-30 00:00:00 INFO] Action: start-app-service-region-a
#[2020-09-30 00:00:00 INFO] Experiment ended with status: completed
```

We can now more confidently say that if the App Service in region A goes down, our users will still be able to use our application, as Traffic Manager will redirect them to region B.

Performing the run command also outputs a journal.json file, which logs all events that took place during the experiment’s run. The journal contains static information, such as the experiment that was run, as well as runtime entries. You can even turn this JSON output into a Markdown, HTML or PDF report with the “chaos report” command, which is provided by the chaostoolkit-reporting plugin.
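In a pipeline, you may want to turn the journal into a pass/fail signal. The Python sketch below assumes the journal exposes "status", "deviated" and "steady_states" (with "before"/"after" entries carrying "steady_state_met"). Check those keys against a journal from your own run before relying on them.

```python
import json


def experiment_passed(journal):
    """True if the run completed and the steady state did not deviate."""
    return journal.get("status") == "completed" and not journal.get("deviated", False)


def summarise_journal(journal):
    """One-line summary built from the (assumed) journal keys."""
    steady = journal.get("steady_states") or {}
    before = (steady.get("before") or {}).get("steady_state_met")
    after = (steady.get("after") or {}).get("steady_state_met")
    return (f"status={journal.get('status', 'unknown')} "
            f"deviated={journal.get('deviated', False)} before={before} after={after}")


def load_journal(path="journal.json"):
    with open(path, encoding="utf-8") as fh:
        return json.load(fh)
```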

In closing

This is just scratching the surface of what is possible in the realm of chaos engineering, and I can absolutely see the benefits of incrementally building a team’s confidence in its system. Even though Chaos Toolkit might look like a very simple tool, I think it can be particularly useful when you’re just starting out with chaos engineering.

If you’d like to read more about this subject in the context of Azure, I’d suggest starting off with the Chaos engineering docs over at the Azure Architecture Center. Need to get the inspiration going? Take a look at the section in the docs page related to faults that you can potentially inject into your system.

Noticed any grotesque mistakes? Feel free to let me know!
