Kubernetes in a Microsoft World - Part 1

In May 2019 my colleague Micha Wets and I met at Azure Saturday 2019 at the Microsoft HQ in Munich to talk about Kubernetes in a Microsoft World. We discussed what such a world would look like for someone who primarily runs a Microsoft-based technology stack. In more detail, we showed the audience what we had noticed among our own customers, the innovations in Windows Server Containers, and how Kubernetes works with Windows-based worker nodes.

Even though some time has passed and this post might seem rather irrelevant at first glance, I frequently come across system- and software engineers who have not yet had the pleasure of getting their hands dirty with this technology. More often than not they have heard of the benefits that containers can bring to the table, which most of the people I’ve spoken to link to performance (though this is not always the case). Hopefully this blog post can solidify your own understanding of containers, Kubernetes and how it all fits together.

A trend

A lot of our customers want to take advantage of “the cloud”. Customers nowadays want, and need, to innovate quickly. Gone are the days of deploying once every quarter; now customers want to deploy continuously. Ultimately, customers want to get to the cloud, in our case Microsoft Azure, fast. How do we go about doing just that?

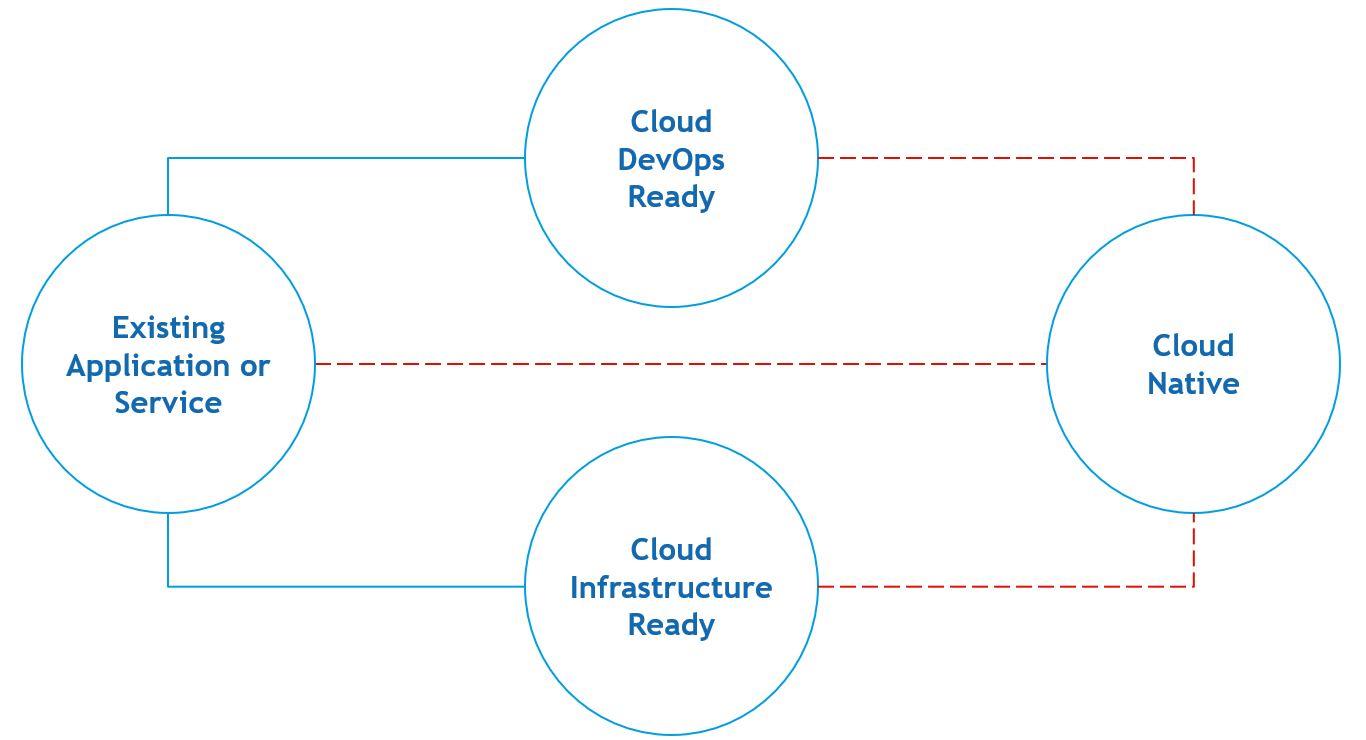

The way we, and Microsoft, see it there are three approaches when it comes to moving to the cloud.

Cloud Infrastructure Ready

A lift and shift approach with little to no code changes. Typically this will require you to perform an assessment with Azure Migrate, followed by a migration using Azure Site Recovery. The latter will allow you to migrate with the least amount of effort. There’s even an option to perform a test migration. The chances are that your application will continue to work once the virtual machines are active on the Azure side.

You will still be responsible for ensuring high-availability, auto-scaling, patching, etc. Be very aware of this fact, for it is no small thing.

Cloud DevOps Ready

Another lift and shift approach with little to no code changes. Containers are the stars of the show in this space. Containers will enable you to lower your deployment cost, increase your productivity and can potentially set you up with an easier path to achieving high-availability.

You must be aware of the possibly steep learning curve that may become an issue for some teams. I would suggest implementing this approach amongst teams that are fairly comfortable with change.

Cloud Native Ready

(Re)architect your solution to take advantage of all of the agility that comes with certain PaaS features. This approach incorporates many of the approaches and practices that we have seen throughout the last couple of years when it comes to:

- Infrastructure as code

- Modular applications with microservices

- Automatic failover

- Monitoring, logging, alerting

- Automated recovery

This may require a considerable amount of work and effort if you truly want to get the most out of the Microsoft Azure platform.

What could go wrong?

When it rains, it pours.

Depending on the path you wish to take on your cloud journey, you might need to become a little, or perhaps a lot, more agile in ways you might not even have considered. So where might this go wrong?

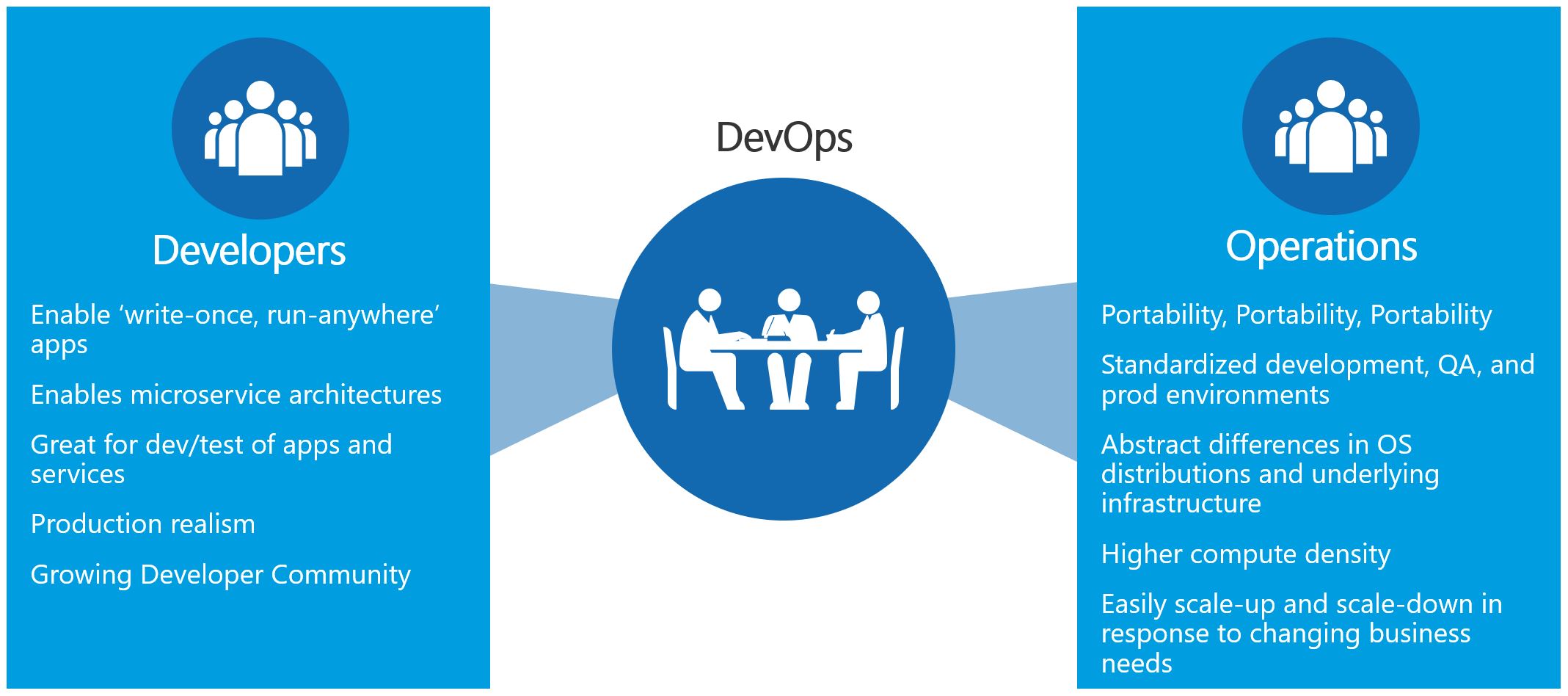

As a developer I might not have full access to a server, and yet I might need it right at this very second. But alas, the operations person is enjoying their much-needed time off. Or perhaps your application is not behaving the same way it did on your machine, and now you still require assistance from someone on the ops team.

The same can be said from the perspective of the operations person. He or she has to install a new application. But alas, there seems to be an issue with the application and now the operations person needs assistance from a developer. Now the operations person has to wait until the developer returns from sick leave.

I’m sure these situations will be familiar to some. They hardly constitute an agile way of delivering value to your customers.

Developers need:

- ‘Write-once, run-anywhere’ apps;

- Support for microservices architectures;

- A good fit for dev/test of apps and services;

- Production realism;

- A growing developer community.

IT Pros need:

- Portability;

- Standardized development, QA, and prod environments;

- Abstraction of differences in OS distributions and underlying infrastructure;

- Higher compute density;

- The ability to easily scale up and scale down in response to changing business needs.

DevOps & Containers

The problems I described in the previous section can potentially be solved by containers. A container is a standardized package that you do not have to debug and troubleshoot all over again in another environment. It contains all of the dependencies your application needs in order to run, and if you make sure you only add the dependencies you truly need, you can potentially improve performance, density and security. You’ll be able to deliver value faster, and tasks like onboarding new developers become much more streamlined and simplified.

Having something that encapsulates an entire environment is incredibly powerful, because you’ll know exactly which ports are used, which scripts need to be executed, and so on. No more reading through a script or an email in which a developer has listed all the steps needed to get an application up and running.

Containers

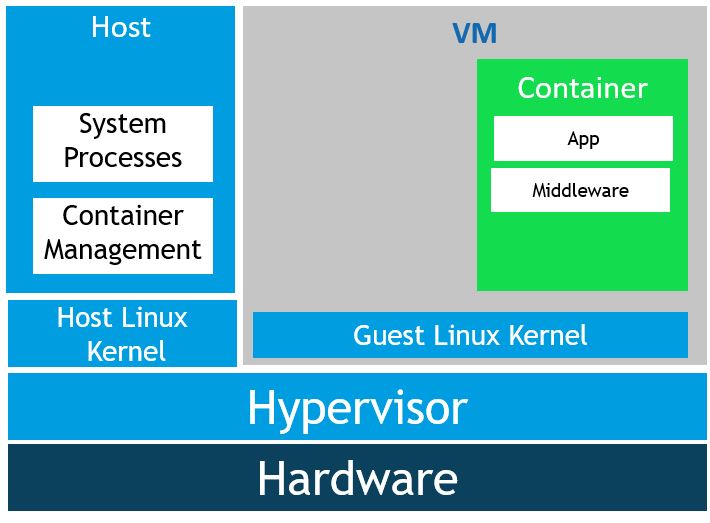

Since the aim of the talk was not to dwell too long on the internals of containers, we only briefly discussed which types of containers you have at your disposal and how they compare to virtual machines. Luckily for me, I am not constrained by time this time around.

In its simplest form, a container is a form of process isolation. Docker containers work more or less the same way on Windows and Linux. There are no macOS container images available. Edit 2020/04/20: I’ve come across a talk by Chris Chapman of MacStadium in which he describes the process of containerizing macOS. It’s a process involving genuine Apple hardware, QEMU, KVM, etc. Link to the talk, here.

That is not to say you cannot do any container-based development on macOS. For Linux containers on Windows and macOS, Docker actually runs a small Linux-based VM on the host, which in turn hosts your containers.

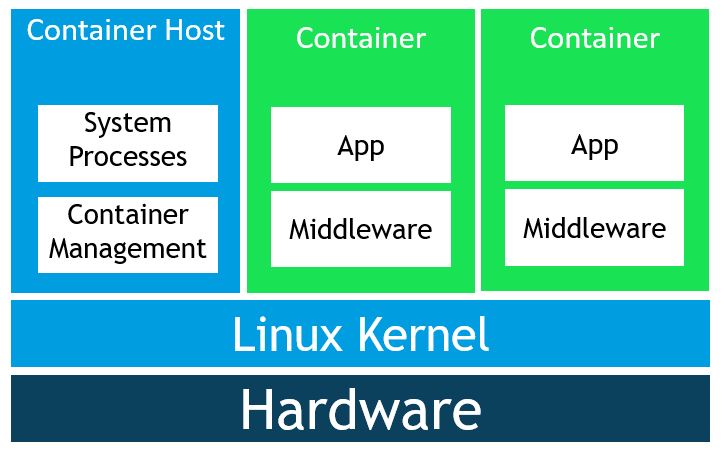

Containers are isolated groups of processes running on a host. To isolate them, container runtimes rely on a common set of features, some of which are already present in the Linux kernel. One such feature is namespace isolation.

A Linux namespace determines which resources a specific application can interact with, such as network ports, the list of running processes and files. Namespace isolation enables the container host to give each container a virtualized namespace that includes only the resources it should be allowed to see. With this restricted view, a container cannot access files that are not included in its virtualized namespace, regardless of permissions, because it simply can’t see them. Nor can it list or interact with applications that are not part of the container.
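You can observe namespaces directly on a Linux host. The Python sketch below is Linux-only and assumes /proc is mounted; the function name `current_namespaces` is hypothetical. It reads the symbolic links under /proc/self/ns, one per namespace type. Processes that share a namespace see the same identifier, so running it on the host and then inside a container should show different values for namespaces such as net, pid and mnt.

```python
import os

NS_DIR = "/proc/self/ns"  # Linux-only: one symlink per namespace type


def current_namespaces() -> dict[str, str]:
    """Return {namespace type: identifier} for the calling process."""
    if not os.path.isdir(NS_DIR):  # not Linux, or /proc is not mounted
        return {}
    namespaces: dict[str, str] = {}
    for kind in sorted(os.listdir(NS_DIR)):
        try:
            # Each link reads like "net:[4026531840]"; processes that share
            # a namespace see the same number.
            namespaces[kind] = os.readlink(os.path.join(NS_DIR, kind))
        except OSError:
            continue  # some entries may be unreadable in restricted sandboxes
    return namespaces


if __name__ == "__main__":
    for kind, ident in current_namespaces().items():
        print(f"{kind:<20} {ident}")
```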

Another such feature is control groups (cgroups).

The host uses cgroups to control how much of the host’s resources a container can use. This way we can set limits on resources like CPU, RAM and network bandwidth to ensure that a container gets the resources it expects and that it doesn’t impact the performance of other containers running on the host.
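On a host that uses cgroup v2, such limits end up as plain values in files like `cpu.max` and `memory.max` inside the container’s cgroup directory (cgroup v1 uses different files, such as `cpu.cfs_quota_us` and `cpu.cfs_period_us`). The Python sketch below assumes cgroup v2 and the default 100 ms CPU period; it only formats the values and does not write them, which would require root. The helper names are hypothetical. A limit of half a CPU becomes a quota of 50,000 µs per 100,000 µs period, which is roughly what Docker sets up for `docker run --cpus=0.5`.

```python
DEFAULT_PERIOD_US = 100_000  # default CPU period: 100 ms, in microseconds


def cpu_max(cpus: float, period_us: int = DEFAULT_PERIOD_US) -> str:
    """Format a CPU limit (e.g. 0.5 CPUs) as a cgroup v2 cpu.max value: "<quota> <period>"."""
    if cpus <= 0:
        raise ValueError("cpus must be positive")
    # In each period, the group may use at most `quota` microseconds of CPU time.
    quota_us = round(cpus * period_us)
    return f"{quota_us} {period_us}"


def memory_max(mebibytes: int) -> str:
    """Format a memory limit as a cgroup v2 memory.max value, in bytes."""
    if mebibytes <= 0:
        raise ValueError("mebibytes must be positive")
    return str(mebibytes * 1024 * 1024)


if __name__ == "__main__":
    # A container limited to half a CPU and 256 MiB of RAM.
    print("cpu.max    =", cpu_max(0.5))     # 50000 100000
    print("memory.max =", memory_max(256))  # 268435456
```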

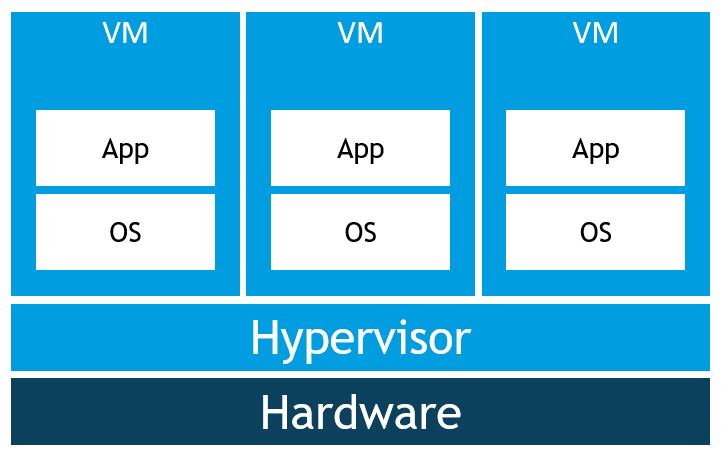

Virtual Machines

I am certain you know how this type of setup works. We typically have a virtual machine or set of virtual machines that we use for a specific application or task. Ideally we want to have some separation of concerns.

- Specific VMs for web servers

- Specific VMs for database servers, RDS hosts, etc.

All of these virtual machines run their own copy of an operating system, which requires licensing (not all of the time!), RAM, disk space, CPU cycles, etc. This brings along additional issues, such as more update management and greater infrastructural complexity.

Linux Containers

Containers abstract away the VM model even further, which is why containers are often referred to as operating system virtualization.

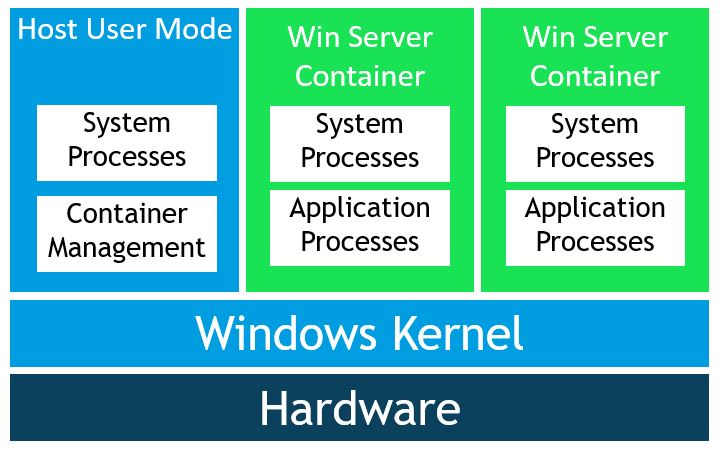

Windows Server Containers

Windows has two types of container runtimes.

Windows Server Containers provide application isolation through process and namespace isolation, much like the Linux example. Each container shares a kernel with the container host and with all other containers running on that host, so these containers require the same kernel version and configuration. Because of the shared kernel, process-isolated containers should not be used to run untrusted code in potentially hostile multi-tenant hosting scenarios.

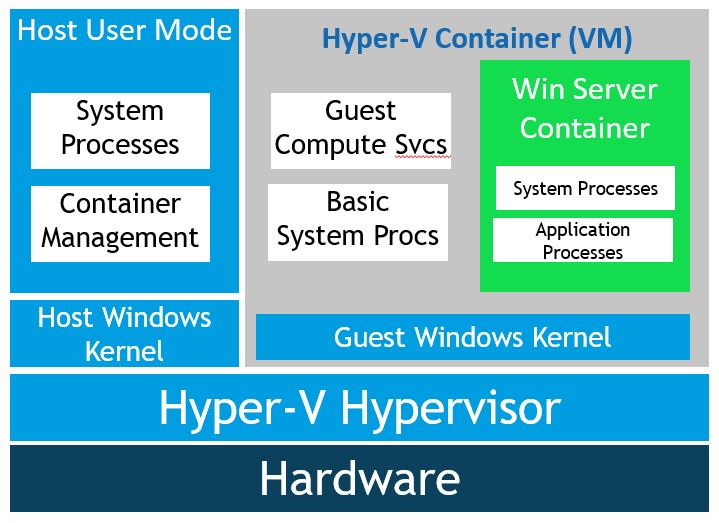

Windows Hyper-V Containers

The second type of container runtime solves the issue of running untrusted code on a shared kernel. Windows Server Hyper-V containers are generally used when security is of the utmost importance. Every container is wrapped in a highly optimized virtual machine, which is often referred to as the utility VM.

In this configuration, the kernel of the container host is not shared with other containers on the same host. This also allows you to run a different kernel version on the host and inside your container. It’s a more lightweight alternative to running a separate, full-blown VM for each workload.

There is nothing you have to manage about these VMs, nor do they have any state associated with them, because writes are not persisted. To Docker, it’s just another flag that gets set in order to run a specific container. This is the default configuration when you run Windows containers on Windows 10.

As for startup times, it takes a few seconds to get the utility VM up and running. Once the startup process reaches a point where it has a specific kernel state and has loaded a couple of different processes, the utility VM’s state gets frozen. Every time a new Hyper-V container is started, it forks the utility VM state and injects the container into the VM. This significantly reduces startup time for subsequent Hyper-V containers.

Linux Kata Containers

And even though we did not discuss this in our session, there is also a way to add that additional layer of isolation to your Linux containers.

The Kata Containers project combines technologies from Intel Clear Containers and Hyper.sh RunV. I believe that at a high level they’re quite similar to Hyper-V containers. They provide that extra security boundary for Linux containers that some customers might require when measures such as AppArmor or other host hardening techniques aren’t enough.

Kata Containers is being worked on by a multitude of different companies including Microsoft, Intel, Google and Amazon.

Then what is Docker?

There once was a company called Docker Inc. that built a tool called Docker, which was originally built on top of the Linux Containers (LXC) software stack.

Working with Linux namespaces is not for everyone, and there is a bit of setup involved in getting containers to work. One of Docker’s innovations was that developers and ops were able to use a common toolkit to package containers into Docker images and use them as an application delivery mechanism. This meant that you could easily distribute your containers between different machines.

Docker Images

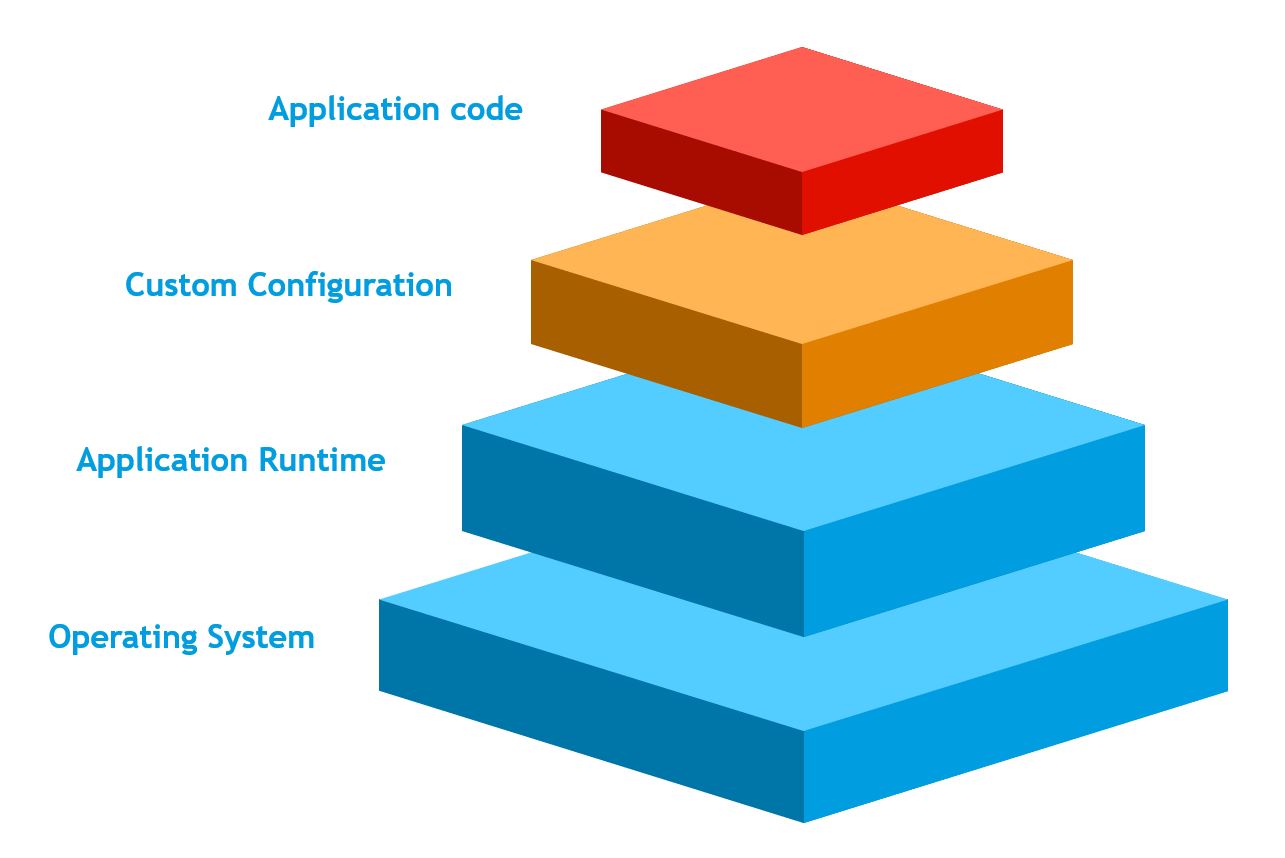

In order to use a container you will need a container image, and in order to build a container image you will need a Dockerfile. The goal is to build a self-contained representation of your app and its dependencies. No need to fret if you haven’t heard of a Dockerfile before. Allow me to explain.

Suppose you want to run your favorite .NET 3.5 (I know) ASP.NET MVC web application through IIS inside a Windows Server Container. You will need to take a long, hard look at all of the dependencies it needs, down to the public Windows APIs. If you’re familiar with how Windows user mode works, you know you are in for a treat.

This can be a daunting task. Luckily, most of the time you do not need to worry about mapping out all of those dependencies, thanks to base images. A base image contains a set of dependencies that you expect to be part of a certain OS.

You should also think about the other steps that are required to get your application up and running. Perhaps you need to change some environment variables, add a key to the registry, or install a specific binary or PowerShell module. We will need to add all of them to the Dockerfile.

Here is an example of how this is defined in a Dockerfile:

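```dockerfile
FROM mcr.microsoft.com/windows/servercore:ltsc2019

# Optional metadata (placeholder values); filling in a few fields is good practice.
LABEL description="Legacy ASP.NET MVC app on .NET 3.5" version="1.0"

# Enable .NET 3.5 and IIS. Server Core may not contain the .NET 3.5 payload, in which case DISM
# needs a /Source, or you can start from Microsoft's mcr.microsoft.com/dotnet/framework images.
RUN dism.exe /online /enable-feature /all /FeatureName:NetFx3 /NoRestart
RUN dism.exe /online /enable-feature /all /featurename:iis-webserver /NoRestart

# Copy the build output into the default IIS site.
COPY build C:/inetpub/wwwroot/

# Interactive shell for `docker run -it`; Microsoft's IIS images run ServiceMonitor.exe w3svc instead.
CMD [ "cmd" ]
```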

We’re saying that we want our image to be based on whatever is already packaged inside the Docker image “mcr.microsoft.com/windows/servercore” tagged with “ltsc2019”. The tag refers to the Long-Term Servicing Channel. With this we will have most of the dependencies that come with Windows.

Next up, we can add some metadata to our container image. Although most of it is optional, it is considered good practice to fill in some of these fields.

Let’s take a step back and figure out where we would be if we were working with a virtual machine. At this stage we would have only installed our Windows operating system. Next we would need to configure IIS and .NET 3.5 through the Windows Server features installer and copy our binaries over so IIS can work with them. For simplicity’s sake I’m dropping those in the default wwwroot.

In our Dockerfile we are doing the same thing. Every instruction in the Dockerfile is essentially a step that gets executed, in order, to get our application to work.

Microsoft 💖 Docker

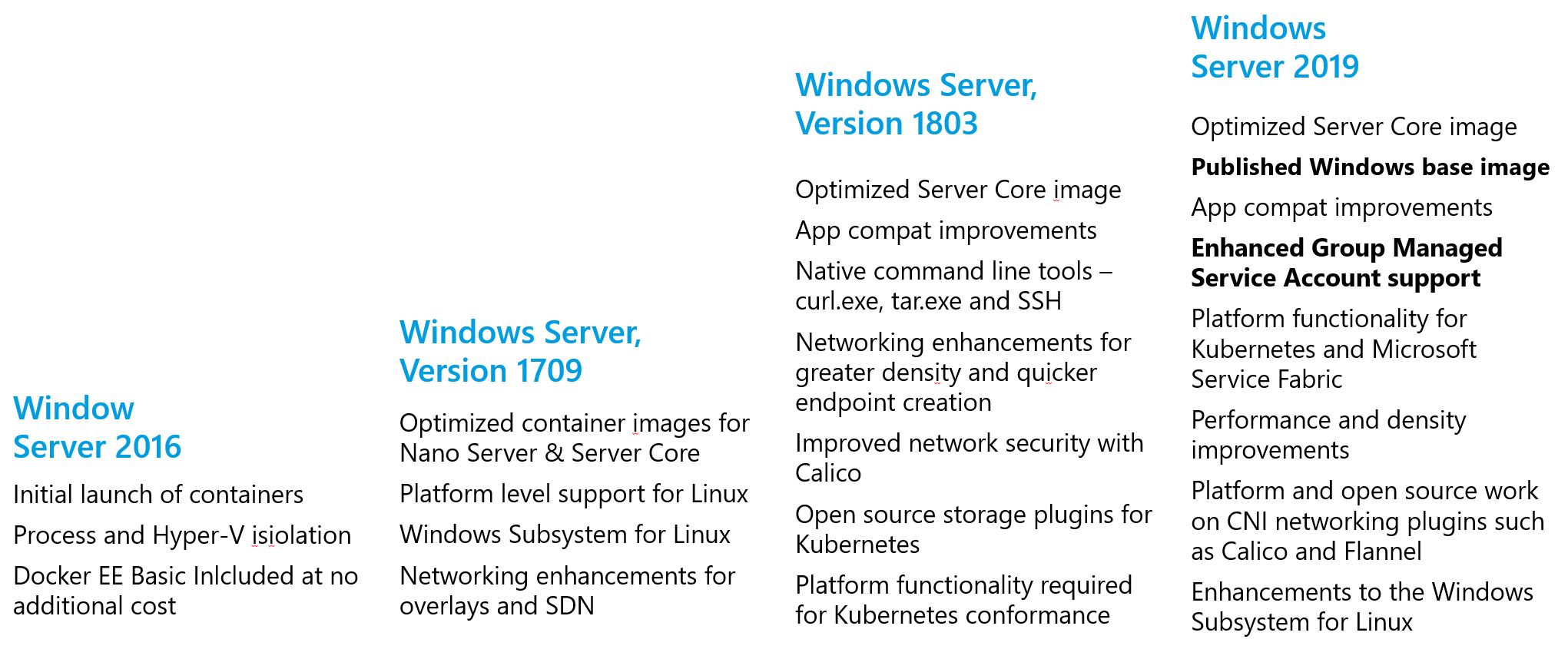

In 2014 Microsoft announced that it would enter into a partnership with Docker Inc. Since then, support for Windows-based containers has been fully integrated into Windows Server and Windows 10.

If we take a look at the historical overview of all the container-related features that have been added to Windows Server since then, you will see that Microsoft is taking containers very seriously.

Server 2019 included a couple of very interesting features.

- Firstly, for a lot of enterprise use cases the most interesting one was probably the enhanced group Managed Service Account (gMSA) support. This allows you to use a gMSA to enable Active Directory authentication from within your containerized applications, which meant that our old web applications using Windows authentication could finally get the full DevOps treatment. Be aware, though, that even though gMSA works alright with Docker, I still find it somewhat finicky to use with all the different flavours of Kubernetes (OpenShift, AKS, etc.).

- A second great feature was the release of the Windows base image. This one is special because it comes with the full Win32 API included, allowing you to use, for instance, the Graphics Device Interface (GDI) libraries should you need them.

Some features that stand out in Windows Server, version 1903:

- Tigera Calico for Windows now generally available

- Enhanced overlay networking support

- Enabled GPU acceleration for DirectX applications in containers

- Added Credential Spec module to the PowerShell Gallery

- Integrated CRI-Containerd to support Windows container pods and Linux container pods on Windows

- Windows Admin Center container extension is now GA

DirectX support is actually the big one in this list, because with it came the announcement that you can access the GPU on the Windows host from containers running in process-isolation mode.

Here is a final rundown of when you could use the different Windows Docker images:

| Image type | Image size | Key frameworks | Use case | Available since |
|---|---|---|---|---|
| Nano Server | 100 MB | .NET Core | New apps | Server 2016 |
| Server Core | 1.49 GB | .NET Framework | Traditional server workloads | Server 2016 |
| Windows | 3.57 GB | Win32 API | DirectX, print servers, new app experiences | Server 2019 |
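The table reads like a decision rule: pick the smallest image that still provides the frameworks you need. The Python sketch below encodes it under a simplifying assumption of its own, namely that each larger image can host everything the smaller ones can; the framework labels and the function name are hypothetical, and the sizes are the approximate figures from the table. For the .NET 3.5 application in the Dockerfile above, it lands on Server Core.

```python
# (image type, approximate size in MB from the table, frameworks it can host).
# Simplifying assumption: a larger image can host everything a smaller one can.
BASE_IMAGES: list[tuple[str, int, set[str]]] = [
    ("Nano Server", 100, {".NET Core"}),
    ("Server Core", 1490, {".NET Core", ".NET Framework"}),
    ("Windows", 3570, {".NET Core", ".NET Framework", "full Win32 API"}),
]


def smallest_base_image(required: set[str]) -> str:
    """Return the smallest image type that provides every framework in `required`."""
    for name, _size_mb, frameworks in sorted(BASE_IMAGES, key=lambda image: image[1]):
        if required <= frameworks:  # set inclusion: everything required is available
            return name
    raise ValueError(f"no base image provides {sorted(required)}")


if __name__ == "__main__":
    print(smallest_base_image({".NET Framework"}))  # Server Core
```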

What about those image sizes? A few years ago these were fairly large, but as you can probably tell, that is due to the differences in OS architecture. If you consider all the dependencies that come with each of these images, it makes sense that they take up quite a bit of space. This is something the teams at Microsoft are constantly improving upon.

On Compatibility

Windows containers are offered with four container base flavors. Not all configurations support true process isolation, as the comparison tables below show.

Windows Server, version 1903 host OS compatibility

| Container OS | Supports Hyper-V isolation | Supports process isolation |
|---|---|---|
| Windows Server, version 1903 | Yes | Yes |
| Windows Server 2019 | Yes | No |
| Windows Server, version 1803 | Yes | No |
| Windows Server, version 1709* | Yes | No |
| Windows Server 2016 | Yes | No |

Windows 10, version 1903 host OS compatibility

| Container OS | Supports Hyper-V isolation | Supports process isolation |
|---|---|---|
| Windows Server, version 1903 | Yes | No |
| Windows Server 2019 | Yes | No |
| Windows Server, version 1803 | Yes | No |
| Windows Server, version 1709* | Yes | No |
| Windows Server 2016 | Yes | No |
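If you script your deployments, the two tables can be encoded as data and used to pick an isolation mode. The Python sketch below copies the rows above; `choose_isolation` and its `trusted` parameter are hypothetical. It returns "process" or "hyperv", which are the values Docker’s `--isolation` option accepts on Windows, and it follows the earlier advice that untrusted code should not run in process isolation on a shared kernel.

```python
# (supports Hyper-V isolation, supports process isolation), copied from the two tables above.
# The asterisk on version 1709 is dropped from the keys.
COMPATIBILITY: dict[str, dict[str, tuple[bool, bool]]] = {
    "Windows Server, version 1903": {
        "Windows Server, version 1903": (True, True),
        "Windows Server 2019": (True, False),
        "Windows Server, version 1803": (True, False),
        "Windows Server, version 1709": (True, False),
        "Windows Server 2016": (True, False),
    },
    "Windows 10, version 1903": {
        "Windows Server, version 1903": (True, False),
        "Windows Server 2019": (True, False),
        "Windows Server, version 1803": (True, False),
        "Windows Server, version 1709": (True, False),
        "Windows Server 2016": (True, False),
    },
}


def choose_isolation(host_os: str, container_os: str, trusted: bool = True) -> str:
    """Return "process" or "hyperv" for a host/container OS pair from the tables.

    Process isolation is preferred for trusted code because it avoids the utility VM;
    untrusted code always gets Hyper-V isolation. Raises KeyError for pairs that are
    not listed in the tables.
    """
    hyperv, process = COMPATIBILITY[host_os][container_os]
    if process and trusted:
        return "process"
    if hyperv:
        return "hyperv"
    raise ValueError(f"{container_os} is not supported on {host_os}")


if __name__ == "__main__":
    print(choose_isolation("Windows Server, version 1903", "Windows Server 2019"))  # hyperv
```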

When you use process isolation, it is critically important that your container Windows version matches your host Windows version. Here is a quote from the Microsoft Docs regarding this topic:

Windows Server containers are blocked from starting when the build number between the container host and the container image are different. For example, when the container host is version 10.0.14393.* (Windows Server 2016) and container image is version 10.0.16299.* (Windows Server version 1709), the container won’t start.

As long as your hosts and images are using Windows Server, version 1709 or later, you do not need to worry about containers being blocked because of a difference in revision numbers.

Windows Server containers aren’t blocked from starting when the revision numbers of the container host and the container image are different. For example, if the container host is version 10.0.14393.1914 (Windows Server 2016 with KB4051033 applied) and the container image is version 10.0.14393.1944 (Windows Server 2016 with KB4053579 applied), then the image will still start even though their revision numbers are different.

Be aware, though, that for Windows Server 2016-based hosts or images, the container image’s revision number must match the host’s for the configuration to be supported, even though the container will still start.
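The build and revision rules above can be condensed into a small check. The Python sketch below assumes full four-part version strings such as 10.0.14393.1914 and only models process-isolated Windows Server containers; the names `Outcome`, `WindowsVersion` and `process_isolation_outcome` are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Outcome(Enum):
    BLOCKED = "blocked from starting"
    SUPPORTED = "starts, supported"
    UNSUPPORTED = "starts, but unsupported"


WINDOWS_SERVER_2016_BUILD = 14393  # 10.0.14393 is Windows Server 2016


@dataclass(frozen=True)
class WindowsVersion:
    major: int
    minor: int
    build: int
    revision: int

    @classmethod
    def parse(cls, text: str) -> "WindowsVersion":
        """Parse a four-part version string such as "10.0.14393.1914"."""
        major, minor, build, revision = (int(part) for part in text.split("."))
        return cls(major, minor, build, revision)


def process_isolation_outcome(host: str, image: str) -> Outcome:
    """Apply the build/revision rules for process-isolated Windows Server containers."""
    h, i = WindowsVersion.parse(host), WindowsVersion.parse(image)
    # Different build numbers: the container is blocked from starting.
    if (h.major, h.minor, h.build) != (i.major, i.minor, i.build):
        return Outcome.BLOCKED
    # Same build, different revision: the container starts, but on
    # Windows Server 2016 the revisions must match to be supported.
    if h.revision != i.revision and h.build == WINDOWS_SERVER_2016_BUILD:
        return Outcome.UNSUPPORTED
    return Outcome.SUPPORTED


if __name__ == "__main__":
    # The example from the quoted docs: 2016 host and image with different revisions.
    print(process_isolation_outcome("10.0.14393.1914", "10.0.14393.1944").value)
```

Running it prints "starts, but unsupported" for the Windows Server 2016 example quoted above, while the 10.0.14393 host with a 10.0.16299 image comes back as blocked.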
